Class-Balanced Loss Based on Effective Number of Samples

TensorFlow code for the paper:

Class-Balanced Loss Based on Effective Number of Samples

Yin Cui, Menglin Jia, Tsung-Yi Lin, Yang Song, Serge Belongie

Dependencies:

- Python (3.6)

- TensorFlow (1.14)

Datasets:

- Long-Tailed CIFAR.

We provide a download link that includes all the data used in our paper in .tfrecords format. The data was converted and generated by src/generate_cifar_tfrecords.py (original CIFAR) and src/generate_cifar_tfrecords_im.py (long-tailed CIFAR).

Effective Number of Samples:

For a visualization of the data and effective number of samples, please take a look at data.ipynb.
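As a quick reference alongside the notebook, the effective number of samples defined in the paper is E_n = (1 - β^n) / (1 - β), where n is the number of samples in a class and β lies in [0, 1). The sketch below evaluates that formula in plain Python; the function name `effective_number` is hypothetical and is not taken from this repository.

```python
def effective_number(n, beta):
    """Effective number of samples E_n = (1 - beta**n) / (1 - beta).

    n    : number of samples in a class (positive integer)
    beta : hyperparameter in [0, 1); beta = 0 gives E_n = 1,
           and E_n approaches n as beta approaches 1.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must be in [0, 1)")
    return (1.0 - beta ** n) / (1.0 - beta)


if __name__ == "__main__":
    # Compare a head class and a tail class for a few values of beta.
    for beta in (0.9, 0.99, 0.999, 0.9999):
        head = effective_number(5000, beta)
        tail = effective_number(50, beta)
        print(f"beta={beta}: head={head:.1f}, tail={tail:.1f}")
```

For small β every class has an effective number close to 1 / (1 - β), so head and tail classes look alike. As β approaches 1 the effective number approaches the raw sample count.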

Key Implementation Details:

- Weights for class-balanced loss

- Focal loss

- Assigning weights to different loss

- Initialization of the last layer
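The four items above can be sketched in plain Python as follows. This is an illustration based on the formulas in the paper, not a copy of the repository's TensorFlow code. All function names are hypothetical, and two choices are assumptions here: rescaling the class weights so they sum to the number of classes, and setting the last-layer bias from a class prior π (for example π = 1/C).

```python
import math


def class_balanced_weights(samples_per_class, beta):
    """Per-class weights proportional to (1 - beta) / (1 - beta**n_i)."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must be in [0, 1)")
    if any(n <= 0 for n in samples_per_class):
        raise ValueError("every class needs at least one sample")
    raw = [(1.0 - beta) / (1.0 - beta ** n) for n in samples_per_class]
    # Assumption: rescale so that the weights sum to the number of classes.
    scale = len(raw) / sum(raw)
    return [w * scale for w in raw]


def _sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def _softplus(x):
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def sigmoid_focal_loss(logit, target, gamma):
    """Focal loss -(1 - p_t)**gamma * log(p_t) for one binary target (0 or 1)."""
    z = logit if target == 1 else -logit  # p_t = sigmoid(z)
    return (_sigmoid(-z) ** gamma) * _softplus(-z)


def class_balanced_focal_loss(logits, label, weights, gamma):
    """Weight the summed one-vs-all focal loss by the true class's weight."""
    per_class = [
        sigmoid_focal_loss(z, 1 if i == label else 0, gamma)
        for i, z in enumerate(logits)
    ]
    return weights[label] * sum(per_class)


def last_layer_bias(prior):
    """Bias b = -log((1 - prior) / prior), so that sigmoid(b) equals prior."""
    return -math.log((1.0 - prior) / prior)


if __name__ == "__main__":
    weights = class_balanced_weights([5000, 500, 50], beta=0.999)
    print("weights:", [round(w, 3) for w in weights])
    print("loss:", class_balanced_focal_loss([2.0, -1.0, 0.5], 2, weights, 1.0))
    print("bias for a 10-class prior:", last_layer_bias(0.1))
```

With gamma set to 0 the focal term reduces to the ordinary sigmoid cross-entropy, and with beta set to 0 all class weights equal 1, so the same functions cover the unweighted baselines.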

Training and Evaluation:

We provide 3 .sh scripts for training and evaluation.

- On original CIFAR dataset:

./cifar_trainval.sh

- On long-tailed CIFAR dataset (the hyperparameter IM_FACTOR is the inverse of "Imbalance Factor" in the paper):

./cifar_im_trainval.sh

- On long-tailed CIFAR dataset using the proposed class-balanced loss (set non-zero BETA):

./cifar_im_trainval_cb.sh

- Run TensorBoard for visualization:

tensorboard --logdir=./results --port=6006

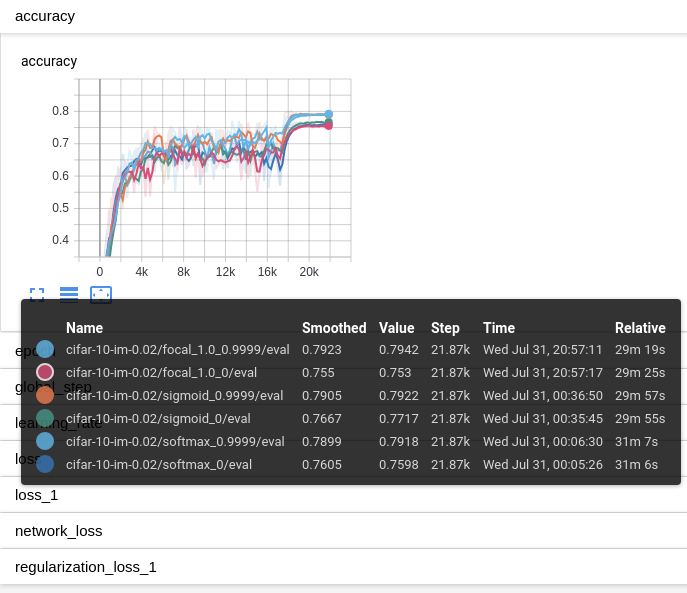

- The figure below are the results of running

./cifar_im_trainval.shand./cifar_im_trainval_cb.sh:
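For intuition about the IM_FACTOR hyperparameter used by the long-tailed scripts above, the sketch below computes per-class training-set sizes under an exponential decay, as described in the paper. The exact expression is an assumption about how src/generate_cifar_tfrecords_im.py subsamples the data, and the function name `long_tailed_counts` is hypothetical. The class at index i keeps roughly n_max * IM_FACTOR^(i / (C - 1)) images, so the smallest class has about IM_FACTOR times as many images as the largest.

```python
def long_tailed_counts(max_per_class, num_classes, im_factor):
    """Per-class sample counts that decay exponentially with class index.

    max_per_class : number of images in the largest class (class 0)
    num_classes   : number of classes C (at least 2)
    im_factor     : ratio of smallest to largest class size, in (0, 1];
                    the inverse of the paper's "Imbalance Factor"
    """
    if num_classes < 2:
        raise ValueError("need at least two classes")
    if not 0.0 < im_factor <= 1.0:
        raise ValueError("im_factor must be in (0, 1]")
    return [
        int(max_per_class * im_factor ** (i / (num_classes - 1.0)))
        for i in range(num_classes)
    ]


if __name__ == "__main__":
    # CIFAR-10 has 5000 training images per class; an imbalance factor
    # of 100 corresponds to im_factor = 0.01.
    print(long_tailed_counts(5000, 10, 0.01))
```

Setting im_factor to 1.0 leaves every class at its original size, which corresponds to the original balanced dataset.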

Training with TPU:

We train networks on iNaturalist and ImageNet datasets using Google's Cloud TPU. The code for this section is in tpu/. Our code is based on the official implementation of Training ResNet on Cloud TPU and forked from https://github.com/tensorflow/tpu.

Data Preparation:

- Download datasets (except images) from this link and unzip it under tpu/. The unzipped directory tpu/raw_data/ contains the training and validation splits. For raw images, please download from the following links and put them into the corresponding folders in tpu/raw_data/:

- Convert datasets into .tfrecords format and upload to Google Cloud Storage (GCS) using tpu/tools/datasets/dataset_to_gcs.py:

python dataset_to_gcs.py \
  --project=$PROJECT \
  --gcs_output_path=$GCS_DATA_DIR \
  --local_scratch_dir=$LOCAL_TFRECORD_DIR \
  --raw_data_dir=$LOCAL_RAWDATA_DIR

The following 3 .sh scripts in tpu/ can be used to train and evaluate models on iNaturalist and ImageNet using Cloud TPU. For more details on how to use Cloud TPU, please refer to Training ResNet on Cloud TPU.

Note that the image mean, standard deviation, and input size need to be updated for the dataset being used.

- On ImageNet (ILSVRC 2012):

./run_ILSVRC2012.sh

- On iNaturalist 2017:

./run_inat2017.sh

- On iNaturalist 2018:

./run_inat2018.sh

- The pre-trained models, including all logs viewable in TensorBoard, can be downloaded from the following links:

| Dataset | Network | Loss | Input Size | Download Link |
|---|---|---|---|---|
| ILSVRC 2012 | ResNet-50 | Class-Balanced Focal Loss | 224 | link |
| iNaturalist 2018 | ResNet-50 | Class-Balanced Focal Loss | 224 | link |

Citation

If you find our work helpful in your research, please cite it as:

@inproceedings{cui2019classbalancedloss,
  title={Class-Balanced Loss Based on Effective Number of Samples},
  author={Cui, Yin and Jia, Menglin and Lin, Tsung-Yi and Song, Yang and Belongie, Serge},
  booktitle={CVPR},
  year={2019}
}