zsyzzsoft / Co Mod Gan

Programming Languages

Projects that are alternatives of or similar to Co Mod Gan

Large Scale Image Completion via Co-Modulated Generative Adversarial Networks, ICLR 2021 (Spotlight)

Demo | Paper

[NEW!] Time to play with our interactive web demo!

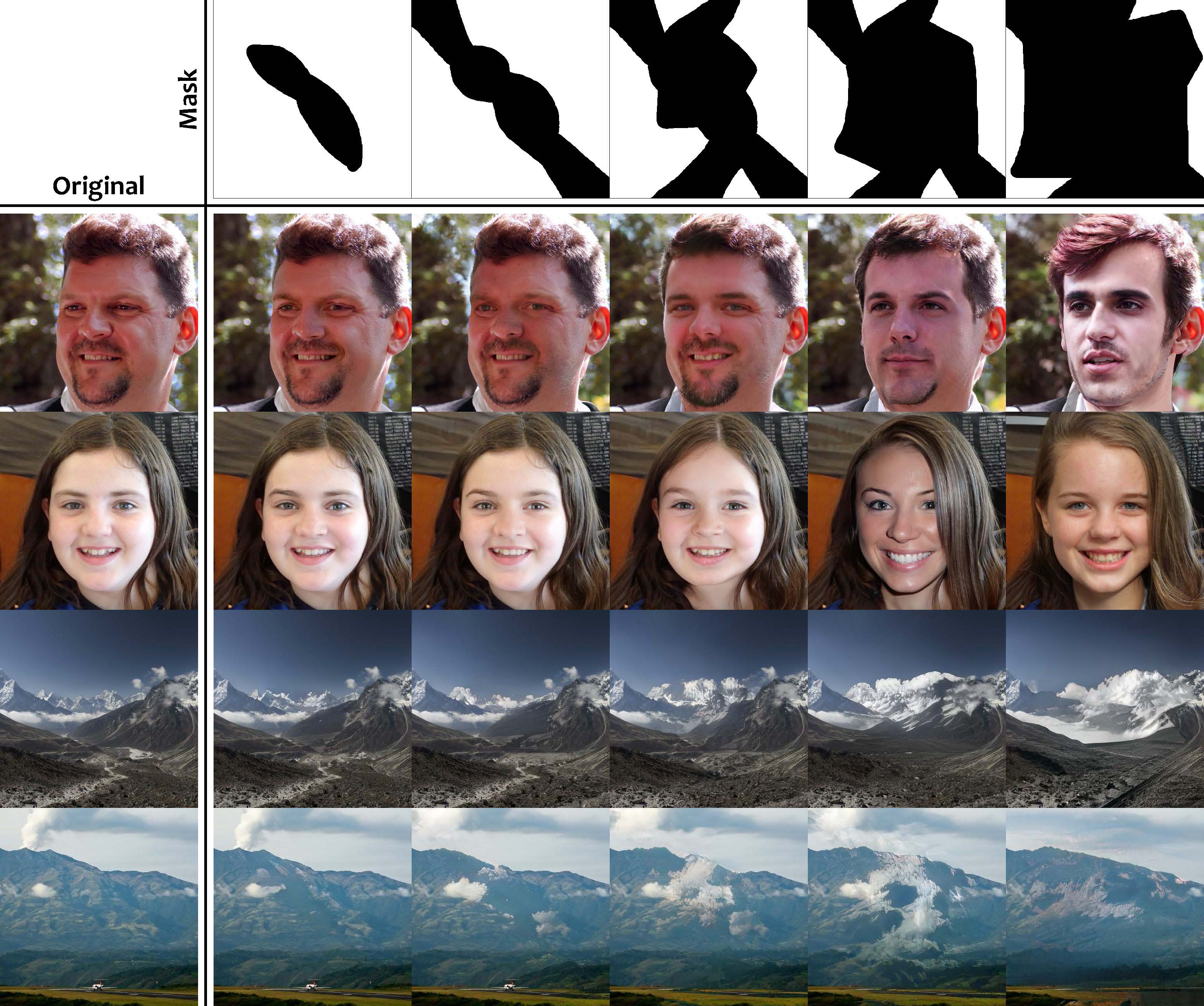

Numerous task-specific variants of conditional generative adversarial networks have been developed for image completion. Yet, a serious limitation remains that all existing algorithms tend to fail when handling large-scale missing regions. To overcome this challenge, we propose a generic new approach that bridges the gap between image-conditional and recent modulated unconditional generative architectures via co-modulation of both conditional and stochastic style representations. Also, due to the lack of good quantitative metrics for image completion, we propose the new Paired/Unpaired Inception Discriminative Score (P-IDS/U-IDS), which robustly measures the perceptual fidelity of inpainted images compared to real images via linear separability in a feature space. Experiments demonstrate superior performance in terms of both quality and diversity over state-of-the-art methods in free-form image completion and easy generalization to image-to-image translation.

Large Scale Image Completion via Co-Modulated Generative Adversarial Networks

Shengyu Zhao, Jonathan Cui, Yilun Sheng, Yue Dong, Xiao Liang, Eric I Chang, Yan Xu

Tsinghua University and Microsoft Research

arXiv | OpenReview

Overview

This repo is implemented upon and has the same dependencies as the official StyleGAN2 repo. We also provide a Dockerfile for Docker users. This repo currently supports:

- [x] Large scale image completion experiments on FFHQ and Places2

- [ ] Image-to-image translation experiments on edges to photos and COCO-Stuff

- [x] Evaluation code of Paired/Unpaired Inception Discriminative Score (P-IDS/U-IDS)

Datasets

- FFHQ dataset (in TFRecords format) can be downloaded following the StyleGAN2 repo.

- Places2 dataset can be downloaded in this website (Places365-Challenge 2016 high-resolution images, training set and validation set). The raw images should be converted into TFRecords using

dataset_tools/create_places2.py.

Training

The following script is for training on FFHQ. It will splits 10k images for validation. We recommend using 8 NVIDIA Tesla V100 GPUs for training. Training at 512x512 resolution takes about 1 week.

python run_training.py --data-dir=DATA_DIR --dataset=DATASET --metrics=ids10k --num-gpus=8

The following script is for training on Places2, which has a validation set of 36500 images:

python run_training.py --data-dir=DATA_DIR --dataset=DATASET --metrics=ids36k5 --total-kimg 50000 --num-gpus=8

Evaluation

The following script is for evaluation:

python run_metrics.py --data-dir=DATA_DIR --dataset=DATASET --network=CHECKPOINT_FILE(S) --metrics=METRIC(S) --num-gpus=1

Commonly used metrics are ids10k and ids36k5 (for FFHQ and Places2 respectively), which will compute P-IDS and U-IDS together with FID. By default, masks are generated randomly for evaluation, or you may append the metric name with -h0 ([0.0, 0.2]) to -h4 ([0.8, 1.0]) to specify the range of masked ratio.

Our pre-trained models are available on Google Drive. Below lists our provided pre-trained models:

| Model name & URL | Description |

|---|---|

| co-mod-gan-ffhq-9-025000.pkl | Large scale image completion on FFHQ (512x512) |

| co-mod-gan-ffhq-10-025000.pkl | Large scale image completion on FFHQ (1024x1024) |

| co-mod-gan-places2-050000.pkl | Large scale image completion on Places2 (512x512) |

| co-mod-gan-coco-stuff-025000.pkl | Image-to-image translation on COCO-Stuff (labels to photos) (512x512) |

| co-mod-gan-edges2shoes-025000.pkl | Image-to-image translation on edges2shoes (256x256) |

| co-mod-gan-edges2handbags-025000.pkl | Image-to-image translation on edges2handbags (256x256) |

Use the following script to run the interactive demo locally:

python run_demo.py -d DATA_DIR/DATASET -c CHECKPOINT_FILE(S)

Citation

If you find this code helpful, please cite our paper:

@inproceedings{zhao2021comodgan,

title={Large Scale Image Completion via Co-Modulated Generative Adversarial Networks},

author={Zhao, Shengyu and Cui, Jonathan and Sheng, Yilun and Dong, Yue and Liang, Xiao and Chang, Eric I and Xu, Yan},

booktitle={International Conference on Learning Representations (ICLR)},

year={2021}

}