xhujoy / Cyclegan Tensorflow

Tensorflow implementation for learning an image-to-image translation without input-output pairs. https://arxiv.org/pdf/1703.10593.pdf

Stars: ✭ 676

Projects that are alternatives of or similar to Cyclegan Tensorflow

cycleGAN-PyTorch

A clean and lucid implementation of cycleGAN using PyTorch

Stars: ✭ 107 (-84.17%)

Mutual labels: image-translation, cyclegan

Cyclegan

PyTorch implementation of CycleGAN

Stars: ✭ 38 (-94.38%)

Mutual labels: cyclegan, image-translation

Pytorch-Image-Translation-GANs

Pytorch implementations of most popular image-translation GANs, including Pixel2Pixel, CycleGAN and StarGAN.

Stars: ✭ 106 (-84.32%)

Mutual labels: image-translation, cyclegan

BicycleGAN-pytorch

Pytorch implementation of BicycleGAN with implementation details

Stars: ✭ 99 (-85.36%)

Mutual labels: image-translation, cyclegan

pytorch-CycleGAN

Pytorch implementation of CycleGAN.

Stars: ✭ 39 (-94.23%)

Mutual labels: image-translation, cyclegan

day2night

Image2Image Translation Research

Stars: ✭ 46 (-93.2%)

Mutual labels: image-translation, cyclegan

Attentiongan

AttentionGAN for Unpaired Image-to-Image Translation & Multi-Domain Image-to-Image Translation

Stars: ✭ 341 (-49.56%)

Mutual labels: cyclegan, image-translation

multitask-CycleGAN

Pytorch implementation of multitask CycleGAN with auxiliary classification loss

Stars: ✭ 88 (-86.98%)

Mutual labels: image-translation, cyclegan

ganslate

Simple and extensible GAN image-to-image translation framework. Supports natural and medical images.

Stars: ✭ 17 (-97.49%)

Mutual labels: image-translation, cyclegan

Cyclegan Tensorflow 2

CycleGAN Tensorflow 2

Stars: ✭ 330 (-51.18%)

Mutual labels: cyclegan, image-translation

traiNNer

traiNNer: Deep learning framework for image and video super-resolution, restoration and image-to-image translation, for training and testing.

Stars: ✭ 130 (-80.77%)

Mutual labels: cyclegan

Sean

SEAN: Image Synthesis with Semantic Region-Adaptive Normalization (CVPR 2020, Oral)

Stars: ✭ 387 (-42.75%)

Mutual labels: image-translation

Splice

Official Pytorch Implementation for "Splicing ViT Features for Semantic Appearance Transfer" presenting "Splice" (CVPR 2022)

Stars: ✭ 126 (-81.36%)

Mutual labels: image-translation

Image-Colorization-CycleGAN

Colorization of grayscale images using CycleGAN in TensorFlow.

Stars: ✭ 16 (-97.63%)

Mutual labels: cyclegan

automatic-manga-colorization

Use keras.js and cyclegan-keras to colorize manga automatically. All computation in browser. Demo is online:

Stars: ✭ 20 (-97.04%)

Mutual labels: cyclegan

Von

[NeurIPS 2018] Visual Object Networks: Image Generation with Disentangled 3D Representation.

Stars: ✭ 497 (-26.48%)

Mutual labels: cyclegan

Voice Converter Cyclegan

Voice Converter Using CycleGAN and Non-Parallel Data

Stars: ✭ 384 (-43.2%)

Mutual labels: cyclegan

Pix2depth

DEPRECATED: Depth Map Estimation from Monocular Images

Stars: ✭ 293 (-56.66%)

Mutual labels: cyclegan

CycleGAN

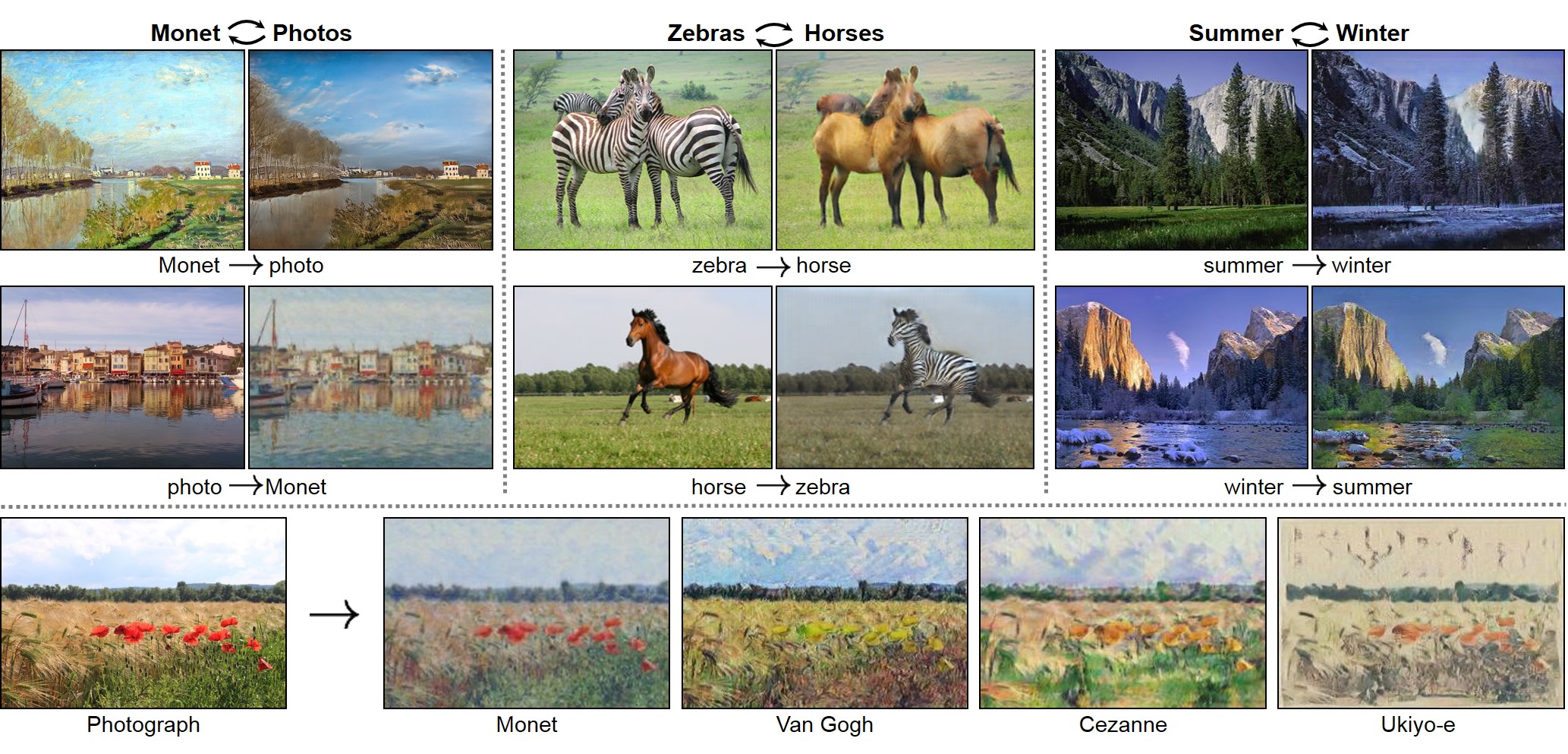

Tensorflow implementation for learning an image-to-image translation without input-output pairs. The method was proposed by Jun-Yan Zhu et al. in Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. For example, in the paper:

Update Results

The results of this implementation:

You can download the pretrained model from this url and extract the rar file to ./checkpoint/.

Prerequisites

- tensorflow r1.1

- numpy 1.11.0

- scipy 0.17.0

- pillow 3.3.0

Getting Started

Installation

- Install tensorflow from https://github.com/tensorflow/tensorflow

- Clone this repo:

git clone https://github.com/xhujoy/CycleGAN-tensorflow

cd CycleGAN-tensorflow

Train

- Download a dataset (e.g. zebra and horse images from ImageNet):

bash ./download_dataset.sh horse2zebra

- Train a model:

CUDA_VISIBLE_DEVICES=0 python main.py --dataset_dir=horse2zebra

- Use tensorboard to visualize the training details:

tensorboard --logdir=./logs
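Before starting a long training run, it can be worth confirming that the download produced the folders the training script will read. The sketch below assumes the layout used by the original CycleGAN datasets, with `trainA`, `trainB`, `testA` and `testB` subfolders under `./datasets/horse2zebra`. That path and the helper name `count_images` are assumptions for illustration, not something this README states.

```python
import os

# Assumed subfolder names (the layout of the original CycleGAN datasets).
EXPECTED_SPLITS = ("trainA", "trainB", "testA", "testB")
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png")


def count_images(dataset_root):
    """Return {split: image count}; a missing split folder maps to None."""
    counts = {}
    for split in EXPECTED_SPLITS:
        split_dir = os.path.join(dataset_root, split)
        if not os.path.isdir(split_dir):
            counts[split] = None
            continue
        # Count files by extension only; the files themselves are not opened.
        counts[split] = sum(
            1 for name in os.listdir(split_dir)
            if name.lower().endswith(IMAGE_EXTENSIONS)
        )
    return counts


if __name__ == "__main__":
    # Hypothetical location; adjust to wherever download_dataset.sh unpacked the data.
    root = os.path.join("datasets", "horse2zebra")
    for split, n in sorted(count_images(root).items()):
        print(split, "missing" if n is None else n)
```

A split reported as missing or empty usually points to an interrupted download or a different unpack location.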

Test

- Finally, test the model:

CUDA_VISIBLE_DEVICES=0 python main.py --dataset_dir=horse2zebra --phase=test --which_direction=AtoB

Training and Test Details

To train a model:

CUDA_VISIBLE_DEVICES=0 python main.py --dataset_dir=/path/to/data/

Models are saved to ./checkpoints/ (can be changed by passing --checkpoint_dir=your_dir).

To test the model (set --which_direction to either AtoB or BtoA):

CUDA_VISIBLE_DEVICES=0 python main.py --dataset_dir=/path/to/data/ --phase=test --which_direction=AtoB/BtoA
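The `AtoB/BtoA` in the test command stands for a choice between two values, not a literal argument. A minimal Python sketch of a wrapper that makes the choice explicit is shown below. It uses only flags that appear in this README; the helper names `build_command` and `run` are hypothetical and not part of the repository.

```python
import os
import subprocess
import sys

DIRECTIONS = ("AtoB", "BtoA")


def build_command(dataset_dir, test_direction=None, checkpoint_dir=None):
    """Return the argv list for main.py.

    With test_direction=None the command trains; otherwise it tests in the
    given direction.
    """
    cmd = [sys.executable, "main.py", "--dataset_dir=" + dataset_dir]
    if checkpoint_dir is not None:
        cmd.append("--checkpoint_dir=" + checkpoint_dir)
    if test_direction is not None:
        if test_direction not in DIRECTIONS:
            raise ValueError("direction must be one of %s" % (DIRECTIONS,))
        cmd += ["--phase=test", "--which_direction=" + test_direction]
    return cmd


def run(cmd, gpu="0"):
    """Run a command with CUDA_VISIBLE_DEVICES set, as in the shell examples."""
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=gpu)
    return subprocess.call(cmd, env=env)


if __name__ == "__main__":
    # Test a trained horse2zebra model in both directions, one after the other.
    for direction in DIRECTIONS:
        run(build_command("horse2zebra", test_direction=direction))
```

Keeping command construction separate from execution makes it easy to print and inspect the command before launching a run.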

Datasets

Download the datasets using the following script:

bash ./download_dataset.sh dataset_name

- facades: 400 images from the CMP Facades dataset.

- cityscapes: 2975 images from the Cityscapes training set.

- maps: 1096 training images scraped from Google Maps.

- horse2zebra: 939 horse images and 1177 zebra images downloaded from ImageNet using the keywords wild horse and zebra.

- apple2orange: 996 apple images and 1020 orange images downloaded from ImageNet using the keywords apple and navel orange.

- summer2winter_yosemite: 1273 summer Yosemite images and 854 winter Yosemite images downloaded using the Flickr API. See more details in the paper.

- monet2photo, vangogh2photo, ukiyoe2photo, cezanne2photo: The art images were downloaded from Wikiart. The real photos were downloaded from Flickr using a combination of the tags landscape and landscapephotography. The training set size of each class is Monet: 1074, Cezanne: 584, Van Gogh: 401, Ukiyo-e: 1433, Photographs: 6853.

- iphone2dslr_flower: both classes of images were downloaded from Flickr. The training set size of each class is iPhone: 1813, DSLR: 3316. See more details in the paper.
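The dataset names and image counts above can be kept in a small lookup that catches a mistyped name before the download script is called. This is a sketch only: the names and counts are copied from the list above, and the helpers `download_command` and `domain_ratio` are hypothetical, not part of the repository.

```python
# Dataset names accepted by download_dataset.sh, as listed above.
DATASETS = (
    "facades", "cityscapes", "maps", "horse2zebra", "apple2orange",
    "summer2winter_yosemite", "monet2photo", "vangogh2photo",
    "ukiyoe2photo", "cezanne2photo", "iphone2dslr_flower",
)

# Image counts per domain (first domain, second domain), copied from the list.
UNPAIRED_COUNTS = {
    "horse2zebra": (939, 1177),
    "apple2orange": (996, 1020),
    "summer2winter_yosemite": (1273, 854),
    "iphone2dslr_flower": (1813, 3316),
}


def download_command(name):
    """Return the argv list for the download script, rejecting unknown names."""
    if name not in DATASETS:
        raise ValueError("unknown dataset: %r" % (name,))
    return ["bash", "./download_dataset.sh", name]


def domain_ratio(name):
    """Return larger-domain size divided by smaller-domain size."""
    a, b = UNPAIRED_COUNTS[name]
    return max(a, b) / float(min(a, b))


if __name__ == "__main__":
    for name in sorted(UNPAIRED_COUNTS):
        a, b = UNPAIRED_COUNTS[name]
        print("%s: %d + %d images, ratio %.2f" % (name, a, b, domain_ratio(name)))
    print(" ".join(download_command("horse2zebra")))
```

The two domains of each dataset differ in size, which is consistent with training without input-output pairs: no image in one domain needs a counterpart in the other.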

Reference

- The torch implementation of CycleGAN, https://github.com/junyanz/CycleGAN

- The tensorflow implementation of pix2pix, https://github.com/yenchenlin/pix2pix-tensorflow
