indiejoseph / Doc Han Att

Hierarchical Attention Networks for Chinese Sentiment Classification

Stars: ✭ 206

Projects that are alternatives of or similar to Doc Han Att

Pytorch Seq2seq

Tutorials on implementing a few sequence-to-sequence (seq2seq) models with PyTorch and TorchText.

Stars: ✭ 3,418 (+1559.22%)

Mutual labels: jupyter-notebook, rnn, attention

Tensorflow2 Docs Zh

TF2.0 / TensorFlow 2.0 / TensorFlow2.0 official documentation, Chinese edition

Stars: ✭ 177 (-14.08%)

Mutual labels: document, jupyter-notebook, rnn

Chinese Chatbot

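A Chinese chatbot trained on 100,000 dialogue pairs with an attention mechanism; it generates a meaningful reply to most general questions. The trained model is uploaded and can be run directly; if it fails to run, I'll eat my keyboard on a livestream.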

Stars: ✭ 124 (-39.81%)

Mutual labels: jupyter-notebook, rnn, attention

Attentive Neural Processes

implementing "recurrent attentive neural processes" to forecast power usage (w. LSTM baseline, MCDropout)

Stars: ✭ 33 (-83.98%)

Mutual labels: jupyter-notebook, rnn, attention

Rnn For Joint Nlu

Pytorch implementation of "Attention-Based Recurrent Neural Network Models for Joint Intent Detection and Slot Filling" (https://arxiv.org/abs/1609.01454)

Stars: ✭ 176 (-14.56%)

Mutual labels: jupyter-notebook, rnn, attention

Machine Learning

My Attempt(s) In The World Of ML/DL....

Stars: ✭ 78 (-62.14%)

Mutual labels: jupyter-notebook, rnn, attention

Multihead Siamese Nets

Implementation of Siamese Neural Networks built upon multihead attention mechanism for text semantic similarity task.

Stars: ✭ 144 (-30.1%)

Mutual labels: jupyter-notebook, attention

Hey Jetson

Deep Learning based Automatic Speech Recognition with attention for the Nvidia Jetson.

Stars: ✭ 161 (-21.84%)

Mutual labels: jupyter-notebook, attention

Char Rnn Pytorch

A Char RNN implemented in PyTorch that generates classical Chinese poetry and Jay Chou lyrics

Stars: ✭ 114 (-44.66%)

Mutual labels: jupyter-notebook, rnn

Load forecasting

Load forecasting on Delhi area electric power load using ARIMA, RNN, LSTM and GRU models

Stars: ✭ 160 (-22.33%)

Mutual labels: jupyter-notebook, rnn

Attentionn

All about attention in neural networks. Soft attention, attention maps, local and global attention and multi-head attention.

Stars: ✭ 175 (-15.05%)

Mutual labels: jupyter-notebook, attention

Linear Attention Recurrent Neural Network

A recurrent attention module consisting of an LSTM cell which can query its own past cell states by the means of windowed multi-head attention. The formulas are derived from the BN-LSTM and the Transformer Network. The LARNN cell with attention can be easily used inside a loop on the cell state, just like any other RNN. (LARNN)

Stars: ✭ 119 (-42.23%)

Mutual labels: jupyter-notebook, rnn

Nlp Models Tensorflow

Gathers machine learning and Tensorflow deep learning models for NLP problems, 1.13 < Tensorflow < 2.0

Stars: ✭ 1,603 (+678.16%)

Mutual labels: jupyter-notebook, attention

Stylenet

A cute multi-layer LSTM that can perform like a human 🎶

Stars: ✭ 187 (-9.22%)

Mutual labels: jupyter-notebook, rnn

Graph attention pool

Attention over nodes in Graph Neural Networks using PyTorch (NeurIPS 2019)

Stars: ✭ 186 (-9.71%)

Mutual labels: jupyter-notebook, attention

Char Rnn Chinese

Multi-layer Recurrent Neural Networks (LSTM, GRU, RNN) for character-level language models in Torch. Based on the code of https://github.com/karpathy/char-rnn. Supports Chinese, among other things.

Stars: ✭ 192 (-6.8%)

Mutual labels: chinese, rnn

Deep Learning With Python

Deep learning codes and projects using Python

Stars: ✭ 195 (-5.34%)

Mutual labels: jupyter-notebook, rnn

Ml Ai Experiments

All my experiments with AI and ML

Stars: ✭ 107 (-48.06%)

Mutual labels: jupyter-notebook, rnn

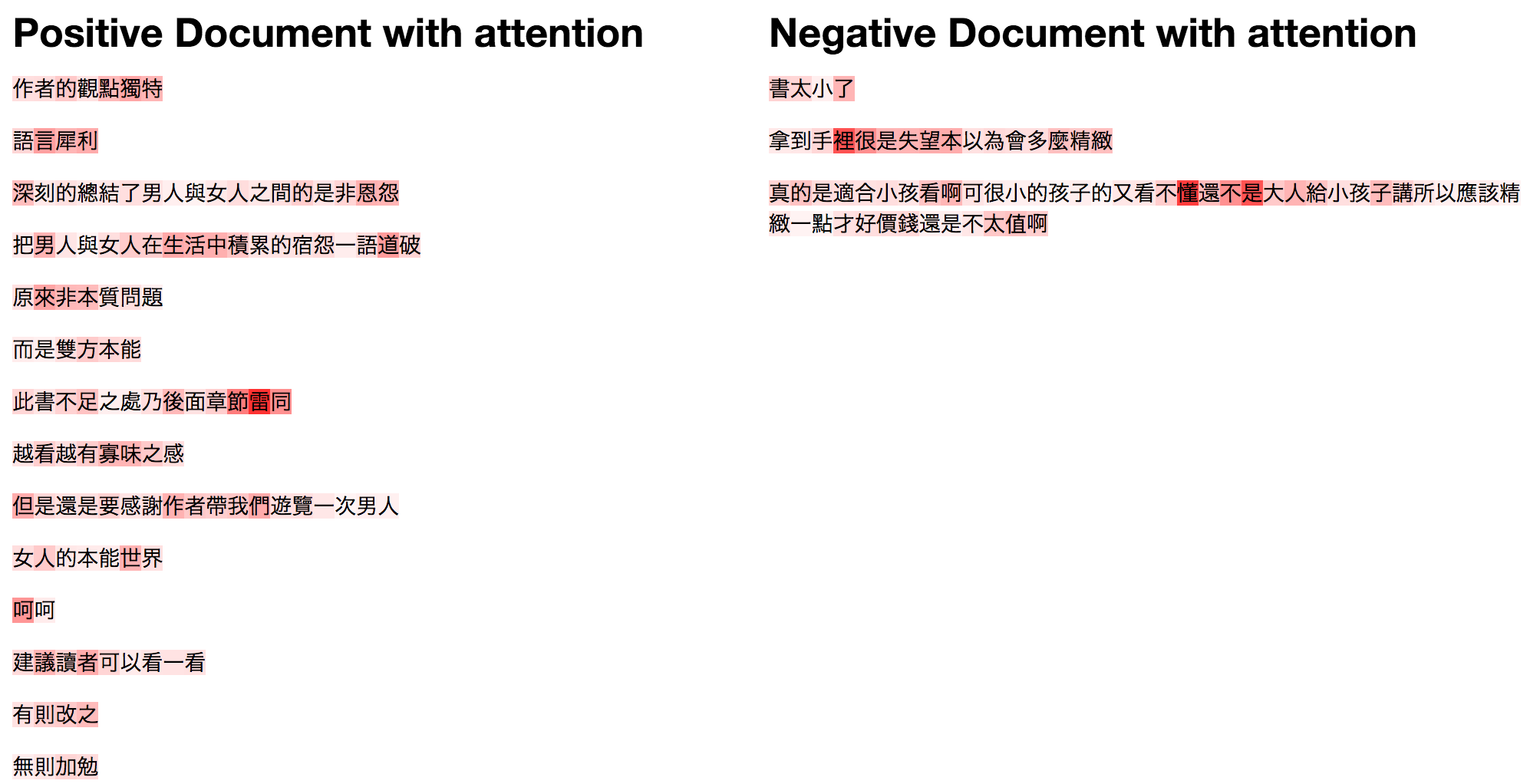

Hierarchical Attention Networks for Chinese Sentiment Classification

This is a HAN (Hierarchical Attention Network) implementation of sentiment classification. It uses pre-trained character-level embeddings and RAN (Recurrent Additive Network) cells instead of GRU.
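For readers unfamiliar with HAN, the attention pooling step it applies at each level can be sketched as follows. This is a minimal pure-Python illustration of the general formulation (project each hidden state, score it against a learned context vector, softmax the scores, take the weighted sum), not code from this repository. The names `attention_pool`, `W`, `b`, and `context` are hypothetical, and the toy values stand in for trained parameters.

```python
import math


def attention_pool(hidden_states, W, b, context):
    """Collapse a sequence of hidden vectors into one vector, HAN-style.

    hidden_states: list of T vectors, each of length d (e.g. RNN outputs)
    W, b:          projection matrix (k rows of length d) and bias (length k)
    context:       context vector of length k (learned in a real model)
    Returns (pooled_vector, attention_weights).
    """
    scores = []
    for h in hidden_states:
        # u_t = tanh(W h_t + b): hidden representation of each step
        u = [
            math.tanh(sum(w_ij * h_j for w_ij, h_j in zip(row, h)) + b_i)
            for row, b_i in zip(W, b)
        ]
        # score_t = u_t . context: similarity to the context vector
        scores.append(sum(u_i * c_i for u_i, c_i in zip(u, context)))

    # Numerically stable softmax over the time steps
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]

    # Weighted sum of the original hidden states
    dim = len(hidden_states[0])
    pooled = [
        sum(a * h[j] for a, h in zip(weights, hidden_states))
        for j in range(dim)
    ]
    return pooled, weights


# Toy example: three 2-dimensional hidden states, identity projection
states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W = [[1.0, 0.0], [0.0, 1.0]]
b = [0.0, 0.0]
pooled, weights = attention_pool(states, W, b, context=[1.0, 1.0])
print(weights)  # the third state receives the largest weight
```

In a hierarchical model the same pooling would run twice: once over the characters (or words) in a sentence to build a sentence vector, then over the sentence vectors to build a document vector. The per-step weights are what an attention heatmap visualizes.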

Dataset

Downloaded from the internet, but I forget where ;p. The original dataset is in Simplified Chinese; I used OpenCC to convert it to Traditional Chinese. After 100 epochs, validation accuracy reached 96.31%.

Requirements

TensorFlow r1.1+

Attention Heatmap
