kvmanohar22 / Img2imggan


img2ImgGAN

Implementation of the paper: Toward Multimodal Image-to-Image Translation

- Link to the paper: arXiv:1711.11586

- PyTorch implementation: Link

- Summary of the paper: Gist

Results


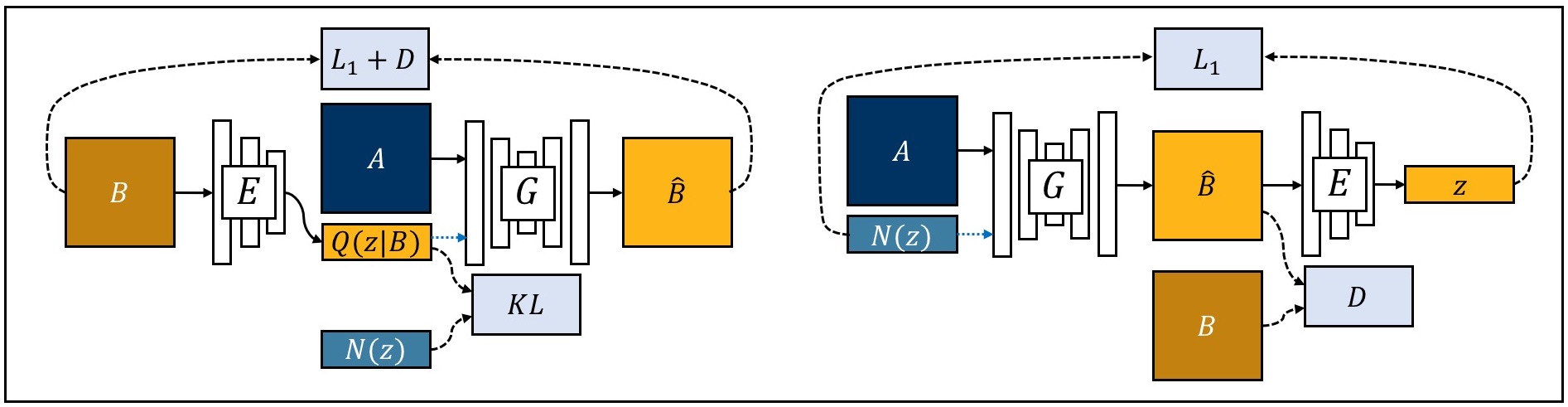

Model Architecture Visualization

- Network

Fig 1: Structure of BicycleGAN. (Image taken from the paper)


- TensorBoard visualization of the entire network

cVAE-GAN Network

Dependencies

- tensorflow (1.4.0)

- numpy (1.13.3)

- scikit-image (0.13.1)

- scipy (1.0.0)

To install the above dependencies, run:

$ sudo pip install -r requirements.txt

Structure

- img2imgGAN/
  - nnet
  - utils
  - data/
    - edges2handbags
    - edges2shoes
    - facades
    - maps

Setup

- Download the datasets from the following links.

- To generate NumPy files for the datasets, run:

$ python main.py --create <dataset_name>

This creates train.npy and val.npy in the corresponding dataset directory. These files are very large; as an alternative, the next step reads the images at run time during training.
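The internals of --create are not shown here. As a rough sketch, assuming each dataset image stores the A and B views side by side (the layout of the pix2pix releases of these datasets), the conversion could look like the following. split_pair, build_arrays, save_split and npy_size_bytes are hypothetical names, not functions from this repo, and the (N, 2, H, W, C) layout is an assumption.

```python
import numpy as np


def split_pair(image):
    """Split one side-by-side A|B image of shape (H, 2W, C) into its A and B halves."""
    width = image.shape[1] // 2
    return image[:, :width], image[:, width:]


def build_arrays(images):
    """Stack a list of paired images into domain-A and domain-B arrays of shape (N, H, W, C)."""
    halves = [split_pair(img) for img in images]
    domain_a = np.stack([a for a, _ in halves])
    domain_b = np.stack([b for _, b in halves])
    return domain_a, domain_b


def save_split(images, out_path):
    """Save one split (e.g. train or val) as a single .npy file of shape (N, 2, H, W, C).

    Index 0 on axis 1 holds domain A, index 1 holds domain B (hypothetical layout).
    """
    domain_a, domain_b = build_arrays(images)
    np.save(out_path, np.stack([domain_a, domain_b], axis=1))


def npy_size_bytes(num_pairs, height=256, width=256, channels=3, itemsize=1):
    """Raw payload size of the saved array in bytes (ignores the small .npy header)."""
    return num_pairs * 2 * height * width * channels * itemsize
```

In practice the images list would come from a loader such as skimage.io.imread applied to every file of a split. The size helper shows why the files get so large: npy_size_bytes(10_000) is 3,932,160,000 bytes (about 3.9 GB) for 10,000 pairs of 256×256 RGB uint8 images, and storing float32 instead would multiply that by four.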

- Alternatively, you can read the images on the fly during training instead of generating NumPy files. To do this, create files containing the paths to the images by running the following script from the root of this repo:

$ bash setup_dataset.sh
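The exact format written by setup_dataset.sh is not documented here. As a sketch of the idea, assuming one image path per line and hypothetical data/<dataset>/train and data/<dataset>/val image folders, the path lists could be produced and consumed like this (write_path_list and read_path_list are illustrative names, not part of this repo):

```python
import os

IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png")


def write_path_list(image_dir, list_file):
    """Write the sorted absolute paths of all images in image_dir to list_file, one per line.

    Returns the number of paths written.
    """
    paths = sorted(
        os.path.abspath(os.path.join(image_dir, name))
        for name in os.listdir(image_dir)
        if name.lower().endswith(IMAGE_EXTENSIONS)
    )
    with open(list_file, "w") as f:
        for path in paths:
            f.write(path + "\n")
    return len(paths)


def read_path_list(list_file):
    """Read the list back, skipping blank lines; a training loop would load images lazily from these."""
    with open(list_file) as f:
        return [line.strip() for line in f if line.strip()]


if __name__ == "__main__":
    # Hypothetical layout: data/<dataset>/{train,val}/ -> data/<dataset>/{train,val}.txt
    for split in ("train", "val"):
        image_dir = os.path.join("data", "facades", split)
        if os.path.isdir(image_dir):
            print(split, write_path_list(image_dir, image_dir + ".txt"))
```

Only the short text files are kept on disk; each batch then reads just the images it needs, trading some I/O per step for a much smaller storage footprint.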

Usage

- Generating the graph:

To visualize the connections between the graph nodes, generate the graph with the --archi flag. This is useful for checking that the connections are correct. The following command generates the graph for bicycleGAN:

$ python main.py --archi

To generate the model graph for cvae-gan:

$ python main.py --model cvae-gan --archi

Possible models are cvae-gan, clr-gan and bicycle (default).

To visualize the graph on TensorBoard, run the following command:

$ tensorboard --logdir=logs/summary/Run_1 --host=127.0.0.1

Replace Run_1 with the latest run directory name.
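Run directories are numbered, so sorting their names as plain strings would place Run_10 before Run_2. A small standard-library sketch for picking the newest one, assuming runs are always named Run_<N> directly under logs/summary (inferred from the example command above; latest_run_dir is a hypothetical helper, not part of this repo):

```python
import os
import re

RUN_PATTERN = re.compile(r"^Run_(\d+)$")


def latest_run_dir(summary_root="logs/summary"):
    """Return the path of the Run_<N> directory with the largest N, or None if there is none.

    N is compared as an integer, so Run_10 correctly beats Run_2.
    """
    if not os.path.isdir(summary_root):
        return None
    best_index, best_path = -1, None
    for name in os.listdir(summary_root):
        match = RUN_PATTERN.match(name)
        path = os.path.join(summary_root, name)
        if match and os.path.isdir(path) and int(match.group(1)) > best_index:
            best_index, best_path = int(match.group(1)), path
    return best_path


if __name__ == "__main__":
    run_dir = latest_run_dir()
    if run_dir is None:
        print("No Run_<N> directories found under logs/summary")
    else:
        # Print the TensorBoard command with the newest run filled in.
        print("tensorboard --logdir={} --host=127.0.0.1".format(run_dir))
```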

Complete list of options:

$ python main.py --help

- Training the network

To train a model (say, cvae-gan) on a dataset (say, facades) from scratch:

$ python main.py --train --model cvae-gan --dataset facades

By default, the command above trains the model to generate images from the distribution of domain B conditioned on images from the distribution of domain A. To switch the direction:

$ python main.py --train --model cvae-gan --dataset facades --direction b2a
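Conceptually, --direction only decides which half of each image pair acts as the condition and which as the target. A hypothetical helper (not taken from this repo) showing that swap:

```python
def select_direction(domain_a, domain_b, direction="a2b"):
    """Return (condition, target) for the requested translation direction.

    a2b: generate domain B images conditioned on domain A (the default).
    b2a: swap the roles, generating domain A images conditioned on domain B.
    """
    if direction == "a2b":
        return domain_a, domain_b
    if direction == "b2a":
        return domain_b, domain_a
    raise ValueError("direction must be 'a2b' or 'b2a', got {!r}".format(direction))
```

Everything downstream (generator input, encoder input, reconstruction target) then works on (condition, target) without caring which domain each came from.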

To resume training from a checkpoint:

$ python main.py --resume <path_to_checkpoint> --model cvae-gan
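For reference, the paper defines the three --model options through their losses. cvae-gan encodes the target image B into a Gaussian latent code, reconstructs B from (A, z) with an L1 loss and pulls the code toward N(0, I) with a KL term; clr-gan samples z ~ N(0, I) and asks the encoder to recover it from G(A, z) with an L1 loss; bicycle combines both, each alongside its adversarial loss. The NumPy sketch below computes only the non-adversarial terms on stand-in arrays. It is not this repo's TensorFlow code: the function names are hypothetical, and averaging (rather than summing) the L1 terms is a scaling choice of this sketch.

```python
import numpy as np


def sample_latent(mu, logvar, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, I) and sigma = exp(logvar / 2)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * logvar) * eps


def kl_to_standard_normal(mu, logvar):
    """KL(N(mu, diag(exp(logvar))) || N(0, I)), summed over latent dims and averaged over the batch."""
    kl = 0.5 * np.sum(mu ** 2 + np.exp(logvar) - 1.0 - logvar, axis=1)
    return float(np.mean(kl))


def l1(x, y):
    """Mean absolute error, standing in for ||B - G(A, z)||_1 and ||z - E(G(A, z))||_1."""
    return float(np.mean(np.abs(x - y)))


if __name__ == "__main__":
    rng = np.random.RandomState(0)
    # Hypothetical encoder output for a batch of 4 and an 8-dimensional latent code.
    mu, logvar = np.zeros((4, 8)), np.zeros((4, 8))
    z = sample_latent(mu, logvar, rng)          # cVAE-GAN: z ~ E(B)
    print(kl_to_standard_normal(mu, logvar))    # 0.0: the code is already N(0, I)
    z_recovered = z + 0.1                       # stand-in for E(G(A, z)) in cLR-GAN
    print(l1(z, z_recovered))
```

Sampling through sample_latent rather than drawing z directly keeps z a differentiable function of mu and logvar, which is what lets the reconstruction loss train the encoder in a real framework.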

- Testing the network

- Download the checkpoint file from here and place the checkpoint files in the ckpt directory.

To test one of the provided trained models, run the command below. By default, the model generates 5 different images by sampling 5 different noise vectors.

$ ./test.sh <dataset_name> <test_image_path>

To generate a custom number of output samples:

$ ./test.sh <dataset_name> <test_image_path> <# of samples>
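A minimal NumPy sketch of that sampling step, assuming each z ~ N(0, I) is injected only at the generator input by replicating it at every pixel and concatenating it to the image channels (one of the injection schemes described in the paper; the TODO list below suggests this repo does not yet inject noise into every layer). tile_latent, make_generator_inputs and the latent size of 8 are hypothetical, not taken from this repo.

```python
import numpy as np


def tile_latent(image, z):
    """Concatenate a latent vector to an image along the channel axis.

    image: (H, W, C) input from the conditioning domain.
    z:     (Z,) latent code, replicated at every spatial position.
    Returns an array of shape (H, W, C + Z).
    """
    height, width = image.shape[:2]
    z_map = np.broadcast_to(z, (height, width, z.shape[0]))
    return np.concatenate([image, z_map], axis=-1)


def make_generator_inputs(image, num_samples=5, latent_dim=8, seed=None):
    """Draw num_samples codes z ~ N(0, I) and build one generator input per code.

    Returns (inputs of shape (num_samples, H, W, C + latent_dim), codes of shape (num_samples, latent_dim)).
    """
    rng = np.random.RandomState(seed)
    zs = rng.standard_normal((num_samples, latent_dim))
    return np.stack([tile_latent(image, z) for z in zs]), zs
```

Feeding each row of the returned batch through the trained generator would give that many distinct translations of the same input; passing a fixed seed makes the set of outputs reproducible.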

Try it with some of the test samples in the imgs/test directory.

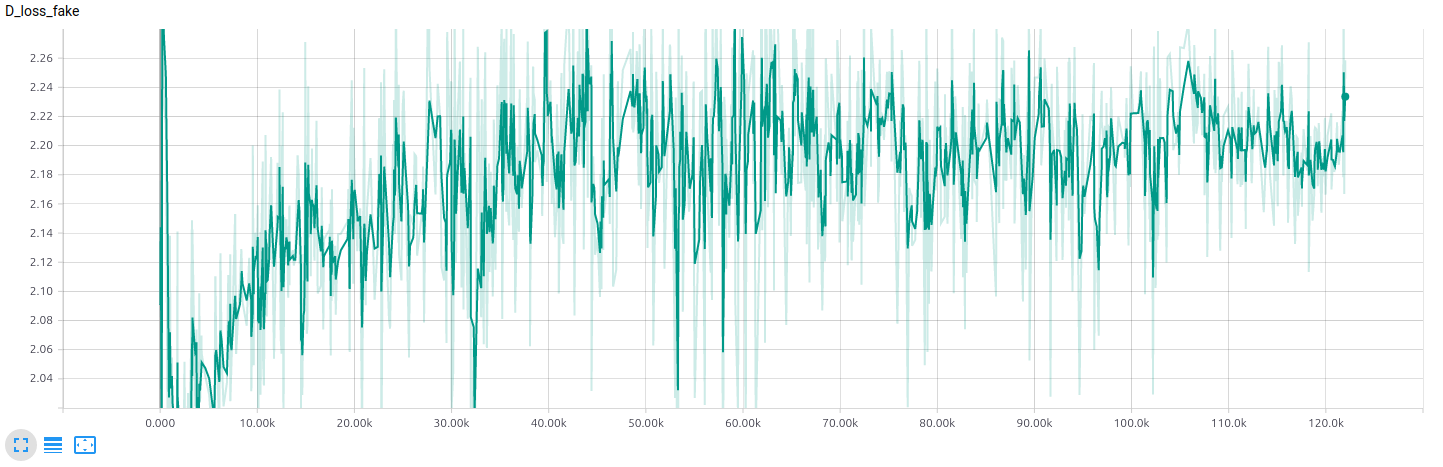

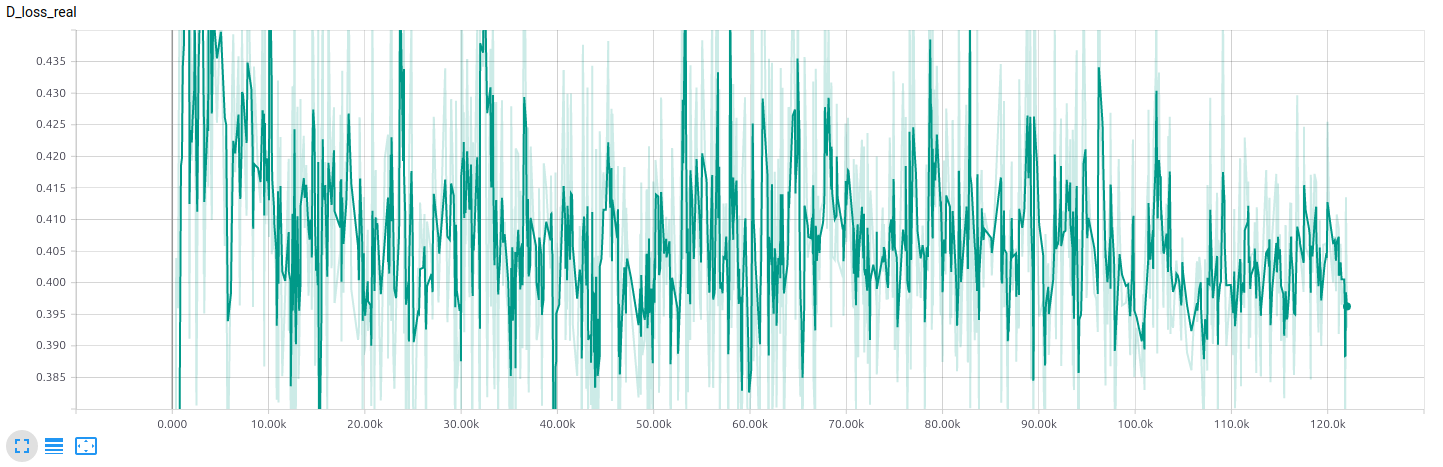

Visualizations

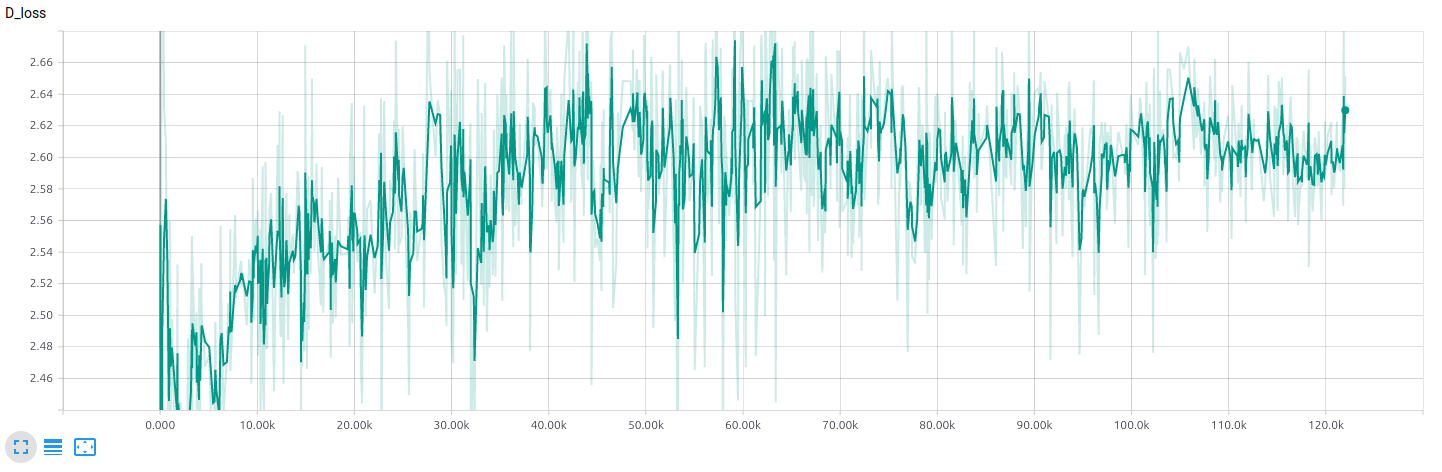

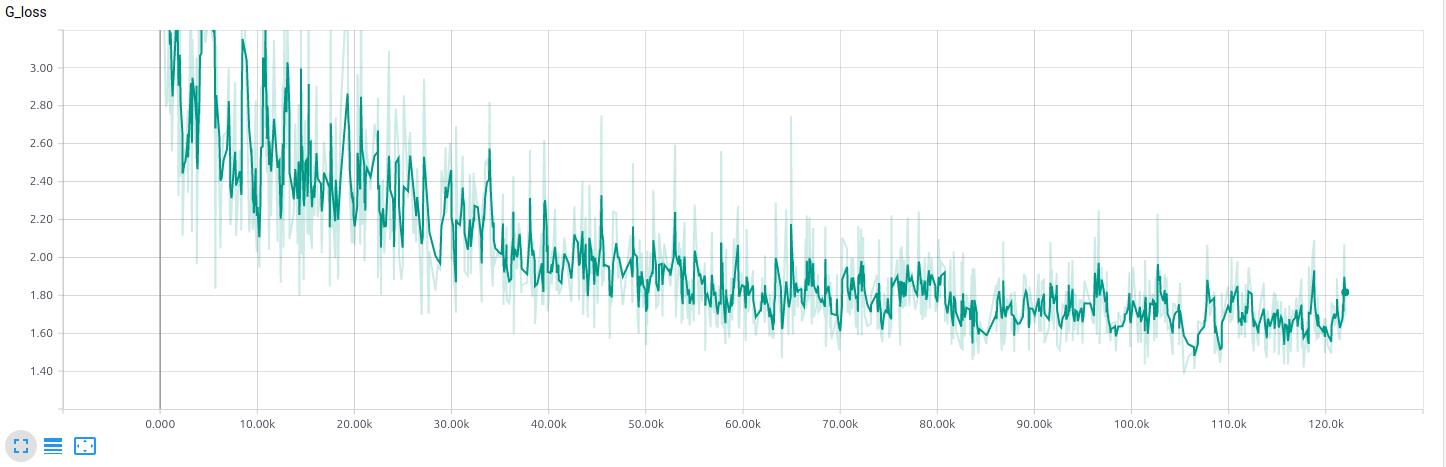

Loss of the discriminator and generator as a function of iterations on the edges2shoes dataset.

TODO

- [x] Residual Encoder

- [ ] Multiple discriminators for cVAE-GAN and cLR-GAN

- [ ] Inducing noise to all the layers of the generator

- [ ] Train the model on the rest of the datasets

License

Released under the MIT license