lucidrains / Mixture Of Experts

Licence: mit

A Pytorch implementation of Sparsely-Gated Mixture of Experts, for massively increasing the parameter count of language models

Stars: ✭ 68

Programming Languages

python


Projects that are alternatives of or similar to Mixture Of Experts

Se3 Transformer Pytorch

Implementation of SE3-Transformers for Equivariant Self-Attention, in Pytorch. This specific repository is geared towards integration with eventual Alphafold2 replication.

Stars: ✭ 73 (+7.35%)

Mutual labels: artificial-intelligence, transformer

Linear Attention Transformer

Transformer based on a variant of attention that is linear in complexity with respect to sequence length

Stars: ✭ 205 (+201.47%)

Mutual labels: artificial-intelligence, transformer

Conformer

Implementation of the convolutional module from the Conformer paper, for use in Transformers

Stars: ✭ 103 (+51.47%)

Mutual labels: artificial-intelligence, transformer

Self Attention Cv

Implementation of various self-attention mechanisms focused on computer vision. Ongoing repository.

Stars: ✭ 209 (+207.35%)

Mutual labels: artificial-intelligence, transformer

Routing Transformer

Fully featured implementation of Routing Transformer

Stars: ✭ 149 (+119.12%)

Mutual labels: artificial-intelligence, transformer

Omninet

Official Pytorch implementation of "OmniNet: A unified architecture for multi-modal multi-task learning" | Authors: Subhojeet Pramanik, Priyanka Agrawal, Aman Hussain

Stars: ✭ 448 (+558.82%)

Mutual labels: artificial-intelligence, transformer

Dragonfire

The open-source virtual assistant for Ubuntu-based Linux distributions

Stars: ✭ 1,120 (+1547.06%)

Mutual labels: artificial-intelligence

Ai Platform

An open-source platform for automating tasks using machine learning models

Stars: ✭ 61 (-10.29%)

Mutual labels: artificial-intelligence

Botsharp

The Open Source AI Chatbot Platform Builder in 100% C# Running in .NET Core with Machine Learning algorithms.

Stars: ✭ 1,103 (+1522.06%)

Mutual labels: artificial-intelligence

Numbers

Handwritten digits, a bit like the MNIST dataset.

Stars: ✭ 66 (-2.94%)

Mutual labels: artificial-intelligence

Ultimatemrz Sdk

Machine-readable zone/travel document (MRZ / MRTD) detector and recognizer using deep learning

Stars: ✭ 66 (-2.94%)

Mutual labels: artificial-intelligence

Openvoiceos

OpenVoiceOS is a minimalistic Linux OS bringing the open source voice assistant Mycroft A.I. to embedded, low-spec headless and/or small (touch)screen devices.

Stars: ✭ 64 (-5.88%)

Mutual labels: artificial-intelligence

The Third Eye

An AI-based application that identifies currency and gives audio feedback.

Stars: ✭ 63 (-7.35%)

Mutual labels: artificial-intelligence

Radiate

Radiate is a parallel genetic programming engine capable of evolving solutions to many problems as well as training learning algorithms.

Stars: ✭ 65 (-4.41%)

Mutual labels: artificial-intelligence

Viewpagertransformer

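ViewPager animations, including fade, rotation, scaling, 3D, cube and many other cool effects. They are implemented with a custom ViewpagerTransformer, and you can also define your own animations.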

Stars: ✭ 62 (-8.82%)

Mutual labels: transformer

Espnetv2 Coreml

Semantic segmentation on iPhone using ESPNetv2

Stars: ✭ 66 (-2.94%)

Mutual labels: artificial-intelligence

Data Science Best Resources

Carefully curated resource links for data science in one place

Stars: ✭ 1,104 (+1523.53%)

Mutual labels: artificial-intelligence

Pyribs

A bare-bones Python library for quality diversity optimization.

Stars: ✭ 66 (-2.94%)

Mutual labels: artificial-intelligence

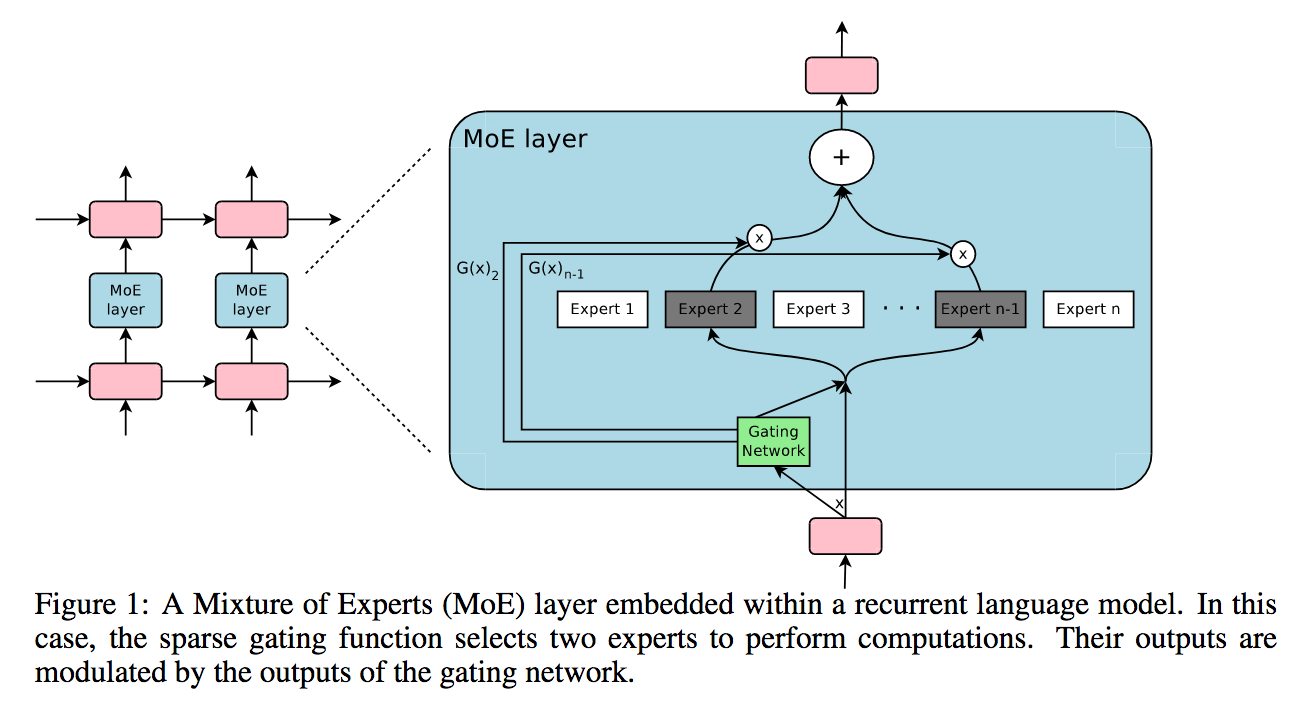

Sparsely Gated Mixture of Experts - Pytorch

A Pytorch implementation of Sparsely Gated Mixture of Experts, for massively increasing the capacity (parameter count) of a language model while keeping the computation constant.

It will mostly be a line-by-line transcription of the tensorflow implementation here, with a few enhancements.
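To make the "constant computation" claim concrete, here is a minimal, framework-free sketch of top-2 gating for a single token, in the spirit of the sparsely-gated layer: take a softmax over the gate logits, keep the two largest probabilities, and renormalize them. The function top2_gate and the plain-list representation are illustrative assumptions, not this package's API; the real module works on batched tensors and also applies capacity limits and second-expert policies.

```python
import math


def top2_gate(logits):
    """Route one token: return [(expert_index, weight), ...] for its top-2 experts.

    Illustrative sketch only: softmax over the gate logits, keep the two
    largest probabilities, and renormalize them so the two weights sum to 1.
    """
    if len(logits) < 2:
        raise ValueError("top-2 gating needs at least two experts")
    # numerically stable softmax
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # indices of the two largest gate probabilities
    first, second = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:2]
    denom = probs[first] + probs[second]
    return [(first, probs[first] / denom), (second, probs[second] / denom)]


# 16 experts exist, but only 2 of them do any work for this token
logits = [0.1] * 16
logits[3] = 2.0
logits[11] = 1.0
routes = top2_gate(logits)
print(routes)
```

However many experts are added, each token is still processed by at most two of them, which is why the parameter count can grow while per-token compute stays roughly flat.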

Install

$ pip install mixture_of_experts

Usage

import torch
from torch import nn
from mixture_of_experts import MoE

moe = MoE(
    dim = 512,
    num_experts = 16,               # increase the experts (# parameters) of your model without increasing computation
    hidden_dim = 512 * 4,           # size of hidden dimension in each expert, defaults to 4 * dimension
    activation = nn.LeakyReLU,      # use your preferred activation, will default to GELU
    second_policy_train = 'random', # in top_2 gating, policy for whether to use a second-place expert
    second_policy_eval = 'random',  # all (always) | none (never) | threshold (if gate value > the given threshold) | random (if gate value > threshold * random_uniform(0, 1))
    second_threshold_train = 0.2,
    second_threshold_eval = 0.2,
    capacity_factor_train = 1.25,   # experts have fixed capacity per batch. we need some extra capacity in case gating is not perfectly balanced.
    capacity_factor_eval = 2.,      # capacity_factor_* should be set to a value >= 1
    loss_coef = 1e-2                # multiplier on the auxiliary expert-balancing loss
)

inputs = torch.randn(4, 1024, 512)
out, aux_loss = moe(inputs) # (4, 1024, 512), (1,)
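The second_policy_*, capacity_factor_* and loss_coef options in the usage example can be illustrated without PyTorch. The sketch below is framework-free and hypothetical: keep_second_expert, expert_capacity and balance_loss are not functions of this package. The policy rules follow the comment on second_policy_eval. The capacity formula (tokens * capacity_factor / num_experts, as in GShard) and the simplified balancing loss (num_experts times the sum over experts of top-1 token fraction times mean gate probability, which equals 1.0 when routing is perfectly even) are assumptions; the package's exact normalization, rounding and minimum-capacity rules may differ.

```python
import random


def keep_second_expert(policy, gate_2, threshold, rng=random):
    """Decide whether a token also goes to its second-place expert (sketch).

    'all'       -> always
    'none'      -> never
    'threshold' -> only if gate_2 > threshold
    'random'    -> only if gate_2 > threshold * uniform(0, 1)
    """
    if policy == "all":
        return True
    if policy == "none":
        return False
    if policy == "threshold":
        return gate_2 > threshold
    if policy == "random":
        return gate_2 > threshold * rng.uniform(0.0, 1.0)
    raise ValueError(f"unknown policy: {policy!r}")


def expert_capacity(num_tokens, num_experts, capacity_factor):
    """Slots per expert; tokens routed beyond this are dropped (assumed formula)."""
    return int(num_tokens * capacity_factor / num_experts)


def balance_loss(gate_probs):
    """Simplified auxiliary load-balancing loss (assumed form).

    gate_probs: one list of per-expert softmax probabilities per token.
    density[e] = fraction of tokens whose top-1 expert is e
    proxy[e]   = mean gate probability assigned to e
    loss       = num_experts * sum_e density[e] * proxy[e]
    """
    num_tokens = len(gate_probs)
    num_experts = len(gate_probs[0])
    density = [0.0] * num_experts
    proxy = [0.0] * num_experts
    for probs in gate_probs:
        top1 = max(range(num_experts), key=lambda e: probs[e])
        density[top1] += 1.0 / num_tokens
        for e, p in enumerate(probs):
            proxy[e] += p / num_tokens
    return num_experts * sum(d * p for d, p in zip(density, proxy))


# a weak second choice (0.1) under the 'random' policy with threshold 0.2:
# kept with probability P(0.2 * u < 0.1) = P(u < 0.5) = 0.5
rng = random.Random(0)
trials = 10_000
kept = sum(keep_second_expert("random", 0.1, 0.2, rng) for _ in range(trials))
print(kept / trials)

# 1024 tokens per sequence and 16 experts, as in the usage example
print(expert_capacity(1024, 16, 1.25))  # train: 80 slots per expert
print(expert_capacity(1024, 16, 2.0))   # eval: 128 slots per expert

# perfectly balanced routing vs. every token collapsing onto expert 0
balanced = [[1.0 if e == t % 4 else 0.0 for e in range(4)] for t in range(8)]
collapsed = [[1.0, 0.0, 0.0, 0.0] for _ in range(8)]
loss_coef = 1e-2
print(loss_coef * balance_loss(balanced), loss_coef * balance_loss(collapsed))
```

A capacity factor above 1 leaves headroom for uneven routing, and the balancing loss grows as tokens pile onto fewer experts, which is what loss_coef scales.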

The above should suffice for a single machine. If you want a hierarchical mixture of experts (2 levels), as used in the GShard paper, follow the instructions below:

import torch
from mixture_of_experts import HeirarchicalMoE

moe = HeirarchicalMoE(
    dim = 512,
    num_experts = (4, 4),  # 4 gates on the first layer, then 4 experts on the second, equaling 16 experts
)

inputs = torch.randn(4, 1024, 512)
out, aux_loss = moe(inputs) # (4, 1024, 512), (1,)
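With num_experts = (4, 4), routing happens in two stages: an outer gate picks one of 4 groups, then that group's inner gate picks one of its 4 experts. One way to picture the resulting 16 experts is a row-major flat index, outer * inner_count + inner. The sketch below is only a bookkeeping illustration with hypothetical helper names; it is not a description of how HeirarchicalMoE stores its weights internally.

```python
def flat_expert_index(outer, inner, num_experts=(4, 4)):
    """Map a (group, expert-within-group) pair to one of prod(num_experts) experts."""
    num_outer, num_inner = num_experts
    if not (0 <= outer < num_outer and 0 <= inner < num_inner):
        raise IndexError("expert coordinates out of range")
    return outer * num_inner + inner


def unflatten_expert_index(index, num_experts=(4, 4)):
    """Inverse of flat_expert_index: returns (outer, inner)."""
    return divmod(index, num_experts[1])


# every (outer, inner) pair maps to a distinct expert id in 0..15
all_indices = [flat_expert_index(o, i) for o in range(4) for i in range(4)]
print(len(all_indices), all_indices[-1])
```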

1 billion parameters

import torch
from mixture_of_experts import HeirarchicalMoE

moe = HeirarchicalMoE(
    dim = 512,
    num_experts = (22, 22)
).cuda()

inputs = torch.randn(1, 1024, 512).cuda()
out, aux_loss = moe(inputs)

total_params = sum(p.numel() for p in moe.parameters())
print(f'number of parameters - {total_params}')
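Where does the 1 billion come from? Assuming each expert is a bias-free two-layer feed-forward block (dim -> hidden_dim -> dim) with the default hidden_dim = 4 * dim, the expert weights alone can be counted as below. The helper expert_param_count is hypothetical and ignores the comparatively small gating parameters, so if the assumptions hold, the number printed by the snippet above should come out close to, and slightly above, this figure.

```python
def expert_param_count(dim, num_experts, hidden_mult=4):
    """Weights in num_experts bias-free dim -> hidden -> dim MLPs (assumed expert layout)."""
    hidden = dim * hidden_mult
    per_expert = dim * hidden + hidden * dim
    return num_experts * per_expert


num_outer, num_inner = 22, 22
total = expert_param_count(512, num_outer * num_inner)
print(f"{num_outer * num_inner} experts, {total:,} expert parameters")
# 484 experts, 1,015,021,568 expert parameters
```

For comparison, the 16-expert MoE from the usage example holds about 33.5 million expert weights under the same assumption, while each token still only visits a small, fixed number of experts.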

If you want a more sophisticated network for the experts, you can define your own and pass it into the MoE class as the experts argument.

import torch
from torch import nn
from mixture_of_experts import MoE

# a 3-layer MLP as the experts
class Experts(nn.Module):
    def __init__(self, dim, num_experts = 16):
        super().__init__()
        # one weight matrix per expert, stacked along the first (expert) dimension
        self.w1 = nn.Parameter(torch.randn(num_experts, dim, dim * 4))
        self.w2 = nn.Parameter(torch.randn(num_experts, dim * 4, dim * 4))
        self.w3 = nn.Parameter(torch.randn(num_experts, dim * 4, dim))
        self.act = nn.LeakyReLU(inplace = True)

    def forward(self, x):
        # x: (num_experts, tokens per expert, dim); each expert multiplies only its own slice
        hidden1 = self.act(torch.einsum('end,edh->enh', x, self.w1))
        hidden2 = self.act(torch.einsum('end,edh->enh', hidden1, self.w2))
        out = torch.einsum('end,edh->enh', hidden2, self.w3)
        return out

experts = Experts(512, num_experts = 16)

moe = MoE(
    dim = 512,
    num_experts = 16,
    experts = experts
)

inputs = torch.randn(4, 1024, 512)
out, aux_loss = moe(inputs) # (4, 1024, 512), (1,)
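Swapping in deeper experts changes the parameter budget in a predictable way. The sketch below counts the bias-free weights of the 3-layer Experts above (dim -> 4*dim -> 4*dim -> dim) and, for comparison, of a two-layer dim -> 4*dim -> dim expert, which is an assumption about the default layout. mlp_expert_params is a hypothetical helper, not part of the package.

```python
def mlp_expert_params(layer_sizes, num_experts):
    """Bias-free weight count for num_experts copies of an MLP with the given layer sizes."""
    per_expert = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    return num_experts * per_expert


dim = 512
three_layer = mlp_expert_params([dim, dim * 4, dim * 4, dim], num_experts=16)
two_layer = mlp_expert_params([dim, dim * 4, dim], num_experts=16)
print(f"{three_layer:,} vs {two_layer:,}")  # 100,663,296 vs 33,554,432
```

The middle 4*dim x 4*dim matrix dominates, so this custom expert carries three times the weights of the two-layer variant, and per-token compute in each selected expert grows by the same factor.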

Citation

@misc{shazeer2017outrageously,
    title   = {Outrageously Large Neural Networks: The Sparsely-Gated Mixture-of-Experts Layer},
    author  = {Noam Shazeer and Azalia Mirhoseini and Krzysztof Maziarz and Andy Davis and Quoc Le and Geoffrey Hinton and Jeff Dean},
    year    = {2017},
    eprint  = {1701.06538},
    archivePrefix = {arXiv},
    primaryClass = {cs.LG}
}

@misc{lepikhin2020gshard,
    title   = {GShard: Scaling Giant Models with Conditional Computation and Automatic Sharding},
    author  = {Dmitry Lepikhin and HyoukJoong Lee and Yuanzhong Xu and Dehao Chen and Orhan Firat and Yanping Huang and Maxim Krikun and Noam Shazeer and Zhifeng Chen},
    year    = {2020},
    eprint  = {2006.16668},
    archivePrefix = {arXiv},
    primaryClass = {cs.CL}
}
