atulkum / Pointer_summarizer

Licence: apache-2.0

PyTorch implementation of "Get To The Point: Summarization with Pointer-Generator Networks"

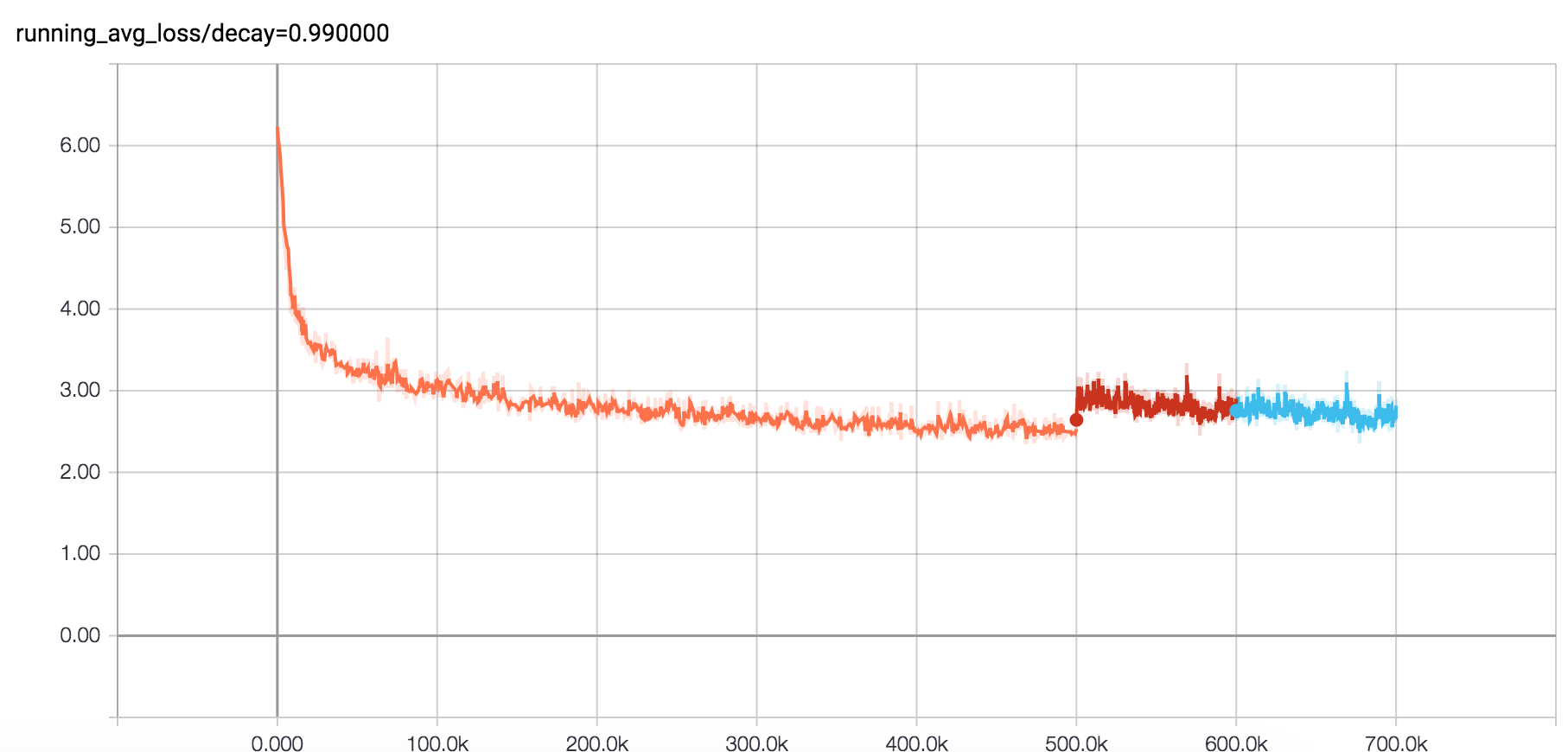

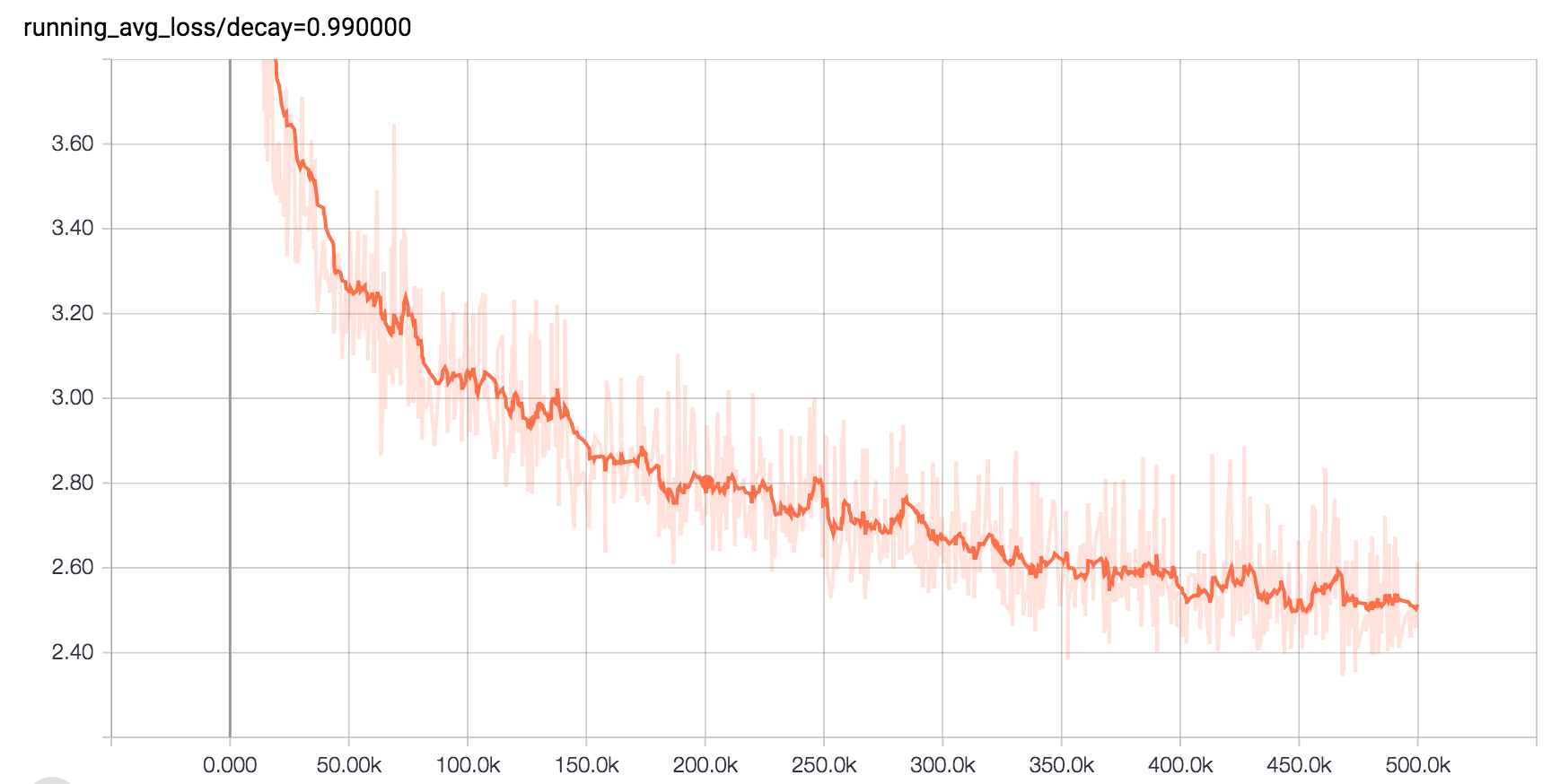

PyTorch implementation of "Get To The Point: Summarization with Pointer-Generator Networks"

- Train with pointer generation and coverage loss enabled

- Training with pointer generation enabled

- How to run training

- Papers using this code

Train with pointer generation and coverage loss enabled

After training for 100k iterations with coverage loss enabled (batch size 8)

ROUGE-1:

rouge_1_f_score: 0.3907 with confidence interval (0.3885, 0.3928)

rouge_1_recall: 0.4434 with confidence interval (0.4410, 0.4460)

rouge_1_precision: 0.3698 with confidence interval (0.3672, 0.3721)

ROUGE-2:

rouge_2_f_score: 0.1697 with confidence interval (0.1674, 0.1720)

rouge_2_recall: 0.1920 with confidence interval (0.1894, 0.1945)

rouge_2_precision: 0.1614 with confidence interval (0.1590, 0.1636)

ROUGE-L:

rouge_l_f_score: 0.3587 with confidence interval (0.3565, 0.3608)

rouge_l_recall: 0.4067 with confidence interval (0.4042, 0.4092)

rouge_l_precision: 0.3397 with confidence interval (0.3371, 0.3420)
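
For readers unfamiliar with the coverage loss named in this section, the following is a minimal sketch of the per-step definition from the paper: the loss at decoder step t is the sum over source positions of min(a_i^t, c_i^t), where the coverage vector c^t accumulates the attention distributions of all previous steps. It uses plain Python lists rather than tensors, and the function name `coverage_loss` is illustrative; it is not taken from this repository's code.

```python
def coverage_loss(attention_steps):
    """Return the coverage loss for each decoder step.

    attention_steps[t][i] is the attention weight placed on source
    token i at decoder step t (each row is assumed to sum to 1).
    """
    if not attention_steps:
        return []
    # Coverage starts at zero: nothing has been attended to yet.
    coverage = [0.0] * len(attention_steps[0])
    losses = []
    for attn in attention_steps:
        # Penalise overlap between the current attention and what
        # has already been covered by earlier steps.
        losses.append(sum(min(a, c) for a, c in zip(attn, coverage)))
        # Accumulate the current attention into the coverage vector.
        coverage = [c + a for a, c in zip(attn, coverage)]
    return losses


# Attending to the same source token twice is penalised at the second step.
steps = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
print(coverage_loss(steps))  # [0.0, 1.0, 0.0]
```

The loss is zero whenever the decoder attends only to source positions it has not attended to before, which is why it discourages repetition.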

Training with pointer generation enabled

After training for 500k iterations (batch size 8)

ROUGE-1:

rouge_1_f_score: 0.3500 with confidence interval (0.3477, 0.3523)

rouge_1_recall: 0.3718 with confidence interval (0.3693, 0.3745)

rouge_1_precision: 0.3529 with confidence interval (0.3501, 0.3555)

ROUGE-2:

rouge_2_f_score: 0.1486 with confidence interval (0.1465, 0.1508)

rouge_2_recall: 0.1573 with confidence interval (0.1551, 0.1597)

rouge_2_precision: 0.1506 with confidence interval (0.1483, 0.1529)

ROUGE-L:

rouge_l_f_score: 0.3202 with confidence interval (0.3179, 0.3225)

rouge_l_recall: 0.3399 with confidence interval (0.3374, 0.3426)

rouge_l_precision: 0.3231 with confidence interval (0.3205, 0.3256)
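
The score lines in the two result sections share one fixed format, so they can be compared programmatically. The sketch below (Python 3, standard library only) parses lines of that form and prints the F-score difference between the two runs reported here. The helper name `parse_rouge_lines` is an assumption made for illustration and is not part of this repository or of pyrouge. Note that the two runs also differ in iteration count (100k versus 500k), so the difference is not attributable to coverage alone.

```python
import re

# Matches e.g. "rouge_1_f_score: 0.3907 with confidence interval (0.3885, 0.3928)"
LINE_RE = re.compile(
    r"^(?P<name>rouge_\w+):\s+(?P<value>\d+\.\d+)\s+with confidence interval\s+"
    r"\((?P<low>\d+\.\d+),\s*(?P<high>\d+\.\d+)\)$"
)


def parse_rouge_lines(text):
    """Map each metric name to a (value, ci_low, ci_high) tuple."""
    scores = {}
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            scores[match["name"]] = (
                float(match["value"]),
                float(match["low"]),
                float(match["high"]),
            )
    return scores


with_coverage = parse_rouge_lines("""
rouge_1_f_score: 0.3907 with confidence interval (0.3885, 0.3928)
rouge_2_f_score: 0.1697 with confidence interval (0.1674, 0.1720)
rouge_l_f_score: 0.3587 with confidence interval (0.3565, 0.3608)
""")

pointer_only = parse_rouge_lines("""
rouge_1_f_score: 0.3500 with confidence interval (0.3477, 0.3523)
rouge_2_f_score: 0.1486 with confidence interval (0.1465, 0.1508)
rouge_l_f_score: 0.3202 with confidence interval (0.3179, 0.3225)
""")

for name in with_coverage:
    gain = with_coverage[name][0] - pointer_only[name][0]
    print(f"{name}: {gain:+.4f}")
# rouge_1_f_score: +0.0407
# rouge_2_f_score: +0.0211
# rouge_l_f_score: +0.0385
```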

How to run training:

- Follow the data generation instructions at https://github.com/abisee/cnn-dailymail

- Run start_train.sh; you might need to change some paths and parameters in data_util/config.py

- For training run start_train.sh, for decoding run start_decode.sh, and for evaluating run run_eval.sh

Note:

- In decode mode, the beam search batch should contain only one example, replicated to the batch size: https://github.com/atulkum/pointer_summarizer/blob/master/training_ptr_gen/decode.py#L109 and https://github.com/atulkum/pointer_summarizer/blob/master/data_util/batcher.py#L226

- It is tested on PyTorch 0.4 with Python 2.7

- You need to set up pyrouge to get the ROUGE scores
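
The first note can be illustrated with a minimal sketch. It assumes only what the note states: in decode mode a batch is filled with copies of a single example, so that each row of the batch can carry one beam search hypothesis for the same article. The `Example` class and the `make_decode_batch` function are hypothetical names chosen for illustration (written for Python 3); the repository's actual logic lives at the two linked lines.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Example:
    """Hypothetical stand-in for one encoded article."""
    article_ids: Tuple[int, ...]


def make_decode_batch(example: Example, batch_size: int) -> List[Example]:
    """Build a decode-mode batch: one example repeated batch_size times."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    # Every row refers to the same article; beam search then tracks a
    # different partial summary in each row.
    return [example for _ in range(batch_size)]


batch = make_decode_batch(Example(article_ids=(4, 8, 15)), batch_size=4)
print(len(batch))                               # 4
print(all(ex == batch[0] for ex in batch))      # True
```

A batch that mixed several different articles would break this assumption, since hypotheses in different rows would no longer be comparable.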

Papers using this code:

- Automatic Program Synthesis of Long Programs with a Learned Garbage Collector NeurIPS 2018 https://github.com/amitz25/PCCoder

- Automatic Fact-guided Sentence Modification AAAI 2020 https://github.com/darsh10/split_encoder_pointer_summarizer

- Resurrecting Submodularity in Neural Abstractive Summarization

- StructSum: Incorporating Latent and Explicit Sentence Dependencies for Single Document Summarization

- Concept Pointer Network for Abstractive Summarization EMNLP 2019 https://github.com/wprojectsn/codes

- VAE-PGN based Abstractive Model in Multi-stage Architecture for Text Summarization INLG 2019

- Clickbait? Sensational Headline Generation with Auto-tuned Reinforcement Learning EMNLP 2019 https://github.com/HLTCHKUST/sensational_headline

- Abstractive Spoken Document Summarization using Hierarchical Model with Multi-stage Attention Diversity Optimization INTERSPEECH 2020
