Scribe: Realistic Handwriting in Tensorflow

See blog post

Samples

"A project by Sam Greydanus"

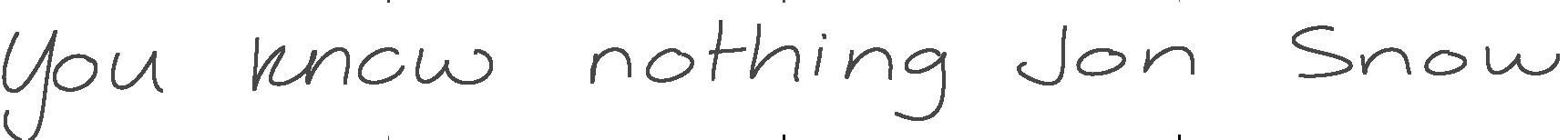

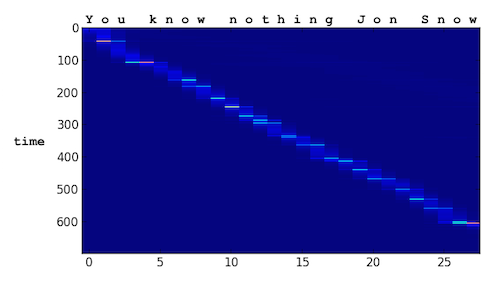

"You know nothing Jon Snow" (print)

"You know nothing Jon Snow" (print)

"You know nothing Jon Snow" (cursive)

"You know nothing Jon Snow" (cursive)

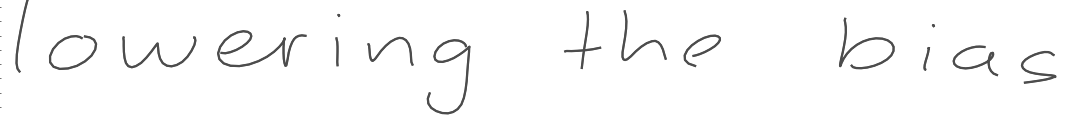

"lowering the bias"

"makes the writing messier"

"makes the writing messier"

"but more random"

"but more random"

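The sample captions above describe what the sampling bias does. As a rough illustration, here is a minimal NumPy sketch of the biased-sampling rule from the Graves paper, assuming the bias is applied to the mixture weights and standard deviations the way the paper describes. The names `apply_bias`, `pi_hat`, `sigma_hat`, and `bias` are made up for this example and are not taken from the repo.

```python
import numpy as np

def apply_bias(pi_hat, sigma_hat, bias=0.0):
    """Turn raw mixture outputs into weights and standard deviations.

    Assumes the biased-sampling rule from the Graves paper. All names
    here are illustrative and do not come from the Scribe code.
    """
    pi_hat = np.asarray(pi_hat, dtype=float)
    sigma_hat = np.asarray(sigma_hat, dtype=float)
    # Scale the logits by (1 + bias): a larger bias sharpens the weights.
    logits = pi_hat * (1.0 + bias)
    logits -= logits.max()  # subtract the max for numerical stability
    pi = np.exp(logits) / np.exp(logits).sum()
    # Subtract the bias before exponentiating: a larger bias shrinks sigma.
    sigma = np.exp(sigma_hat - bias)
    return pi, sigma

# Hypothetical raw outputs for a three-component mixture.
pi_hat = np.array([1.0, 0.5, -0.5])
sigma_hat = np.array([0.0, -1.0, 0.5])
for b in (0.0, 1.0, 2.0):
    pi, sigma = apply_bias(pi_hat, sigma_hat, bias=b)
    print("bias=%.1f  max weight=%.3f  mean sigma=%.3f" % (b, pi.max(), sigma.mean()))
```

With a bias of zero the sampler draws from the unmodified distribution, so the strokes vary the most. Raising the bias concentrates the weights on the likeliest component and narrows each Gaussian, which gives neater but less varied writing.
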
Jupyter Notebooks

For an easy intro to the code (along with equations and explanations) check out these Jupyter notebooks:

Getting started

- install dependencies (see below).

- download the repo

- navigate to the repo in bash

- download and unzip folder containing pretrained models: https://goo.gl/qbH2uw

- place the unzipped folder in this directory

Now you have two options:

- Run the sampler in bash:

mkdir -p ./logs/figures && python run.py --sample --tsteps 700

- Open the sample.ipynb Jupyter notebook and run it cell by cell (it includes equations and text that explain how the model works)

About

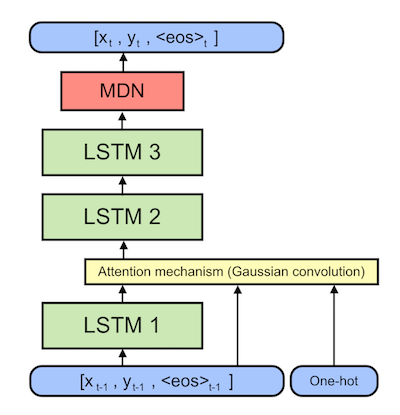

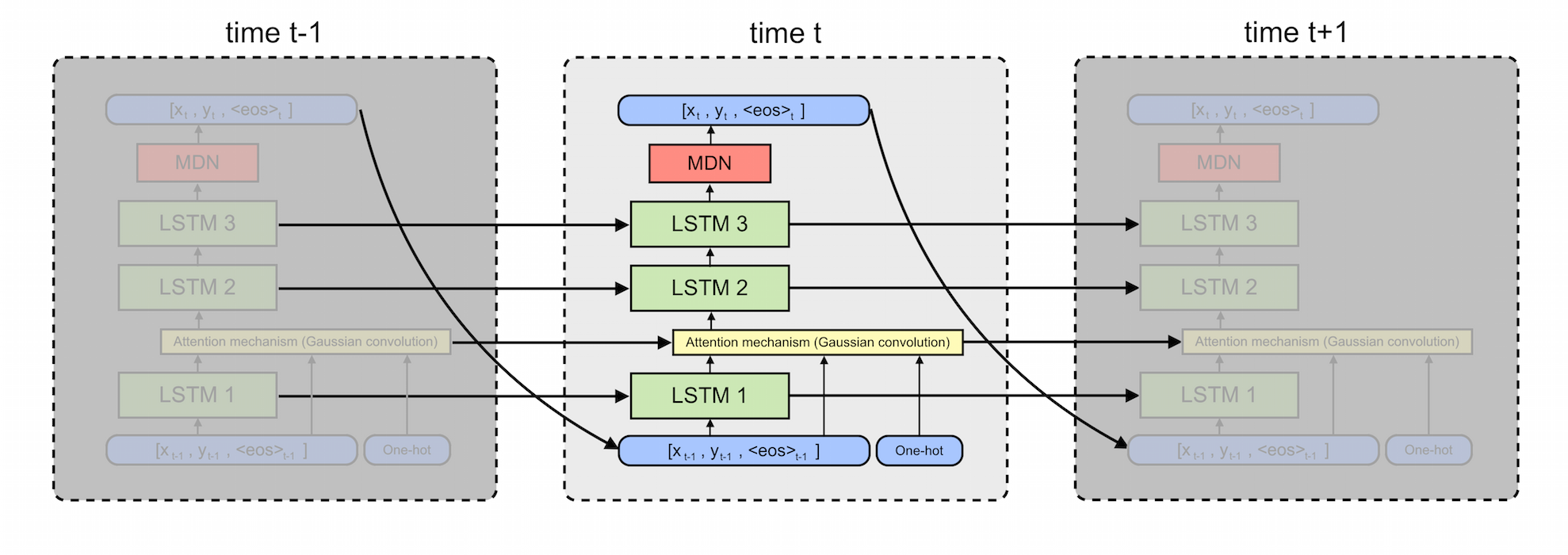

This model is trained on the IAM handwriting dataset and was inspired by the model described in the famous 2014 Alex Graves paper. It consists of a three-layer recurrent neural network (LSTM cells) with a Gaussian Mixture Density Network (MDN) cap on top. I have also implemented the attention mechanism from the paper, which allows the network to 'focus' on one character at a time in a sequence as it draws them.
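To make the MDN cap more concrete, here is a minimal NumPy sketch of how a raw output vector could be turned into mixture parameters and sampled to get one pen offset. It assumes the output layout from the Graves paper (one end-of-stroke value plus six values per mixture component). The function names, the dictionary keys, and the sigmoid sign convention are assumptions for this example, not the repo's actual code.

```python
import numpy as np

def split_mdn_outputs(y, n_mix):
    """Map a raw vector of length 1 + 6 * n_mix to mixture parameters."""
    y = np.asarray(y, dtype=float)
    assert y.shape == (1 + 6 * n_mix,)
    eos_hat = y[0]
    pi_hat, mu1, mu2, s1_hat, s2_hat, rho_hat = np.split(y[1:], 6)
    pi = np.exp(pi_hat - pi_hat.max())  # softmax, shifted for stability
    pi /= pi.sum()
    return {
        "eos": 1.0 / (1.0 + np.exp(-eos_hat)),  # end-of-stroke probability
        "pi": pi,                                # mixture weights, sum to 1
        "mu": np.stack([mu1, mu2], axis=1),      # means, shape (n_mix, 2)
        "sigma": np.exp(np.stack([s1_hat, s2_hat], axis=1)),  # positive
        "rho": np.tanh(rho_hat),                 # correlations in (-1, 1)
    }

def sample_point(params, rng):
    """Draw one (dx, dy, pen_up) triple from the mixture."""
    k = rng.choice(len(params["pi"]), p=params["pi"])  # pick a component
    s1, s2 = params["sigma"][k]
    rho = params["rho"][k]
    cov = np.array([[s1 * s1, rho * s1 * s2],
                    [rho * s1 * s2, s2 * s2]])
    dx, dy = rng.multivariate_normal(params["mu"][k], cov)
    pen_up = bool(rng.rand() < params["eos"])
    return dx, dy, pen_up

# Hypothetical raw network output for a 3-component mixture.
raw = np.random.RandomState(1).randn(1 + 6 * 3)
params = split_mdn_outputs(raw, n_mix=3)
print(sample_point(params, np.random.RandomState(0)))
```

During sampling, a point drawn this way would be fed back into the network as the next input, one time step at a time.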

The model at one time step looks like this

I've implemented the attention mechanism from the paper:
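As a rough sketch of that mechanism, the snippet below computes one step of the soft window from the Graves paper in plain NumPy: a mixture of Gaussian-shaped bumps over character positions whose centers can only move forward. The function name `attention_window` and its argument names are hypothetical, and the repo's TensorFlow implementation may be organized differently.

```python
import numpy as np

def attention_window(alpha_hat, beta_hat, kappa_hat, kappa_prev, char_onehots):
    """One step of a Graves-style soft window over a character sequence.

    alpha_hat, beta_hat, kappa_hat: raw network outputs, shape (K,)
    kappa_prev: window positions from the previous step, shape (K,)
    char_onehots: one-hot encoded text, shape (U, alphabet_size)
    """
    alpha = np.exp(alpha_hat)               # weight of each window component
    beta = np.exp(beta_hat)                 # inverse width of each component
    kappa = kappa_prev + np.exp(kappa_hat)  # positions only move forward
    u = np.arange(1, char_onehots.shape[0] + 1)  # character indices 1..U
    # phi[u] = sum_k alpha_k * exp(-beta_k * (kappa_k - u)^2)
    diff = kappa[:, None] - u[None, :]
    phi = (alpha[:, None] * np.exp(-beta[:, None] * diff ** 2)).sum(axis=0)
    window = phi.dot(char_onehots)          # soft blend of the characters
    return window, phi, kappa

# Toy example: a 3-character text, one window component, zero raw outputs.
chars = np.eye(3)
kappa = np.zeros(1)
for step in range(2):
    window, phi, kappa = attention_window(
        np.zeros(1), np.zeros(1), np.zeros(1), kappa, chars)
    print("step %d: focus on character index %d" % (step, int(np.argmax(phi))))
```

Because `kappa` grows by a positive amount at every step, the peak of `phi` slides along the text from left to right, which is what lets the network attend to one character at a time.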

Dependencies

All code is written in Python 2.7. You will need:

- Numpy

- Matplotlib

- TensorFlow 1.0

- OPTIONAL: Jupyter (if you want to run sample.ipynb and dataloader.ipynb)