icoxfog417 / Tensorflow_qrnn

Licence: mit

QRNN implementation for TensorFlow


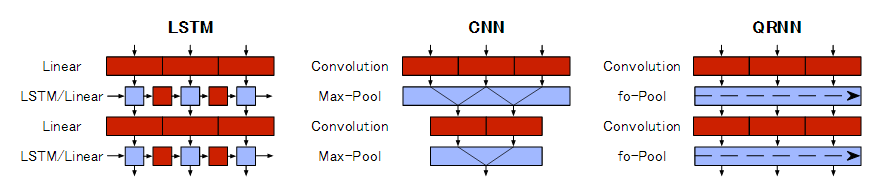

Tensorflow QRNN

A QRNN (Quasi-Recurrent Neural Network) implementation for TensorFlow. The implementation follows the blog post below.

New neural network building block allows faster and more accurate text understanding
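For readers new to the architecture, here is a minimal NumPy sketch of a single QRNN layer with fo-pooling, as described in the QRNN paper: a causal (masked) convolution over time produces the candidate `z`, forget gate `f`, and output gate `o`, and only a cheap element-wise recurrence runs sequentially. This is an illustration, not the code in this repository; the function names, shapes, and random weights are assumptions made for the example.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def masked_conv1d(x, w):
    """Causal 1-D convolution over time.

    x: (T, n_in) input sequence, w: (k, n_in, n_out) filter bank.
    Output step t only depends on x[t-k+1 .. t] (zero-padded on the left).
    """
    k = w.shape[0]
    T = x.shape[0]
    padded = np.concatenate([np.zeros((k - 1, x.shape[1])), x], axis=0)
    out = np.zeros((T, w.shape[2]))
    for t in range(T):
        window = padded[t:t + k]  # rows correspond to x[t-k+1 .. t]
        out[t] = np.einsum("ki,kio->o", window, w)
    return out


def qrnn_fo_pool(x, w_z, w_f, w_o):
    """One QRNN layer with fo-pooling (hypothetical helper, not the repo's API)."""
    z = np.tanh(masked_conv1d(x, w_z))   # candidate vectors
    f = sigmoid(masked_conv1d(x, w_f))   # forget gates
    o = sigmoid(masked_conv1d(x, w_o))   # output gates
    c = np.zeros(z.shape[1])             # cell state starts at zero
    h = np.zeros_like(z)
    for t in range(z.shape[0]):
        # The only sequential part: element-wise pooling, no matrix multiply.
        c = f[t] * c + (1.0 - f[t]) * z[t]
        h[t] = o[t] * c
    return h


rng = np.random.default_rng(0)
T, n_in, n_hidden, k = 8, 8, 16, 2  # illustrative sizes
x = rng.normal(size=(T, n_in))
w_z, w_f, w_o = (rng.normal(scale=0.1, size=(k, n_in, n_hidden)) for _ in range(3))
h = qrnn_fo_pool(x, w_z, w_f, w_o)
print(h.shape)  # (8, 16)
```

Because the gates come from convolutions rather than from the previous hidden state, they can be computed for all time steps at once, which is where the speed advantage over an LSTM is expected to come from.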

Dependencies

- TensorFlow: 0.12.0

- scikit-learn: 0.18.1 (used by the working check)

How to run

Forward Test

To confirm that forward propagation works, run the script below.

python test_tf_qrnn_forward.py

Working Check

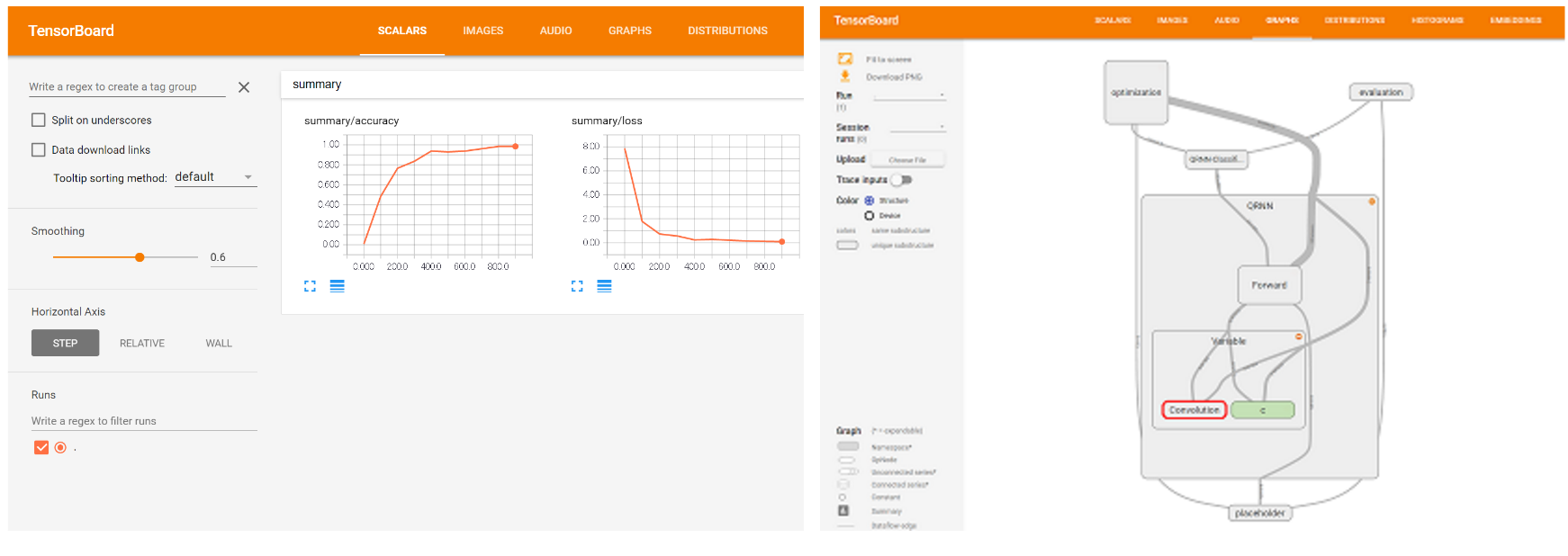

To compare the performance of QRNN against the baseline (LSTM), run the script below. The dataset is scikit-learn's digits dataset.

python test_tf_qrnn_work.py
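As a rough sketch of how the digits dataset can be fed to a sequence model, the snippet below loads it with scikit-learn and treats each 8x8 image as a sequence of 8 time steps with 8 features each. The reshaping, scaling, split ratio, and seed are assumptions for illustration; `test_tf_qrnn_work.py` may prepare the data differently.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split

digits = load_digits()

# digits.images has shape (n_samples, 8, 8); pixel intensities range from 0 to 16.
# Read each image row by row: 8 time steps, 8 features per step (an assumption).
X = digits.images / 16.0
y = digits.target

# The split ratio and seed are arbitrary choices for this sketch.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

print(X_train.shape, X_test.shape)
```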

You can inspect the training results in TensorBoard. For example:

tensorboard --logdir=./summary/qrnn

Experiments

Baseline (LSTM) Working check

Iter 0: loss=2.473149299621582, accuracy=0.1171875

Iter 100: loss=0.31235527992248535, accuracy=0.921875

Iter 200: loss=0.1704500913619995, accuracy=0.9453125

Iter 300: loss=0.0782063901424408, accuracy=0.9765625

Iter 400: loss=0.04097321629524231, accuracy=1.0

Iter 500: loss=0.023687714710831642, accuracy=0.9921875

Iter 600: loss=0.07718617469072342, accuracy=0.9765625

Iter 700: loss=0.02005828730762005, accuracy=0.9921875

Iter 800: loss=0.006271282210946083, accuracy=1.0

Iter 900: loss=0.007853344082832336, accuracy=1.0

Testset Accuracy=0.9375

takes 15.83749008178711 seconds.

QRNN Working check

Iter 0: loss=6.942812919616699, accuracy=0.0703125

Iter 100: loss=1.6366937160491943, accuracy=0.59375

Iter 200: loss=0.7058627605438232, accuracy=0.796875

Iter 300: loss=0.3940553069114685, accuracy=0.8984375

Iter 400: loss=0.2623080909252167, accuracy=0.9375

Iter 500: loss=0.3940059542655945, accuracy=0.921875

Iter 600: loss=0.1395827978849411, accuracy=0.96875

Iter 700: loss=0.11944477260112762, accuracy=0.984375

Iter 800: loss=0.1389300674200058, accuracy=0.9765625

Iter 900: loss=0.09582504630088806, accuracy=0.96875

Testset Accuracy=0.9140625

takes 13.540465116500854 seconds.
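To put the two runs side by side, the short script below computes the relative timing and the test-accuracy gap from the numbers reported in the logs above. The dictionary layout and variable names are only for illustration.

```python
# Figures copied from the two logs above (one run each).
lstm = {"test_accuracy": 0.9375, "seconds": 15.83749008178711}
qrnn = {"test_accuracy": 0.9140625, "seconds": 13.540465116500854}

speedup = lstm["seconds"] / qrnn["seconds"]
time_saved = 1.0 - qrnn["seconds"] / lstm["seconds"]
accuracy_gap = lstm["test_accuracy"] - qrnn["test_accuracy"]

print(f"QRNN speedup over LSTM: {speedup:.2f}x")
print(f"Wall-clock time saved:  {time_saved:.1%}")
print(f"Test accuracy gap:      {accuracy_gap:.4f} (LSTM minus QRNN)")
```

In this single run QRNN finishes roughly 1.17x faster, with a test accuracy about 2.3 points lower than the LSTM baseline. With only one run per model on a small dataset, these figures are a sanity check rather than a benchmark.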
