TensorFlow->TensorRT Image Classification

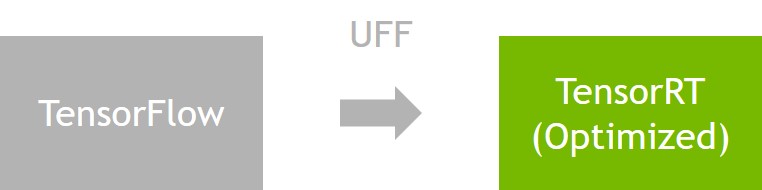

This repository contains examples, scripts, and code for image classification using TensorFlow models (from the TensorFlow slim model zoo) converted to TensorRT. Converting TensorFlow models to TensorRT offers significant performance gains on the Jetson TX2, as seen below.

- Models

- Setup

- Download models and create frozen graphs

- Convert frozen graph to TensorRT engine

- Execute TensorRT engine

- Benchmark all models

Models

The table below shows various details related to pretrained models ported from the TensorFlow slim model zoo.

| Model | Input Size | TensorRT (TX2 / Half) | TensorRT (TX2 / Float) | TensorFlow (TX2 / Float) | Input Name | Output Name | Preprocessing Fn. |
|---|---|---|---|---|---|---|---|
| inception_v1 | 224x224 | 7.98ms | 12.8ms | 27.6ms | input | InceptionV1/Logits/SpatialSqueeze | inception |
| inception_v3 | 299x299 | 26.3ms | 46.1ms | 98.4ms | input | InceptionV3/Logits/SpatialSqueeze | inception |
| inception_v4 | 299x299 | 52.1ms | 88.2ms | 176ms | input | InceptionV4/Logits/Logits/BiasAdd | inception |
| inception_resnet_v2 | 299x299 | 53.0ms | 98.7ms | 168ms | input | InceptionResnetV2/Logits/Logits/BiasAdd | inception |
| resnet_v1_50 | 224x224 | 15.7ms | 27.1ms | 63.9ms | input | resnet_v1_50/SpatialSqueeze | vgg |
| resnet_v1_101 | 224x224 | 29.9ms | 51.8ms | 107ms | input | resnet_v1_101/SpatialSqueeze | vgg |
| resnet_v1_152 | 224x224 | 42.6ms | 78.2ms | 157ms | input | resnet_v1_152/SpatialSqueeze | vgg |
| resnet_v2_50 | 299x299 | 27.5ms | 44.4ms | 92.2ms | input | resnet_v2_50/SpatialSqueeze | inception |
| resnet_v2_101 | 299x299 | 49.2ms | 83.1ms | 160ms | input | resnet_v2_101/SpatialSqueeze | inception |
| resnet_v2_152 | 299x299 | 74.6ms | 124ms | 230ms | input | resnet_v2_152/SpatialSqueeze | inception |
| mobilenet_v1_0p25_128 | 128x128 | 2.67ms | 2.65ms | 15.7ms | input | MobilenetV1/Logits/SpatialSqueeze | inception |
| mobilenet_v1_0p5_160 | 160x160 | 3.95ms | 4.00ms | 16.9ms | input | MobilenetV1/Logits/SpatialSqueeze | inception |
| mobilenet_v1_1p0_224 | 224x224 | 12.9ms | 12.9ms | 24.4ms | input | MobilenetV1/Logits/SpatialSqueeze | inception |
| vgg_16 | 224x224 | 38.2ms | 79.2ms | 171ms | input | vgg_16/fc8/BiasAdd | vgg |

The times recorded include data transfer to GPU, network execution, and data transfer back from GPU. Time does not include preprocessing. See scripts/test_tf.py, scripts/test_trt.py, and src/test/test_trt.cu for implementation details.
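To put the latencies in perspective, the short Python sketch below computes how much faster each TensorRT mode is than TensorFlow for a few rows. The numbers are copied from the table; the dictionary layout and the `speedup` and `summarize` helpers are illustrative assumptions and are not part of this repository.

```python
# Latencies in milliseconds, copied from the table above:
# (TensorRT half, TensorRT float, TensorFlow float)
LATENCY_MS = {
    "inception_v1": (7.98, 12.8, 27.6),
    "resnet_v1_50": (15.7, 27.1, 63.9),
    "mobilenet_v1_1p0_224": (12.9, 12.9, 24.4),
    "vgg_16": (38.2, 79.2, 171.0),
}


def speedup(baseline_ms, optimized_ms):
    """Return how many times faster the optimized run is than the baseline."""
    return baseline_ms / optimized_ms


def summarize(latencies):
    """Map each model to (half speedup, float speedup) relative to TensorFlow."""
    summary = {}
    for model, (trt_half, trt_float, tf_float) in latencies.items():
        summary[model] = (speedup(tf_float, trt_half), speedup(tf_float, trt_float))
    return summary


if __name__ == "__main__":
    for model, (half_x, float_x) in sorted(summarize(LATENCY_MS).items()):
        print("%-22s half: %.2fx  float: %.2fx" % (model, half_x, float_x))
```

For inception_v1 this works out to roughly 3.5x in half precision and 2.2x in float. The MobileNet rows show essentially no difference between half and float on the TX2.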

Setup

- Flash the Jetson TX2 using JetPack 3.2. Be sure to install

  - CUDA 9.0

  - OpenCV4Tegra

  - cuDNN

  - TensorRT 3.0

- Install pip on Jetson TX2.

      sudo apt-get install python-pip

- Install TensorFlow on Jetson TX2.

  - Download the TensorFlow 1.5.0 pip wheel from here. This build of TensorFlow is provided as a convenience for the purposes of this project.

  - Install TensorFlow using pip

        sudo pip install tensorflow-1.5.0rc0-cp27-cp27mu-linux_aarch64.whl

- Install uff exporter on Jetson TX2.

  - Download TensorRT 3.0.4 for Ubuntu 16.04 and CUDA 9.0 tar package from https://developer.nvidia.com/nvidia-tensorrt-download.

  - Extract archive

        tar -xzf TensorRT-3.0.4.Ubuntu-16.04.3.x86_64.cuda-9.0.cudnn7.0.tar.gz

  - Install uff python package using pip

        sudo pip install TensorRT-3.0.4/uff/uff-0.2.0-py2.py3-none-any.whl

- Clone and build this project

      git clone --recursive https://github.com/NVIDIA-Jetson/tf_to_trt_image_classification.git
      cd tf_to_trt_image_classification
      mkdir build
      cd build
      cmake ..
      make
      cd ..
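After setup, it can be useful to confirm that the Python packages installed above are importable before moving on. The following is a minimal sketch, not a script shipped with this project; the `check_packages` helper is a hypothetical name, while `tensorflow` and `uff` are the import names of the two pip packages.

```python
import importlib


def check_packages(names):
    """Return a dict mapping each module name to True if it can be imported."""
    status = {}
    for name in names:
        try:
            importlib.import_module(name)
            status[name] = True
        except ImportError:
            status[name] = False
    return status


if __name__ == "__main__":
    # "tensorflow" and "uff" are the import names of the packages installed above.
    for name, ok in sorted(check_packages(["tensorflow", "uff"]).items()):
        print("%-12s %s" % (name, "OK" if ok else "MISSING"))
```

This only checks that the modules import; it does not verify versions or that CUDA and TensorRT are working.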

Download models and create frozen graphs

Run the following bash script to download all of the pretrained models.

source scripts/download_models.sh

If there are any models you don't want to use, simply remove the URL from the model list in scripts/download_models.sh.

Next, because the TensorFlow models are provided in checkpoint format, we must convert them to frozen graphs for optimization with TensorRT. Run the scripts/models_to_frozen_graphs.py script.

python scripts/models_to_frozen_graphs.py

If you removed any models in the previous step, you must add 'exclude': True to the corresponding item in the NETS dictionary located in scripts/model_meta.py.

If you are following the instructions for executing engines below, you may also need some sample images. Run the following script to download a few images from ImageNet.

source scripts/download_images.sh
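To illustrate the exclude flag mentioned earlier in this section, here is a small sketch of how a marked entry might look and how it could be filtered out. The trimmed-down NETS layout and the `active_models` helper are assumptions made for illustration; the real entries in scripts/model_meta.py carry more fields and may be processed differently.

```python
# Hypothetical, trimmed-down stand-in for the NETS dictionary in
# scripts/model_meta.py; the real entries may carry different fields.
NETS = {
    "inception_v1": {"input_name": "input"},
    "vgg_16": {"input_name": "input", "exclude": True},  # model was not downloaded
}


def active_models(nets):
    """Return the sorted names of models not marked with 'exclude': True."""
    return sorted(name for name, meta in nets.items()
                  if not meta.get("exclude", False))


if __name__ == "__main__":
    print(active_models(NETS))
```

Note that the flag uses Python's capitalized True, since model_meta.py is a Python file.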

Convert frozen graph to TensorRT engine

Run the scripts/convert_plan.py script from the root directory of the project, referencing the models table for relevant parameters. For example, to convert the Inception V1 model run the following

python scripts/convert_plan.py data/frozen_graphs/inception_v1.pb data/plans/inception_v1.plan input 224 224 InceptionV1/Logits/SpatialSqueeze 1 0 float

The inputs to the convert_plan.py script are

- frozen graph path

- output plan path

- input node name

- input height

- input width

- output node name

- max batch size

- max workspace size

- data type (float or half)

This script assumes single-input, single-output image models, and may not work out of the box for models other than those in the table above.
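When converting several models by hand, it is easy to get the nine positional arguments out of order. The sketch below assembles the command from the values in the models table, following the argument order listed above. The `build_convert_command` helper is hypothetical, and the `data/frozen_graphs/<name>.pb` and `data/plans/<name>.plan` path pattern is an assumption generalized from the Inception V1 example.

```python
def build_convert_command(name, input_name, height, width, output_name,
                          max_batch_size=1, max_workspace_size=0,
                          data_type="float"):
    """Assemble the argument list for scripts/convert_plan.py in documented order."""
    if data_type not in ("float", "half"):
        raise ValueError("data_type must be 'float' or 'half'")
    return [
        "python", "scripts/convert_plan.py",
        "data/frozen_graphs/%s.pb" % name,  # frozen graph path (assumed pattern)
        "data/plans/%s.plan" % name,        # output plan path (assumed pattern)
        input_name,
        str(height),
        str(width),
        output_name,
        str(max_batch_size),
        str(max_workspace_size),
        data_type,
    ]


if __name__ == "__main__":
    cmd = build_convert_command("resnet_v1_50", "input", 224, 224,
                                "resnet_v1_50/SpatialSqueeze", data_type="half")
    print(" ".join(cmd))
    # To run it from the project root, pass cmd to subprocess.check_call.
```

With the default arguments and the Inception V1 values, the joined list reproduces the example command shown earlier in this section.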

Execute TensorRT engine

Call the examples/classify_image program from the root directory of the project, referencing the models table for relevant parameters. For example, to run the Inception V1 model converted as above

./build/examples/classify_image/classify_image data/images/gordon_setter.jpg data/plans/inception_v1.plan data/imagenet_labels_1001.txt input InceptionV1/Logits/SpatialSqueeze inception

For reference, the inputs to the example program are

- input image path

- plan file path

- labels file (one label per line, line number corresponds to index in output)

- input node name

- output node name

- preprocessing function (either vgg or inception)
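The two preprocessing names match conventions from the TensorFlow slim model zoo. As a rough sketch, and assuming the example program follows the usual slim conventions (this has not been checked against the C++ source in this repository), inception rescales pixel values to [-1, 1] and vgg subtracts a per-channel ImageNet mean. The helper names below are hypothetical, and resizing the image to the model's input size is omitted.

```python
# Per-channel RGB means used by TensorFlow slim's VGG preprocessing.
VGG_MEAN_RGB = (123.68, 116.78, 103.94)


def preprocess_inception(pixel):
    """Scale an (r, g, b) pixel from [0, 255] to [-1, 1]."""
    return tuple(2.0 * (c / 255.0 - 0.5) for c in pixel)


def preprocess_vgg(pixel):
    """Subtract the per-channel mean from an (r, g, b) pixel in [0, 255]."""
    return tuple(float(c) - m for c, m in zip(pixel, VGG_MEAN_RGB))


if __name__ == "__main__":
    print(preprocess_inception((0, 255, 127.5)))
    print(preprocess_vgg((255, 255, 255)))
```

Passing the wrong preprocessing name for a model feeds it inputs in a range it was not trained on, so check the last column of the models table when predictions look implausible.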

We provide two image label files in the data folder. Some of the TensorFlow models were trained with an additional "background" class, causing the model to have 1001 outputs instead of 1000. To determine the number of outputs for each model, reference the NETS variable in scripts/model_meta.py.
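Because the line number in the labels file is the index into the model output, a labels file with the wrong number of entries silently shifts every prediction. The sketch below shows the index-to-label mapping with a length check that would catch a 1000 versus 1001 mismatch. The `load_labels` and `top_k` helpers are hypothetical and use a toy three-class example rather than the real label files.

```python
def load_labels(text):
    """Split label-file contents into a list; list index equals output index."""
    return [line.strip() for line in text.splitlines()]


def top_k(scores, labels, k=1):
    """Return the k highest-scoring (label, score) pairs, best first."""
    if len(scores) != len(labels):
        raise ValueError("model has %d outputs but label file has %d entries"
                         % (len(scores), len(labels)))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [(labels[i], scores[i]) for i in ranked[:k]]


if __name__ == "__main__":
    # Toy three-class example; real label files have 1000 or 1001 lines.
    labels = load_labels("background\ntench\ngoldfish\n")
    print(top_k([0.1, 0.2, 0.7], labels, k=2))
```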

Benchmark all models

To benchmark all of the models, first convert all of the models that you downloaded above into TensorRT engines. Run the following script to convert all models

python scripts/frozen_graphs_to_plans.py

If you want to change parameters related to TensorRT optimization, just edit the scripts/frozen_graphs_to_plans.py file. Next, to benchmark all of the models run the scripts/test_trt.py script

python scripts/test_trt.py

Once finished, the timing results will be stored at data/test_output_trt.txt. To also benchmark the TensorFlow models, run:

python scripts/test_tf.py

The results will be stored at data/test_output_tf.txt. This benchmarking script loads an example image as input, so make sure you have downloaded the sample images as described above.
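For readers who want to time their own model in a comparable way, the following is a minimal sketch of a latency measurement loop. It is not the code in scripts/test_trt.py or scripts/test_tf.py. The `average_latency_ms` helper, the `run_once` callable, and the warm-up and run counts are all assumptions; `run_once` stands for one complete inference (copy input to the GPU, execute the network, copy the output back), matching what the reported times cover.

```python
from timeit import default_timer


def average_latency_ms(run_once, num_runs=50, num_warmup=5):
    """Return the mean wall-clock time of run_once() in milliseconds.

    run_once is a hypothetical callable that performs one complete inference:
    copy the input to the GPU, execute the network, copy the output back.
    """
    for _ in range(num_warmup):
        run_once()  # warm-up runs are not timed
    start = default_timer()
    for _ in range(num_runs):
        run_once()
    elapsed = default_timer() - start
    return 1000.0 * elapsed / num_runs


if __name__ == "__main__":
    # Stand-in workload so the sketch runs without a GPU.
    print("%.3f ms" % average_latency_ms(lambda: sum(range(10000))))
```

Preprocessing should stay outside `run_once` if the goal is a number comparable to the table in the Models section.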