emla2805 / Vision Transformer

TensorFlow implementation of the Vision Transformer (An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale)

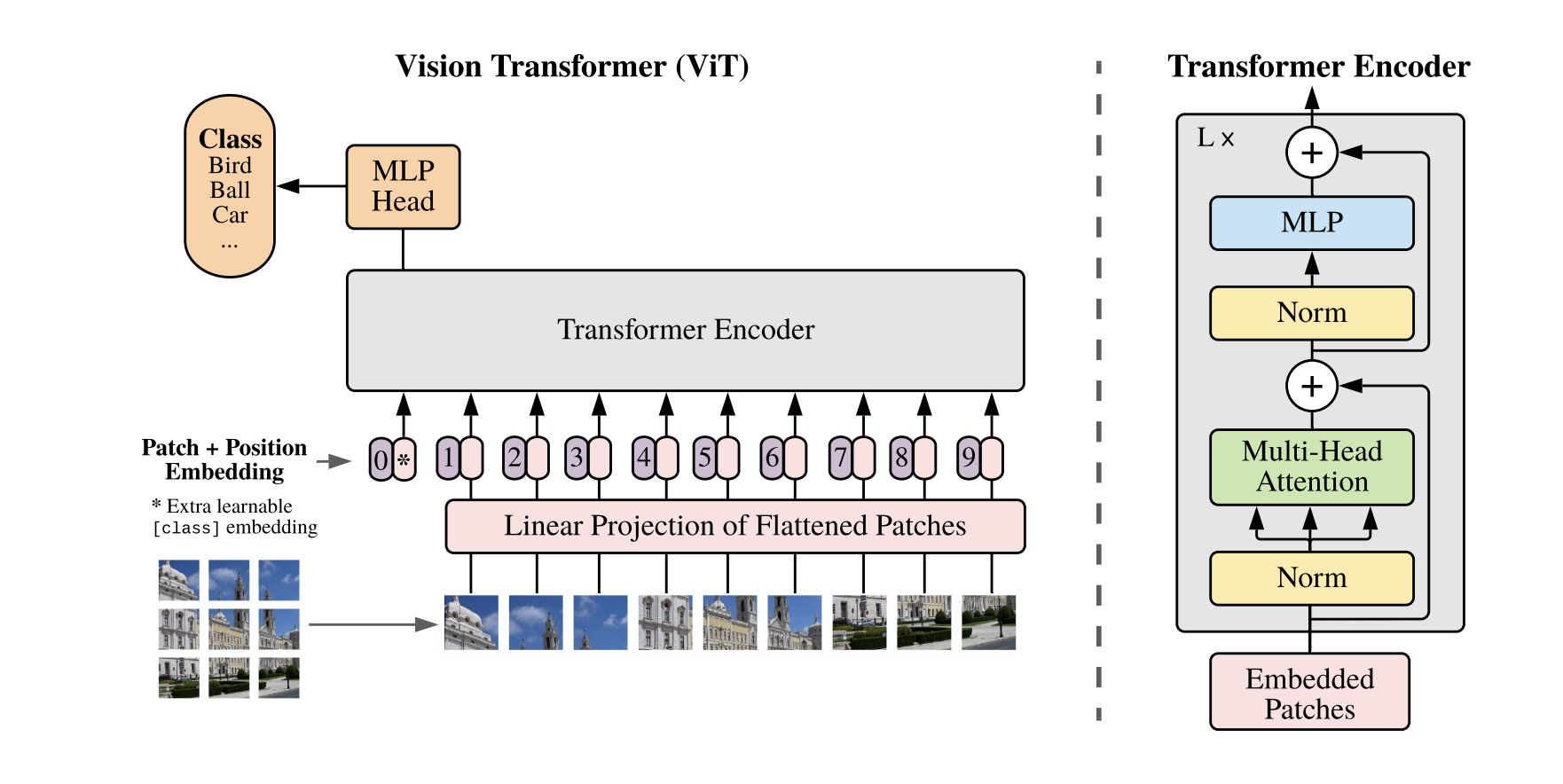

Vision Transformer (ViT)

TensorFlow implementation of the Vision Transformer (ViT) presented in "An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale", in which the authors show that Transformers applied directly to sequences of image patches, and pre-trained on large datasets, perform very well on image classification.
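To make the "image patches" idea concrete, here is a minimal sketch of the arithmetic behind splitting an image into a sequence of patches. The function name `patch_grid` and the 224-pixel input size are illustrative assumptions, not values taken from this repository; only the 16x16 patch size comes from the paper title.

```python
from typing import Tuple


def patch_grid(image_size: int, patch_size: int, channels: int = 3) -> Tuple[int, int]:
    """Return (number of patches, values per flattened patch) for a square image.

    Hypothetical helper for illustration only; it is not part of this repository.
    """
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    patches_per_side = image_size // patch_size
    num_patches = patches_per_side * patches_per_side
    # Each patch is flattened into one vector before being fed to the Transformer.
    patch_dim = patch_size * patch_size * channels
    return num_patches, patch_dim


# Assumed example: a 224x224 RGB image cut into 16x16 patches.
num_patches, patch_dim = patch_grid(224, 16, channels=3)
print(num_patches, patch_dim)  # 196 768
```

Under these assumptions the Transformer sees a sequence of 196 "words", each a vector of 768 raw pixel values, which is what lets a standard sequence model be applied to images.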

Install dependencies

Create a Python 3 virtual environment and activate it:

virtualenv -p python3 venv

source ./venv/bin/activate

Next, install the required dependencies:

pip install -r requirements.txt

Train model

Start the model training by running:

python train.py --logdir path/to/log/dir

To track metrics, start TensorBoard:

tensorboard --logdir path/to/log/dir

Then open localhost:6006 in a browser.

Citation

@inproceedings{anonymous2021an,
  title={An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale},
  author={Anonymous},
  booktitle={Submitted to International Conference on Learning Representations},
  year={2021},
  url={https://openreview.net/forum?id=YicbFdNTTy},
  note={under review}
}
