scut-aitcm / Competitive Inner Imaging Senet

Source code of paper: (not available now)

Stars: ✭ 89

Programming Languages

python

Projects that are alternatives of or similar to Competitive Inner Imaging Senet

Sockeye

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Stars: ✭ 990 (+1012.36%)

Mutual labels: attention-mechanism, mxnet

Attend infer repeat

A TensorFlow implementation of Attend, Infer, Repeat

Stars: ✭ 82 (-7.87%)

Mutual labels: attention-mechanism

Hierarchical Attention Networks

TensorFlow implementation of the paper "Hierarchical Attention Networks for Document Classification"

Stars: ✭ 75 (-15.73%)

Mutual labels: attention-mechanism

Mxnet Gluon Syncbn

MXNet Gluon Synchronized Batch Normalization Preview

Stars: ✭ 78 (-12.36%)

Mutual labels: mxnet

Global Self Attention Network

A Pytorch implementation of Global Self-Attention Network, a fully-attention backbone for vision tasks

Stars: ✭ 64 (-28.09%)

Mutual labels: attention-mechanism

Grounder

TensorFlow implementation of "Grounding of Textual Phrases in Images by Reconstruction"

Stars: ✭ 83 (-6.74%)

Mutual labels: attention-mechanism

Sturcture Inpainting

Source code of AAAI 2020 paper 'Learning to Incorporate Structure Knowledge for Image Inpainting'

Stars: ✭ 78 (-12.36%)

Mutual labels: attention-mechanism

Fake news detection deep learning

Fake News Detection using Deep Learning models in Tensorflow

Stars: ✭ 74 (-16.85%)

Mutual labels: attention-mechanism

Sarcasm Detection

Detecting sarcasm on Twitter using both traditional machine learning and deep learning techniques.

Stars: ✭ 73 (-17.98%)

Mutual labels: attention-mechanism

Gluon2pytorch

Gluon to PyTorch deep neural network model converter

Stars: ✭ 70 (-21.35%)

Mutual labels: mxnet

Simplednn

SimpleDNN is a lightweight open-source machine learning library written in Kotlin, designed to support relevant neural network architectures in natural language processing tasks

Stars: ✭ 81 (-8.99%)

Mutual labels: attention-mechanism

Pytorch Attention Guided Cyclegan

Pytorch implementation of Unsupervised Attention-guided Image-to-Image Translation.

Stars: ✭ 67 (-24.72%)

Mutual labels: attention-mechanism

Deepattention

Deep Visual Attention Prediction (TIP18)

Stars: ✭ 65 (-26.97%)

Mutual labels: attention-mechanism

Se3 Transformer Pytorch

Implementation of SE3-Transformers for Equivariant Self-Attention, in Pytorch. This specific repository is geared towards integration with eventual Alphafold2 replication.

Stars: ✭ 73 (-17.98%)

Mutual labels: attention-mechanism

Deepaffinity

Protein-compound affinity prediction through unified RNN-CNN

Stars: ✭ 75 (-15.73%)

Mutual labels: attention-mechanism

Attention unet

Raw implementation of attention-gated U-Net in Keras

Stars: ✭ 85 (-4.49%)

Mutual labels: attention-mechanism

Insightface

State-of-the-art 2D and 3D Face Analysis Project

Stars: ✭ 10,886 (+12131.46%)

Mutual labels: mxnet

Competitive-Inner-Imaging-SENet

Source code of paper: (not available now)

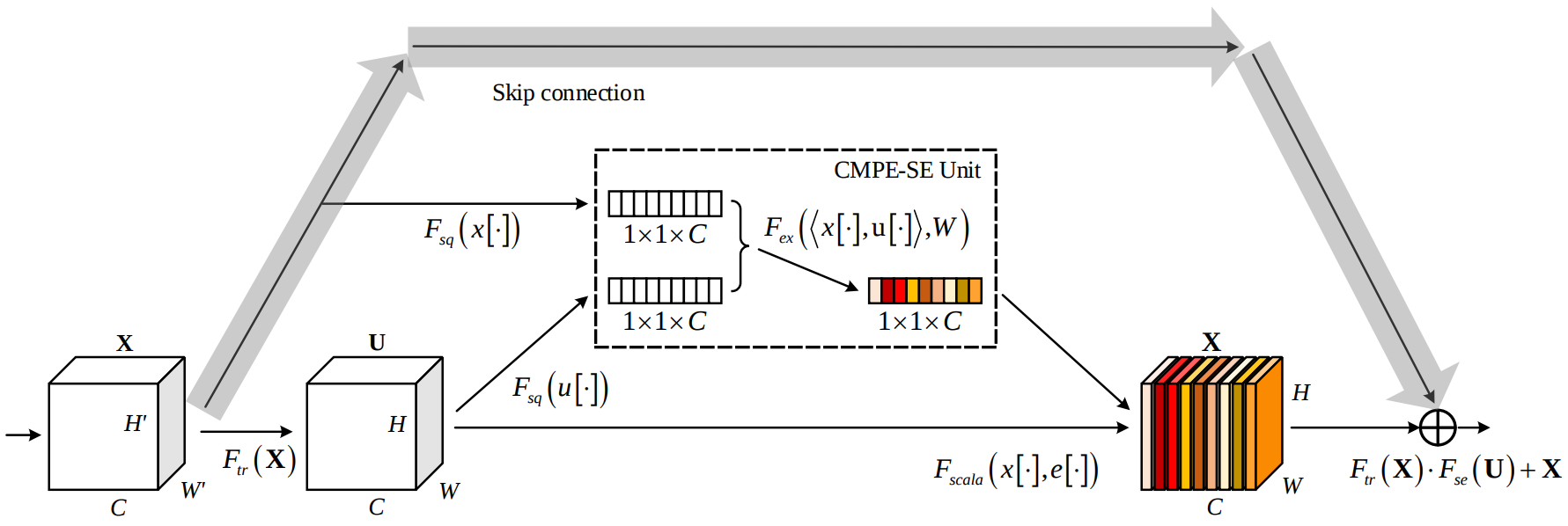

Architecture

| Competitive Squeeze-Excitation Architecture for Residual Block |
|---|
|  |

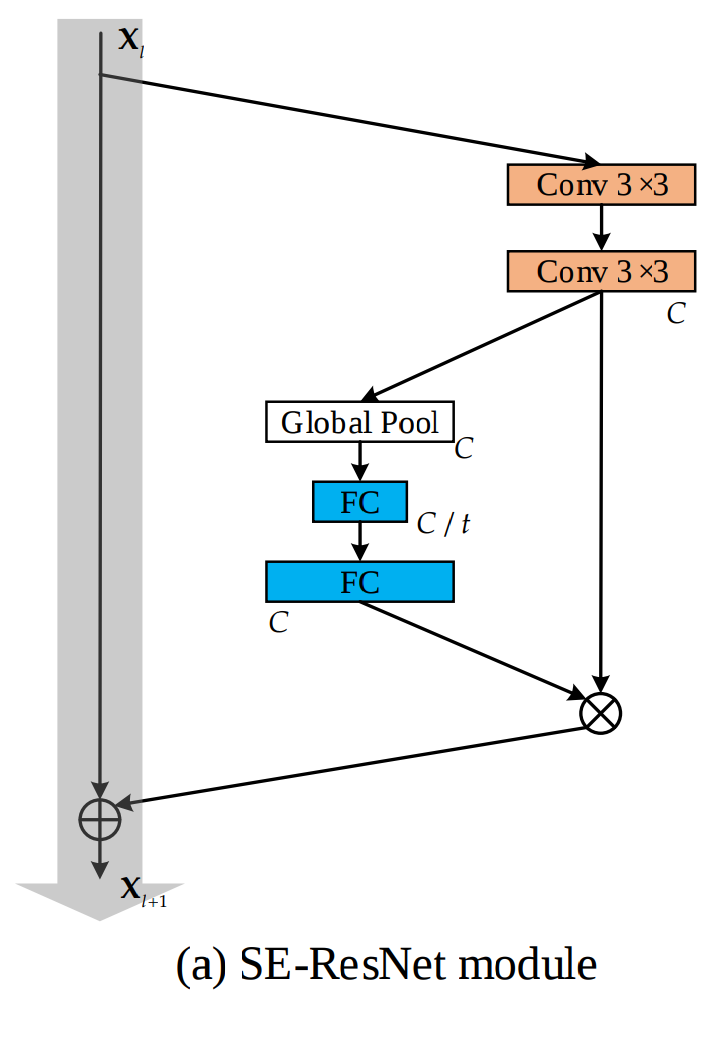

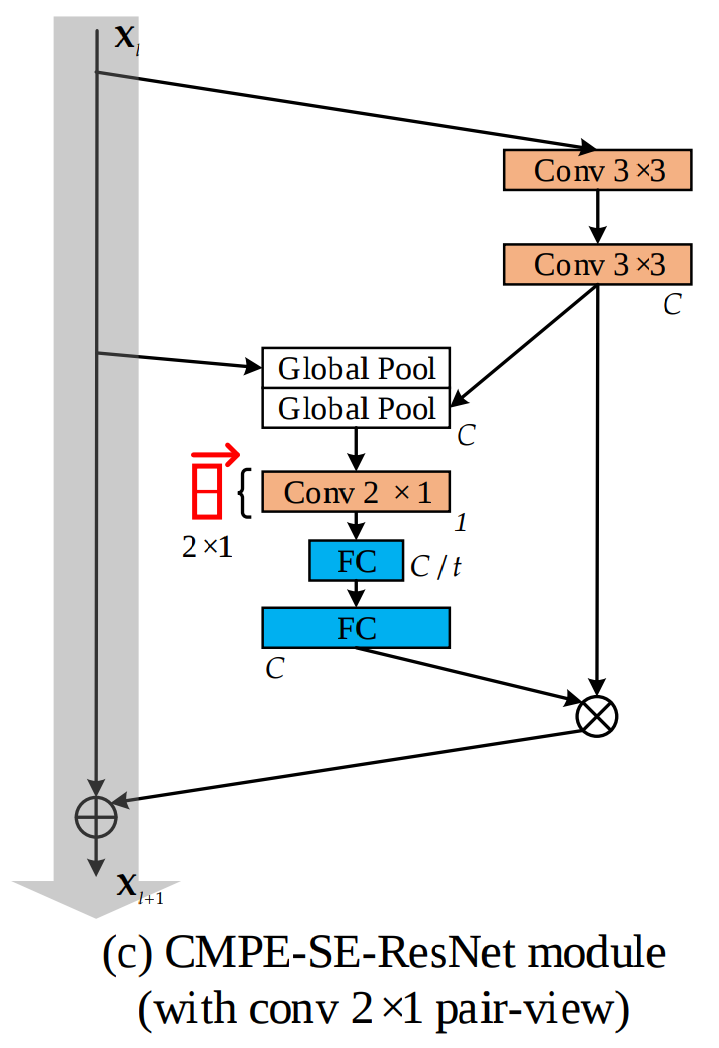

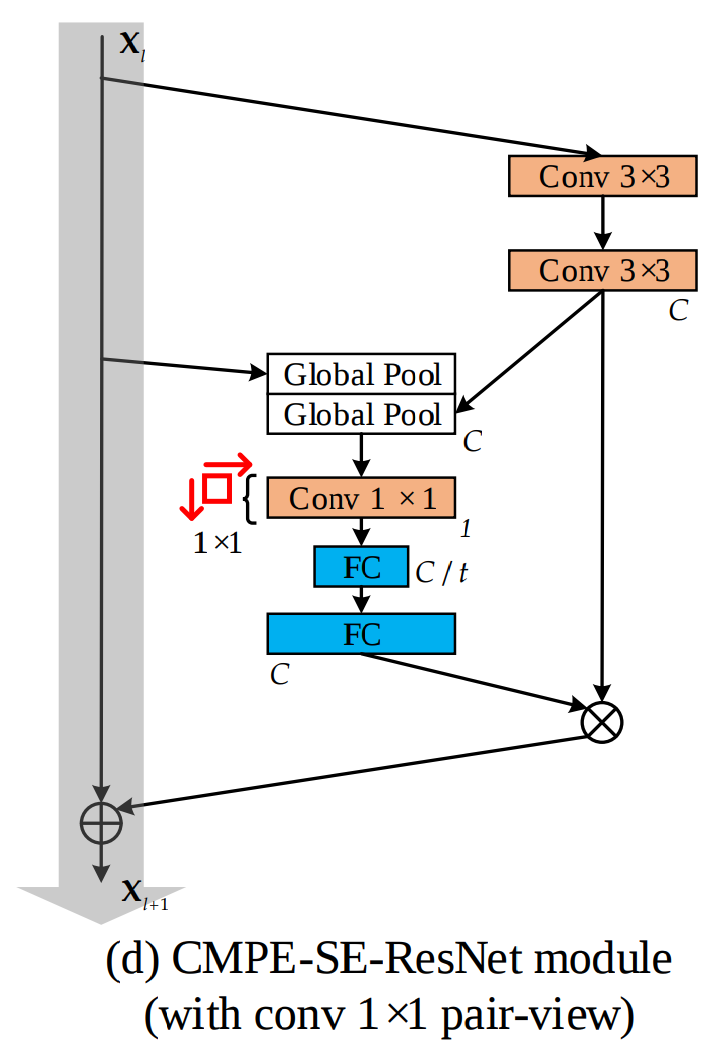

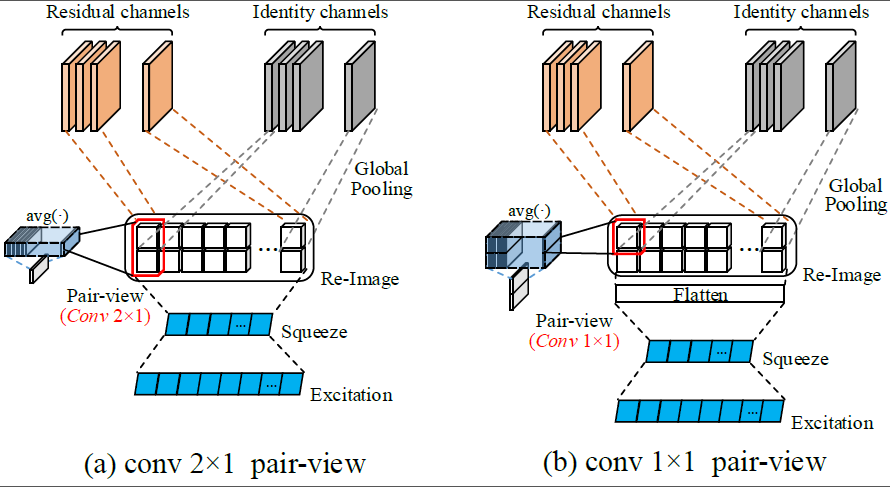

SE-ResNet module and CMPE-SE-ResNet modules:

| Normal SE | Double FC squeezes | Conv 2x1 pair-view | Conv 1x1 pair-view |
|---|---|---|---|
|  |  |  |  |
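The column names above can be read as variations on one gating pattern. The following is a minimal NumPy sketch of three of them, not the repository's MXNet code. It assumes Python 3 with a recent NumPy (the project itself targets Python 2.7 and MXNet). All function names, weight shapes, the reduction ratio `r`, and the omission of biases and batch normalization are illustrative assumptions.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def squeeze(x):
    """Global average pooling: (C, H, W) -> (C,)."""
    return x.mean(axis=(1, 2))


def normal_se(residual, w1, w2):
    """Normal SE: gate the residual branch using only its own squeezed signal."""
    hidden = np.maximum(w1 @ squeeze(residual), 0.0)  # (C/r,) ReLU bottleneck
    gate = sigmoid(w2 @ hidden)                       # (C,) per-channel gates
    return residual * gate[:, None, None]


def double_fc_se(residual, identity, w_res, w_id, w_ex):
    """Assumed "double FC squeezes": one FC per branch, then a joint excitation."""
    hidden_res = np.maximum(w_res @ squeeze(residual), 0.0)  # (C/r,)
    hidden_id = np.maximum(w_id @ squeeze(identity), 0.0)    # (C/r,)
    joint = np.concatenate([hidden_res, hidden_id])          # (2C/r,)
    gate = sigmoid(w_ex @ joint)                             # (C,)
    return residual * gate[:, None, None]


def conv2x1_pair_view_se(residual, identity, kernel, w1, w2):
    """Assumed "conv 2x1 pair-view": stack both squeezed signals as a 2 x C
    image and fuse each column with a single 2x1 filter."""
    pair = np.stack([squeeze(residual), squeeze(identity)])  # (2, C)
    fused = np.maximum(kernel @ pair, 0.0)                   # (C,), kernel is (2,)
    hidden = np.maximum(w1 @ fused, 0.0)                     # (C/r,)
    gate = sigmoid(w2 @ hidden)                              # (C,)
    return residual * gate[:, None, None]


# Toy usage with random weights (hypothetical sizes).
rng = np.random.default_rng(0)
C, r = 8, 4
residual = rng.standard_normal((C, 5, 5))
identity = rng.standard_normal((C, 5, 5))
out = double_fc_se(
    residual, identity,
    w_res=rng.standard_normal((C // r, C)),
    w_id=rng.standard_normal((C // r, C)),
    w_ex=rng.standard_normal((C, 2 * (C // r))),
)
print(out.shape)  # (8, 5, 5)
```

In all three functions the gate only rescales the residual branch. In a full residual block the gated result would then be added to the identity branch.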

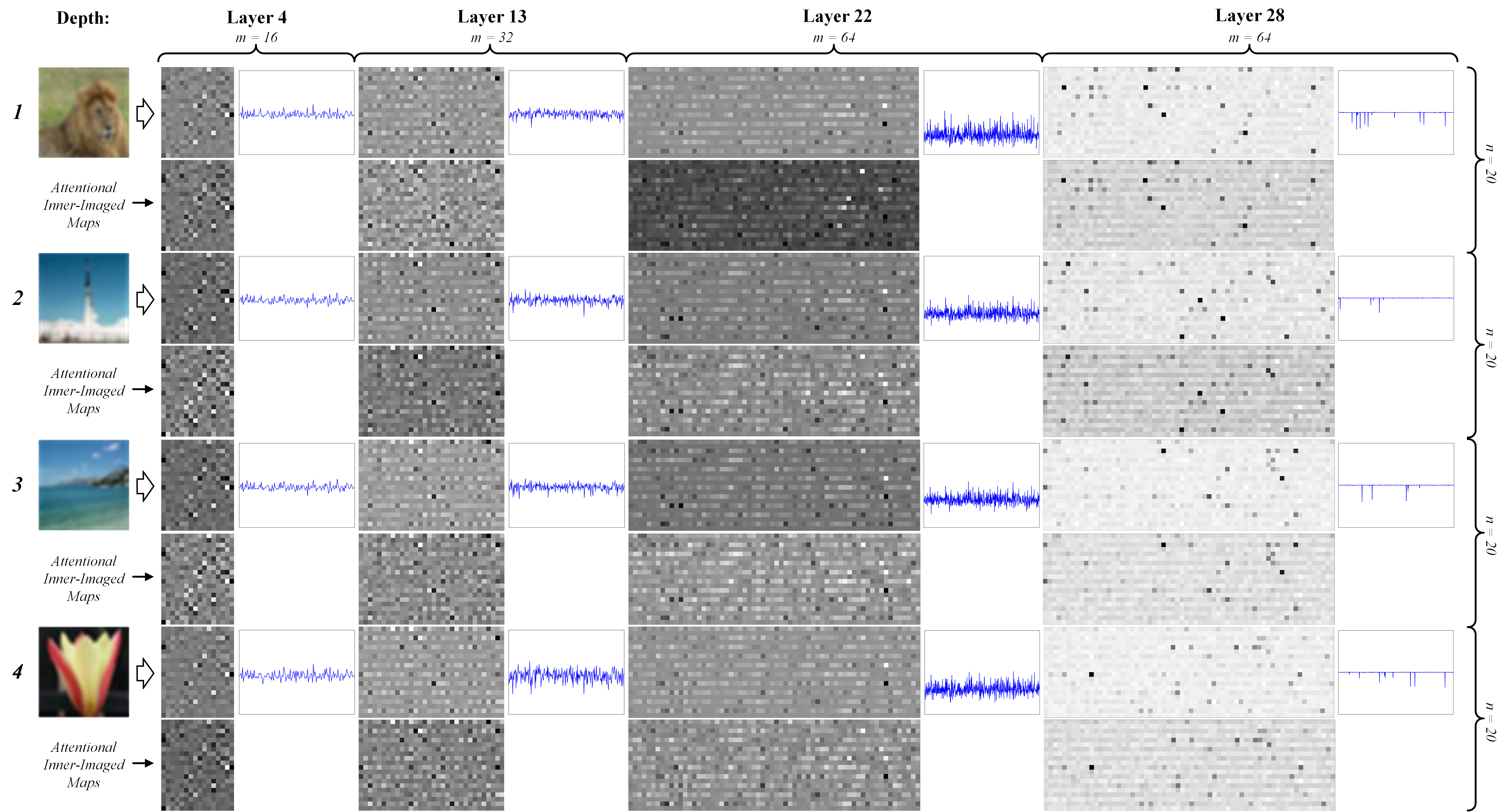

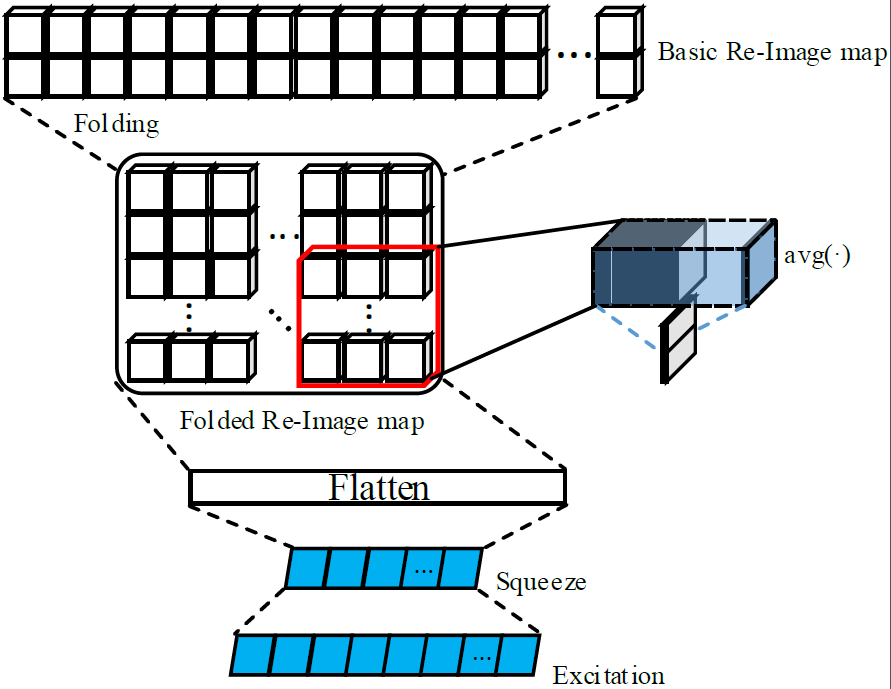

The novel Inner-Imaging mechanism for channel relation modeling in the channel-wise attention of ResNets (and potentially all CNNs):

| Basic Inner-Imaging Mode | Folded Inner-Imaging Mode |
|---|---|
|  |  |
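One possible reading of the two modes, sketched here only at the level of array shapes: the squeezed channel signals of the residual and identity branches are arranged as a small 2-D "image" that a convolution can scan. The function names, the `n_folds` parameter, and especially the row order in the folded layout are assumptions for illustration, not taken from the repository.

```python
import numpy as np


def basic_inner_image(s_res, s_id):
    """Stack two squeezed channel vectors of length C into a 2 x C image."""
    return np.stack([s_res, s_id])


def folded_inner_image(s_res, s_id, n_folds):
    """Fold each length-C vector into n_folds rows, giving a (2 * n_folds) x (C / n_folds) image.

    The row order (all residual rows first, then all identity rows) is an assumption.
    """
    c = s_res.shape[0]
    if c % n_folds != 0:
        raise ValueError("channel count must be divisible by n_folds")
    res_rows = s_res.reshape(n_folds, c // n_folds)
    id_rows = s_id.reshape(n_folds, c // n_folds)
    return np.concatenate([res_rows, id_rows], axis=0)


s_res = np.arange(8.0)          # stand-in for the squeezed residual branch
s_id = np.arange(8.0) + 100.0   # stand-in for the squeezed identity branch
print(basic_inner_image(s_res, s_id).shape)       # (2, 8)
print(folded_inner_image(s_res, s_id, 2).shape)   # (4, 4)
```

The folded layout produces a squarer image, so kernels larger than 2x1 can cover several channels from both branches at once.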

Requirements

- MXNet 1.2.0

- Python 2.7

- CUDA 8.0+ (for GPU)

Citation

not available now

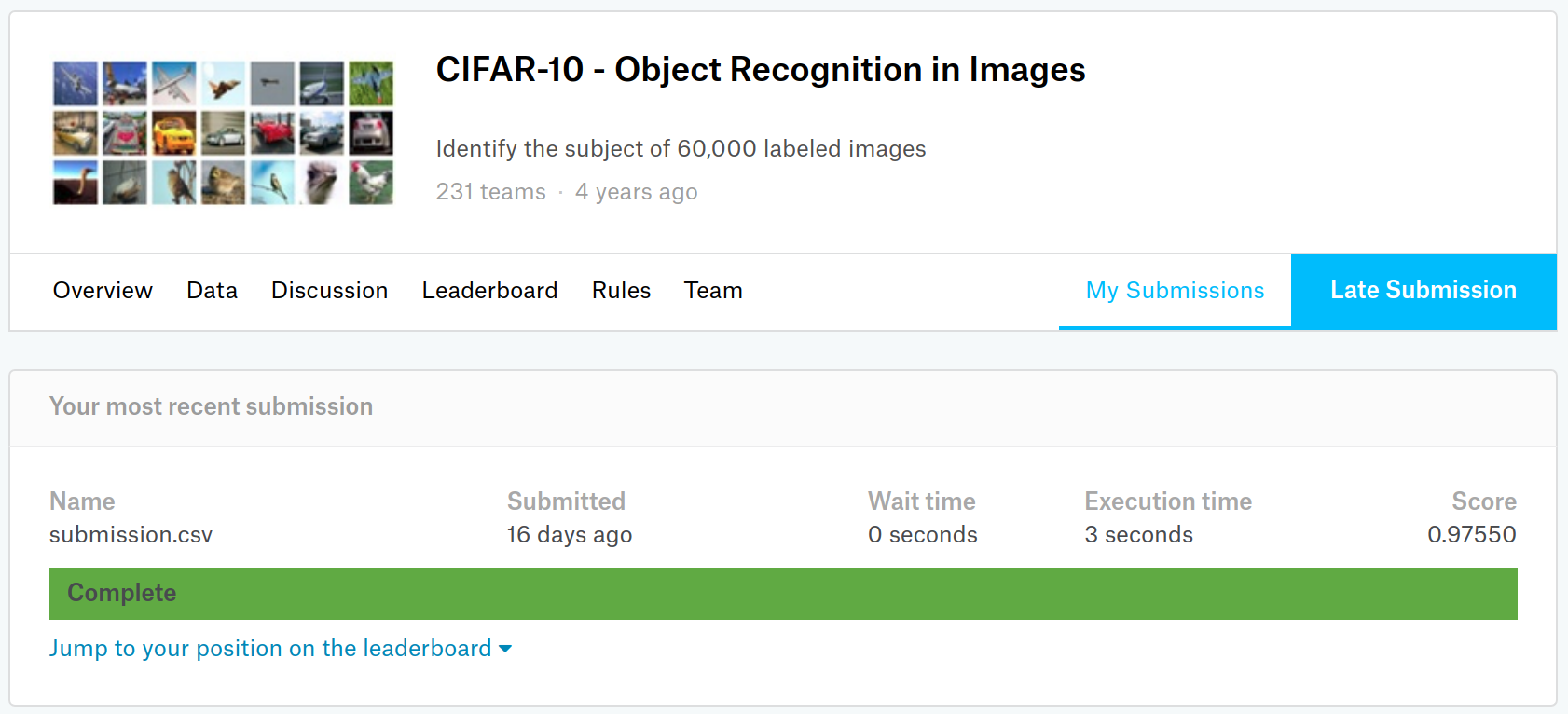

Essential Results

Trained with "mixup" (https://arxiv.org/abs/1710.09412), the best results of this model on CIFAR-10 and CIFAR-100 are 97.55% and 84.38% accuracy, respectively.
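For readers unfamiliar with mixup, the augmentation blends random pairs of training examples and their labels with a weight drawn from a Beta distribution. The sketch below is a generic NumPy illustration of that idea, not the training code behind the numbers above. The function name, the default `alpha`, and the use of one weight per batch are assumptions, and it requires Python 3 with a recent NumPy.

```python
import numpy as np


def mixup_batch(x, y_onehot, alpha=1.0, rng=None):
    """Blend each example with a randomly chosen partner from the same batch.

    x: (N, ...) inputs; y_onehot: (N, K) one-hot labels.
    Returns the mixed inputs, mixed soft labels, and the mixing weight.
    """
    rng = np.random.default_rng() if rng is None else rng
    lam = rng.beta(alpha, alpha)        # mixing weight in [0, 1]
    perm = rng.permutation(x.shape[0])  # partner index for every example
    x_mix = lam * x + (1.0 - lam) * x[perm]
    y_mix = lam * y_onehot + (1.0 - lam) * y_onehot[perm]
    return x_mix, y_mix, lam


# Toy batch: 4 "images" of 6 values each, 3 classes.
x = np.arange(24, dtype=float).reshape(4, 6)
y = np.eye(3)[[0, 1, 2, 0]]
x_mix, y_mix, lam = mixup_batch(x, y, alpha=1.0, rng=np.random.default_rng(0))
print(x_mix.shape, y_mix.shape)  # (4, 6) (4, 3)
```

Each mixed label row still sums to 1, so it can be used directly with a cross-entropy loss that accepts soft targets.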

The test result on Kaggle: CIFAR-10 - Object Recognition in Images

Inner-Imaging Examples & Channel-wise Attention Outputs
