MiniWorld (gym-miniworld)

Introduction

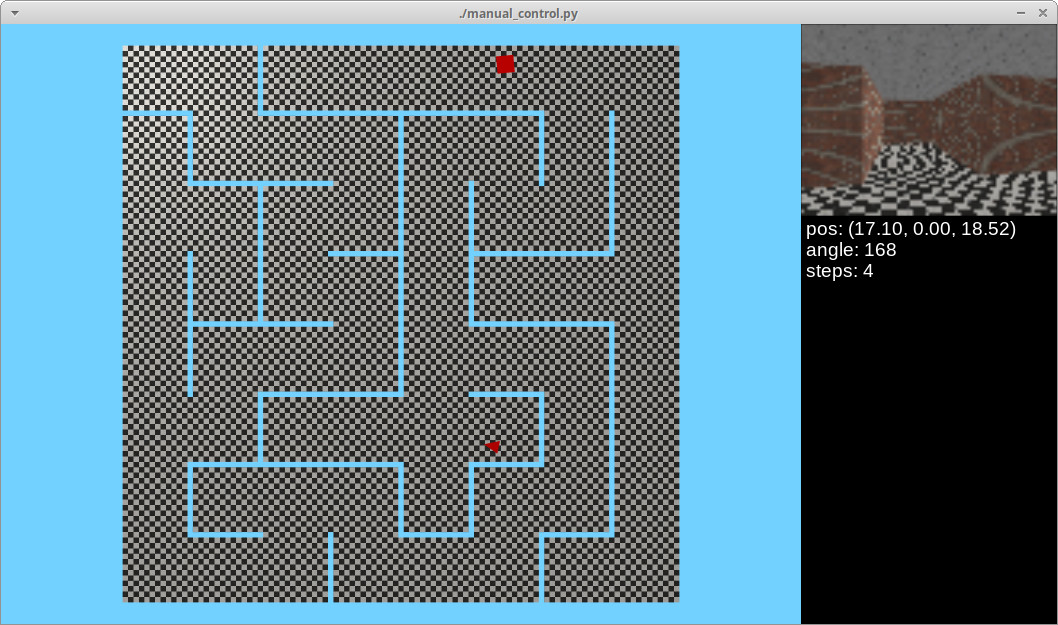

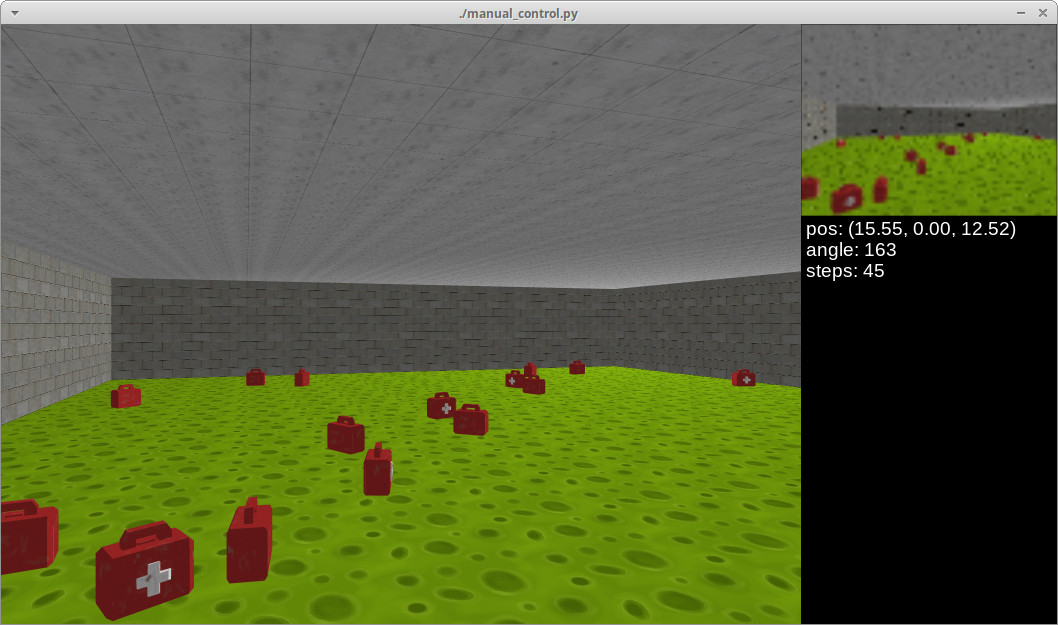

MiniWorld is a minimalistic 3D interior environment simulator for reinforcement learning and robotics research. It can simulate environments with rooms, doors, hallways, and various objects (e.g., office and home environments, mazes). MiniWorld can be seen as an alternative to VizDoom or DMLab. It is written entirely in Python and designed to be easily modified or extended.

Features:

- Few dependencies, less likely to break, easy to install

- Easy to create your own levels, or modify existing ones

- Good performance, high frame rate, support for multiple processes

- Lightweight, small download, low memory requirements

- Provided under a permissive MIT license

- Comes with a variety of free 3D models and textures

- Fully observable top-down/overhead view available

- Domain randomization support, for sim-to-real transfer

- Ability to display alphanumeric strings on walls

- Ability to produce depth maps matching camera images (RGB-D)
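To make the domain randomization feature more concrete, here is a minimal sketch of the general idea: simulator parameters are resampled within fixed ranges at the start of every episode, so a policy cannot overfit to one exact setting. The parameter names and ranges below are hypothetical and chosen for illustration only; they are not MiniWorld's actual configuration or API.

```python
import random

# Hypothetical parameter ranges (illustrative only, not MiniWorld's real settings).
PARAM_RANGES = {
    "light_intensity": (0.5, 1.5),
    "camera_fov_deg": (55.0, 65.0),
    "forward_step_m": (0.13, 0.17),
}


def sample_episode_params(rng, ranges=PARAM_RANGES):
    """Draw one value per parameter, uniformly within its (low, high) range."""
    return {name: rng.uniform(low, high) for name, (low, high) in ranges.items()}


rng = random.Random(0)  # seeded so that runs are reproducible
for episode in range(3):
    # A fresh set of parameters would be applied before each episode starts.
    params = sample_episode_params(rng)
    print(episode, params)
```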

Limitations:

- Graphics are basic, nowhere near photorealism

- Physics are very basic, not sufficient for robot arms or manipulation

Please use this BibTeX entry if you want to cite this repository in your publications:

    @misc{gym_miniworld,
      author = {Chevalier-Boisvert, Maxime},
      title = {gym-miniworld environment for OpenAI Gym},
      year = {2018},
      publisher = {GitHub},
      journal = {GitHub repository},
      howpublished = {\url{https://github.com/maximecb/gym-miniworld}},
    }

List of publications & submissions using MiniWorld (please open a pull request to add missing entries):

- Rank the Episodes: A Simple Approach for Exploration in Procedurally-Generated Environments (Texas A&M University, Kuai Inc., ICLR 2021)

- DeepAveragers: Offline Reinforcement Learning by Solving Derived Non-Parametric MDPs (NeurIPS Offline RL Workshop, Oct 2020)

- Explore then Execute: Adapting without Rewards via Factorized Meta-Reinforcement Learning (Stanford University, Aug 2020)

- Pre-trained Word Embeddings for Goal-conditional Transfer Learning in Reinforcement Learning (University of Antwerp, Jul 2020, ICML 2020 LaReL Workshop)

- Temporal Abstraction with Interest Functions (Mila, Feb 2020, AAAI 2020)

- Avoidance Learning Using Observational Reinforcement Learning (Mila, McGill, Sept 2019)

- Visual Hindsight Experience Replay (Georgia Tech, UC Berkeley, Jan 2019)

This simulator was created as part of work done at Mila.

Installation

Requirements:

- Python 3.5+

- OpenAI Gym

- NumPy

- Pyglet (OpenGL 3D graphics)

- GPU for 3D graphics acceleration (optional)

You can install all the dependencies with pip3:

    git clone https://github.com/maximecb/gym-miniworld.git
    cd gym-miniworld
    pip3 install -e .

If you run into any problems, please take a look at the troubleshooting guide. If you're still stuck, please open an issue on this repository to let us know something is wrong.
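As a first troubleshooting step, it can help to confirm that the Python packages listed under Requirements are visible to the interpreter you are using. The following is a minimal sketch using only the standard library; `missing_modules` is a hypothetical helper written for this example and is not part of MiniWorld.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Import names of the Python packages listed under Requirements.
REQUIRED = ["gym", "numpy", "pyglet"]

missing = missing_modules(REQUIRED)
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules were found.")
```

A module that is found can still fail at import time (for example, if OpenGL is unavailable), so this check only rules out the simplest installation problems.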

Usage

Testing

There is a simple UI application that lets you control the simulation or real robot manually. The manual_control.py application launches the Gym environment, displays camera images, and sends actions (keyboard commands) back to the simulator or robot. The --env-name argument specifies which environment to load. See the list of available environments for more information.

    ./manual_control.py --env-name MiniWorld-Hallway-v0

    # Display an overhead view of the environment
    ./manual_control.py --env-name MiniWorld-Hallway-v0 --top_view
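For readers writing their own launcher, the two flags shown above could be parsed along the following lines. This is a sketch under assumptions, not the actual source of manual_control.py: the default value and help strings are invented for illustration.

```python
import argparse


def parse_args(argv=None):
    """Parse the two command-line flags used in the commands above."""
    parser = argparse.ArgumentParser(description="Manual control sketch")
    # The default below is an assumption made for this example.
    parser.add_argument("--env-name", default="MiniWorld-Hallway-v0",
                        help="name of the Gym environment to load")
    parser.add_argument("--top_view", action="store_true",
                        help="show an overhead view instead of the agent camera")
    return parser.parse_args(argv)


args = parse_args(["--env-name", "MiniWorld-Hallway-v0", "--top_view"])
print(args.env_name, args.top_view)
```

Note that argparse exposes `--env-name` as `args.env_name`, since hyphens are converted to underscores in attribute names.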

There is also a script to run automated tests (run_tests.py) and a script to gather performance metrics (benchmark.py).
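To illustrate what gathering a performance metric can look like, here is a minimal sketch that measures how many steps per second an environment sustains. It is not the code of benchmark.py. It assumes the classic Gym interface, in which `reset()` returns an observation and `step()` returns `(obs, reward, done, info)`, and it uses a hypothetical `StubEnv` so that it runs without MiniWorld installed.

```python
import time


class StubEnv:
    """Stand-in with a classic Gym-style interface so the sketch runs anywhere."""

    def __init__(self, episode_length=50):
        self.episode_length = episode_length
        self.steps = 0

    def reset(self):
        self.steps = 0
        return 0  # placeholder observation

    def step(self, action):
        self.steps += 1
        done = self.steps >= self.episode_length
        return 0, 0.0, done, {}


def measure_fps(env, num_steps, action=0):
    """Step the environment num_steps times and return steps per second."""
    env.reset()
    start = time.perf_counter()
    for _ in range(num_steps):
        _, _, done, _ = env.step(action)
        if done:
            env.reset()  # start a new episode so stepping can continue
    elapsed = time.perf_counter() - start
    return num_steps / elapsed if elapsed > 0 else float("inf")


print(f"{measure_fps(StubEnv(), 10_000):.0f} steps/s")
```

To time a real environment, replace `StubEnv()` with an environment created through Gym, for example `gym.make("MiniWorld-Hallway-v0")` after importing `gym` and `gym_miniworld`.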

Reinforcement Learning

To train a reinforcement learning agent, you can use the code provided in the /pytorch-a2c-ppo-acktr directory. This code is a modified version of the RL code found in the original pytorch-a2c-ppo-acktr repository. I recommend using the PPO algorithm with 16 or more processes. A sample command to launch training is:

python3 main.py --algo ppo --num-frames 5000000 --num-processes 16 --num-steps 80 --lr 0.00005 --env-name MiniWorld-Hallway-v0
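To get a feel for what these numbers mean, the sketch below works out how many frames go into each policy update and how many updates the frame budget allows. It assumes that every update consumes one rollout of `--num-steps` frames from each of the `--num-processes` workers, which is the usual arrangement in A2C/PPO implementations but is not stated in this README. `training_budget` is a hypothetical helper written for this example.

```python
def training_budget(num_frames, num_processes, num_steps):
    """Return (frames per update, number of whole updates) for a frame budget."""
    # Assumption: one update uses num_steps frames from each parallel process.
    frames_per_update = num_processes * num_steps
    num_updates = num_frames // frames_per_update
    return frames_per_update, num_updates


# Values taken from the sample command above.
frames_per_update, num_updates = training_budget(5_000_000, 16, 80)
print(frames_per_update)  # 1280
print(num_updates)        # 3906
```

Under this assumption, doubling the number of processes doubles the batch of experience per update and halves the number of updates for the same frame budget.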

Then, to visualize the results of training, you can run the following command. Note that you can do this while the training process is still running. Also note that if you are running this through SSH, you will need to enable X forwarding to get a display:

python3 enjoy.py --env-name MiniWorld-Hallway-v0 --load-dir trained_models/ppo