Phylliade / Ikpy

IKPy

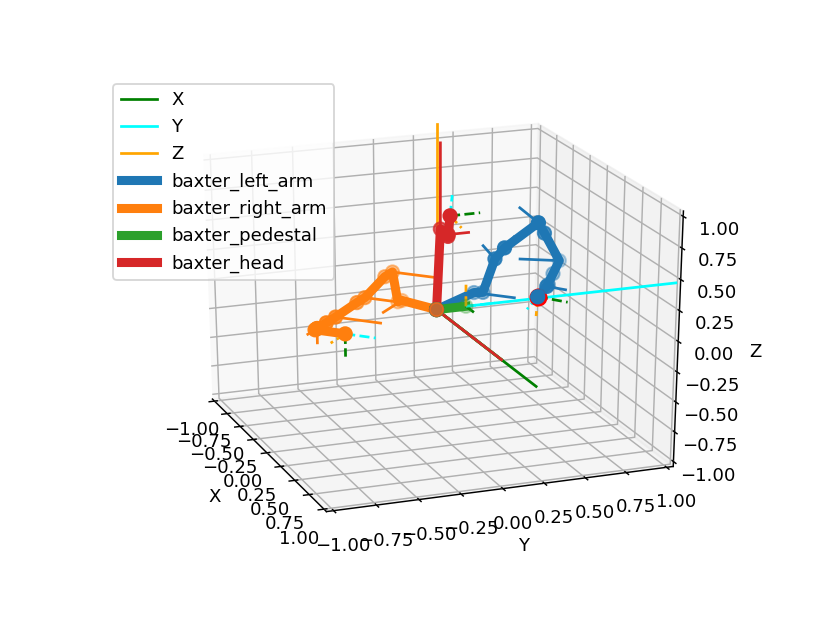

Demo

Live demos of what IKPy can do (click on the image below to see the video):

Also, a presentation of IKPy: Presentation.

Features

With IKPy, you can:

- Compute the Inverse Kinematics of every existing robot.

- Compute the Inverse Kinematics in position, orientation, or both.

- Define your kinematic chain using arbitrary representations: DH (Denavit–Hartenberg), URDF, custom...

- Automatically import a kinematic chain from a URDF file.

- Use pre-configured robots, such as Baxter or the poppy-torso.

- Get precise and fast results: IKPy is precise up to 7 digits (the only limitation being the precision of your underlying model), and a complete IK computation takes from 7 ms to 50 ms, depending on the precision you ask for.

- Plot your kinematic chain: no need to use a real robot (or a simulator) to test your algorithms!

- Define your own Inverse Kinematics methods.

- Use utilities to parse and analyze URDF files.

Moreover, IKPy is a pure-Python library: the install is a matter of seconds, and no compiling is required.
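To give an idea of what a URDF file contains and why a kinematic chain can be imported from it, here is a minimal sketch that reads the joints of a tiny hand-written URDF using only Python's standard library. It does not use IKPy and does not reflect how IKPy parses URDF internally: the robot `toy_arm` and the helper `list_joints` are hypothetical names made up for this illustration.

```python
import xml.etree.ElementTree as ET

# A tiny hand-written URDF, used only for this illustration (hypothetical robot).
URDF = """
<robot name="toy_arm">
  <link name="base"/>
  <link name="upper_arm"/>
  <link name="forearm"/>
  <joint name="shoulder" type="revolute">
    <parent link="base"/>
    <child link="upper_arm"/>
    <origin xyz="0 0 0.1" rpy="0 0 0"/>
    <axis xyz="0 0 1"/>
  </joint>
  <joint name="elbow" type="revolute">
    <parent link="upper_arm"/>
    <child link="forearm"/>
    <origin xyz="0.3 0 0" rpy="0 0 0"/>
    <axis xyz="0 1 0"/>
  </joint>
</robot>
"""


def list_joints(urdf_text):
    """Return one dict per <joint> element: name, type, parent, child, offset."""
    root = ET.fromstring(urdf_text.strip())
    joints = []
    for joint in root.findall("joint"):
        origin = joint.find("origin")
        joints.append({
            "name": joint.get("name"),
            "type": joint.get("type"),
            "parent": joint.find("parent").get("link"),
            "child": joint.find("child").get("link"),
            # Translation from the parent link frame to the joint frame.
            "xyz": [float(v) for v in origin.get("xyz").split()],
        })
    return joints


joints = list_joints(URDF)
for j in joints:
    print(j["name"], ":", j["parent"], "->", j["child"], j["xyz"])
```

Each joint links a parent to a child, so following these parent/child pairs from the base to the tip yields the ordered chain of links that a kinematics library works on.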

Installation

You have three options:

- From PyPI (recommended) - simply run:

  pip install ikpy

  If you intend to plot your robot, you can install the plotting dependencies (mainly matplotlib):

  pip install 'ikpy[plot]'

- If you work with Anaconda, there's also a Conda package of IKPy:

  conda install -c https://conda.anaconda.org/phylliade ikpy

- From source - first download and extract the archive, then run:

  pip install ./

  NB: You must have the proper rights to execute this command.

Quickstart

Follow this IPython notebook.
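If you are new to the topic, the relationship between forward and inverse kinematics can be sketched without any library. The example below is a warm-up on a planar two-link arm, solved in closed form with the standard library only. It is not IKPy's API and not the method IKPy uses: the function names, link lengths, and target are all assumptions chosen for illustration.

```python
import math


def forward_kinematics(theta1, theta2, l1=1.0, l2=1.0):
    """Return the (x, y) position of the tip of a planar two-link arm."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def inverse_kinematics(x, y, l1=1.0, l2=1.0):
    """Return one (theta1, theta2) pair that places the tip at (x, y)."""
    # Law of cosines gives the elbow angle from the distance to the target.
    cos_t2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if not -1.0 <= cos_t2 <= 1.0:
        raise ValueError("target is out of reach")
    theta2 = math.acos(cos_t2)
    # Shoulder angle: direction to the target minus the offset due to link 2.
    theta1 = math.atan2(y, x) - math.atan2(
        l2 * math.sin(theta2), l1 + l2 * math.cos(theta2)
    )
    return theta1, theta2


target = (1.2, 0.5)                     # hypothetical target position
joints = inverse_kinematics(*target)    # joint angles, in radians
reached = forward_kinematics(*joints)   # should land back on the target
print("joints:", joints)
print("reached:", reached)
```

Feeding the computed angles back into the forward kinematics is a simple way to check an IK result. This toy arm has a second, mirrored solution (negative `theta2`) and a closed-form answer; longer chains in 3D generally do not, which is where a dedicated library becomes useful.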

Guides and Tutorials

Go to the wiki, which introduces the basic concepts of IKPy.

API Documentation

Extensive documentation of the API can be found here.

Dependencies and compatibility

The library works with both Python 2.7 and Python 3.x. It requires numpy and scipy.

sympy is highly recommended for fast hybrid computations, which is why it is installed by default.

matplotlib is optional: it is used to plot your models (in 3D).

Contributing

IKPy is designed to be easily customisable: you can add your own IK methods or robot representations (such as DH-Parameters) using a dedicated developer API.

Contributions are welcome: if you have an awesome patented (but also open-source!) IK method, don't hesitate to propose adding it to the library!

Links

- If performance is your main concern, aversive++ has an inverse kinematics module written in C++, which works the same way IKPy does.