gcunhase / Nlpmetrics

License: MIT

Python code for various NLP metrics



About

Natural Language Processing Performance Metrics [ppt]

Contents

Requirements • How to Use • Notebooks • Quick Notes • How to Cite

Requirements

Tested on Python 2.7

pip install -r requirements.txt

How to Use

- Run the test script (currently only MT metrics are supported):

python test/test_mt_text_score.py

Notebooks

| Metric | Application | Notebook | |
|---|---|---|---|
| BLEU | Machine Translation | Jupyter | Colab |
| GLEU (Google-BLEU) | Machine Translation | Jupyter | Colab |
| WER (Word Error Rate) | Transcription Accuracy, Machine Translation | Jupyter | Colab |

- TODO:

- Generalized BLEU (?), METEOR, ROUGE, CIDEr

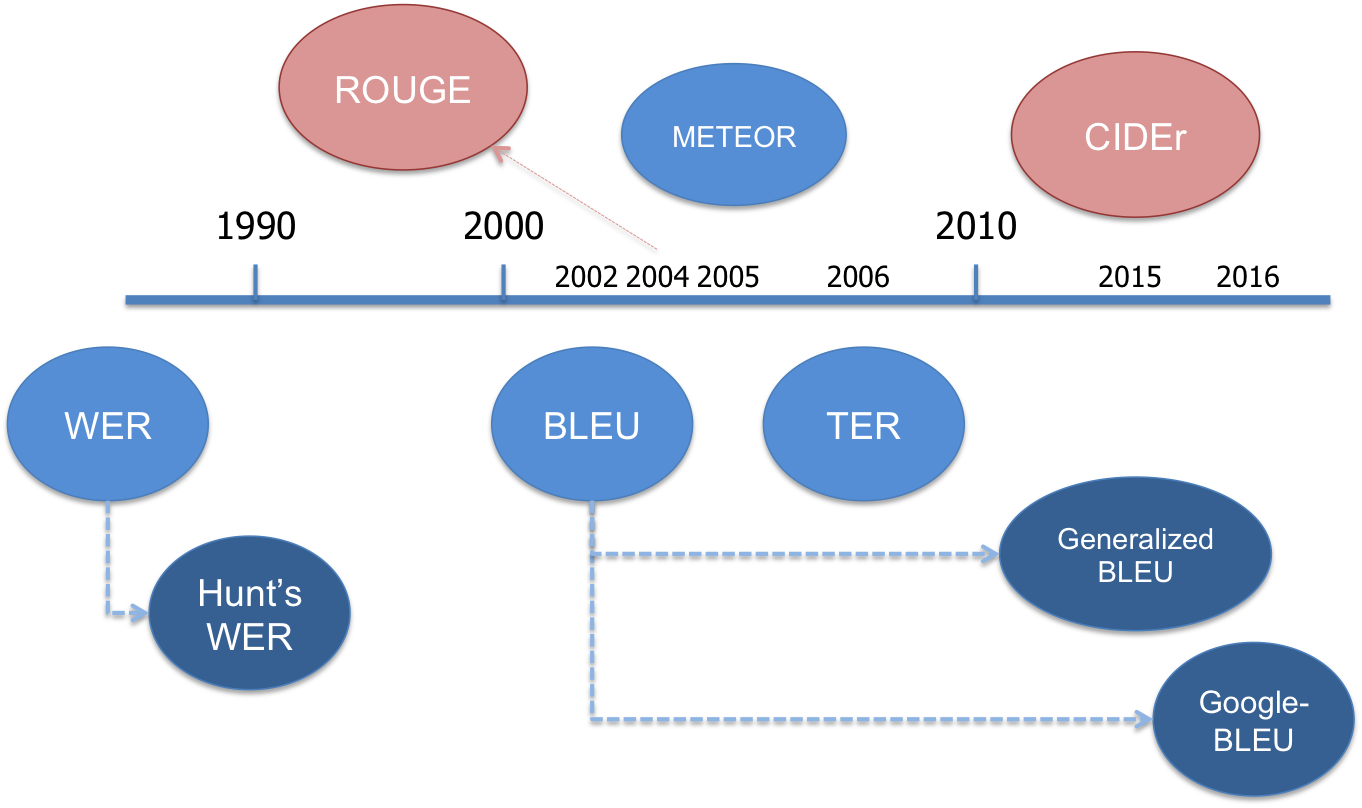

Evaluation Metrics: Quick Notes

Average precision

- Macro: average of sentence scores

- Micro: corpus-level score (sums the numerators and denominators over all hypothesis-reference(s) pairs before dividing)
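A minimal Python 3 sketch of the macro/micro distinction, using clipped n-gram precision as the per-sentence score. That choice of score and the helper names (`macro_precision`, `micro_precision`) are illustrative assumptions, not this repository's API:

```python
from collections import Counter
from typing import List, Sequence, Tuple


def ngrams(tokens: List[str], n: int) -> Counter:
    """Count the n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def clipped_counts(hyp: List[str], ref: List[str], n: int) -> Tuple[int, int]:
    """Return (matching n-grams, total hypothesis n-grams) for one sentence pair."""
    hyp_counts = ngrams(hyp, n)
    ref_counts = ngrams(ref, n)
    # each hypothesis n-gram counts at most as often as it occurs in the reference
    matches = sum(min(c, ref_counts[g]) for g, c in hyp_counts.items())
    return matches, sum(hyp_counts.values())


def macro_precision(pairs: Sequence[Tuple[List[str], List[str]]], n: int = 1) -> float:
    """Macro: compute a precision per sentence, then average the sentence scores."""
    scores = []
    for hyp, ref in pairs:
        num, den = clipped_counts(hyp, ref, n)
        scores.append(num / den if den else 0.0)
    return sum(scores) / len(scores)


def micro_precision(pairs: Sequence[Tuple[List[str], List[str]]], n: int = 1) -> float:
    """Micro: sum numerators and denominators over the corpus, then divide once."""
    total_num = total_den = 0
    for hyp, ref in pairs:
        num, den = clipped_counts(hyp, ref, n)
        total_num += num
        total_den += den
    return total_num / total_den if total_den else 0.0
```

For example, with the pairs ("the cat" / "the cat") and ("a dog sat here now" / "the cat sat"), unigram macro precision is (1.0 + 0.2) / 2 = 0.6, while micro precision is 3 / 7 ≈ 0.43: the long, poorly matched sentence carries more weight in the micro average.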

Machine Translation


BLEU (BiLingual Evaluation Understudy)

- Papineni 2002

- 'Measures how many words overlap in a given translation when compared to a reference translation, giving higher scores to sequential words.' (Precision-based: clipped n-gram precision combined with a brevity penalty.)

- Limitation:

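- Does not distinguish between different types of errors (insertions, substitutions) and does not account for synonyms, paraphrases or stems.

- Designed to be a corpus measure, so it has undesirable properties when used for single sentences (e.g., without smoothing, a sentence with no matching 4-gram scores 0).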
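The sketch below (Python 3, standard library only) follows the Papineni et al. 2002 definition as commonly described: clipped n-gram precisions for n = 1..4, their geometric mean, and a brevity penalty computed from the closest reference length. It is an unsmoothed illustration with hypothetical function names, not the code in `test/test_mt_text_score.py`; a sentence score is simply the corpus score of a one-pair corpus.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def ngrams(tokens: List[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(pairs: Sequence[Tuple[List[str], List[List[str]]]], max_n: int = 4) -> float:
    """pairs: (hypothesis tokens, list of reference token lists)."""
    matches = [0] * max_n  # clipped n-gram matches per order, summed over the corpus
    totals = [0] * max_n   # hypothesis n-gram counts per order, summed over the corpus
    hyp_len = ref_len = 0
    for hyp, refs in pairs:
        hyp_len += len(hyp)
        # effective reference length: closest to the hypothesis length (ties -> shorter)
        ref_len += min((abs(len(r) - len(hyp)), len(r)) for r in refs)[1]
        for n in range(1, max_n + 1):
            hyp_counts = ngrams(hyp, n)
            # clip each hypothesis n-gram by its maximum count in any single reference
            max_ref = Counter()
            for r in refs:
                for g, c in ngrams(r, n).items():
                    max_ref[g] = max(max_ref[g], c)
            matches[n - 1] += sum(min(c, max_ref[g]) for g, c in hyp_counts.items())
            totals[n - 1] += sum(hyp_counts.values())
    if hyp_len == 0 or min(matches) == 0:
        return 0.0  # no smoothing: any zero n-gram precision makes the geometric mean 0
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    brevity_penalty = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return brevity_penalty * math.exp(log_precision)


def sentence_bleu(hyp: List[str], refs: List[List[str]], max_n: int = 4) -> float:
    return corpus_bleu([(hyp, refs)], max_n)
```

Because there is no smoothing, `sentence_bleu("the cat sat".split(), ["the cat sat".split()])` returns 0.0 even for a perfect match (a 3-token sentence has no 4-grams). This is one concrete form of the sentence-level weakness listed above.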


GLEU (Google-BLEU)

- Wu et al. 2016

- Minimum of recall and precision, computed over all 1-, 2-, 3- and 4-grams

- Recall: (number of matching n-grams) / (number of total n-grams in the target)

- Precision: (number of matching n-grams) / (number of total n-grams in generated sequence)

- Correlates well with BLEU at the corpus level, but does not have BLEU's drawbacks when used as a per-sentence reward objective.

- Not to be confused with Generalized Language Evaluation Understanding or Generalized BLEU, also known as GLEU

- Napoles et al. 2015's ACL paper: Ground Truth for Grammatical Error Correction Metrics

- Napoles et al. 2016: GLEU Without Tuning

- Minor adjustment required as the number of references increases.

- A simple variant of BLEU that hews much more closely to human judgements.

- "In MT, an untranslated word or phrase is almost always an error, but in GEC, this is not the case."

- GLEU: "computes n-gram precisions over the reference but assigns more weight to n-grams that have been correctly changed from the source."

- Python code
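The Google-BLEU definition from Wu et al. 2016 (not the grammatical-error-correction GLEU of Napoles et al.) can be sketched in Python 3 as follows. Matching n-grams are counted with clipping and pooled across all orders from 1 to 4 before dividing; the function names are hypothetical.

```python
from collections import Counter
from typing import List


def all_ngrams(tokens: List[str], min_n: int = 1, max_n: int = 4) -> Counter:
    """Count every n-gram of order min_n..max_n in one Counter."""
    counts = Counter()
    for n in range(min_n, max_n + 1):
        for i in range(len(tokens) - n + 1):
            counts[tuple(tokens[i:i + n])] += 1
    return counts


def google_gleu(hyp: List[str], ref: List[str], min_n: int = 1, max_n: int = 4) -> float:
    hyp_counts = all_ngrams(hyp, min_n, max_n)
    ref_counts = all_ngrams(ref, min_n, max_n)
    matches = sum((hyp_counts & ref_counts).values())  # '&' keeps the minimum count per n-gram
    hyp_total = sum(hyp_counts.values())
    ref_total = sum(ref_counts.values())
    if hyp_total == 0 or ref_total == 0:
        return 0.0
    precision = matches / hyp_total  # matching n-grams / n-grams in generated sequence
    recall = matches / ref_total     # matching n-grams / n-grams in target
    return min(precision, recall)
```

Unlike unsmoothed BLEU, a perfect 3-token match scores 1.0 here, and "the cat" against "the cat sat" scores min(3/3, 3/6) = 0.5.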


WER (Word Error Rate)

- Levenshtein distance (edit distance) at the word level: the minimum number of edits (insertions, deletions or substitutions) required to change the hypothesis sentence into the reference.

- Range: 0 (when ref = hyp) or greater; there is no upper bound, since an Automatic Speech Recognizer (ASR) can insert an arbitrary number of words

- $ WER = \frac{S+D+I}{N} = \frac{S+D+I}{S+D+C} $

- S: number of substitutions, D: number of deletions, I: number of insertions, C: number of correct words, N: number of words in the reference ($N=S+D+C$)

- WAcc (Word Accuracy) or Word Recognition Rate (WRR): $1 - WER$

- Limitation: provides no details on the nature of translation errors

- Different errors are treated equally, even though they might influence the outcome differently (being more disruptive, or harder or easier to correct).

- If you look at the formula, there's no distinction between a substitution error and a deletion followed by an insertion error.

- Possible solution proposed by Hunt (1990):

- Use of a weighted measure

- $ WER = \frac{S+0.5D+0.5I}{N} $

- Problem:

- The metric is used to compare systems, so it is unclear whether Hunt's formula can be used to assess the performance of a single system

- It is also unclear how effective this measure is in helping a user with error correction

- See more info
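A minimal Python 3 sketch of WER and of Hunt's weighted variant: a word-level Levenshtein table is filled, then backtraced to recover S, D, I and C. Function names are hypothetical. Note that several minimum-cost alignments can exist; they share the same S + D + I total (so plain WER is unaffected), but the split between edit types depends on the tie-breaking order chosen here, which can change the weighted score.

```python
from typing import List, Tuple


def align_counts(ref: List[str], hyp: List[str]) -> Tuple[int, int, int, int]:
    """Return (S, D, I, C) from one minimum-edit alignment of hyp against ref."""
    rows, cols = len(ref) + 1, len(hyp) + 1
    # cost[i][j]: minimum edits between ref[:i] and hyp[:j]
    cost = [[0] * cols for _ in range(rows)]
    for i in range(1, rows):
        cost[i][0] = i  # reference words missing from the hypothesis: deletions
    for j in range(1, cols):
        cost[0][j] = j  # extra hypothesis words: insertions
    for i in range(1, rows):
        for j in range(1, cols):
            diag = cost[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1])
            cost[i][j] = min(diag, cost[i - 1][j] + 1, cost[i][j - 1] + 1)
    # backtrace, preferring match/substitution, then deletion, then insertion
    s = d = ins = c = 0
    i, j = len(ref), len(hyp)
    while i > 0 or j > 0:
        if i > 0 and j > 0 and cost[i][j] == cost[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1]):
            if ref[i - 1] == hyp[j - 1]:
                c += 1
            else:
                s += 1
            i, j = i - 1, j - 1
        elif i > 0 and cost[i][j] == cost[i - 1][j] + 1:
            d += 1
            i -= 1
        else:
            ins += 1
            j -= 1
    return s, d, ins, c


def wer(ref: List[str], hyp: List[str]) -> float:
    if not ref:
        raise ValueError("reference must contain at least one word")
    s, d, i, c = align_counts(ref, hyp)
    return (s + d + i) / (s + d + c)  # N = S + D + C


def hunt_wer(ref: List[str], hyp: List[str]) -> float:
    """Hunt (1990): deletions and insertions weighted by 0.5."""
    if not ref:
        raise ValueError("reference must contain at least one word")
    s, d, i, c = align_counts(ref, hyp)
    return (s + 0.5 * d + 0.5 * i) / (s + d + c)
```

For ref "the cat sat on the mat" and hyp "the cat sit on mat", the alignment has S = 1, D = 1, I = 0, C = 4, so WER = 2/6 and Hunt's WER = 1.5/6. A one-word reference against a three-word hypothesis gives WER = 3.0, illustrating the missing upper bound.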


METEOR (Metric for Evaluation of Translation with Explicit ORdering)

- Banerjee and Lavie 2005's paper: METEOR: An Automatic Metric for MT Evaluation with Improved Correlation with Human Judgments

- About: "based on the harmonic mean of unigram precision and recall, with recall weighted higher than precision"

- Includes: exact word, stem and synonym matching

- Designed to fix some of the problems found in the BLEU metric, while also producing good correlation with human judgement at the sentence or segment level (unlike BLEU which seeks correlation at the corpus level).

- Python jar wrapper
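A simplified Python 3 sketch of the original METEOR scoring formula (Banerjee and Lavie 2005): Fmean = 10PR / (R + 9P), a fragmentation penalty of 0.5 · (chunks / matches)^3, and score = Fmean · (1 - penalty). Assumptions: only exact word matches are used (no stem or synonym modules), and the alignment is greedy rather than the minimum-crossing alignment METEOR actually selects, so reordered sentences may get more chunks than the real metric would count. For real evaluations, prefer the jar wrapper mentioned above.

```python
from typing import List, Tuple


def align_exact(hyp: List[str], ref: List[str]) -> List[Tuple[int, int]]:
    """Greedy exact-match alignment: each hyp word takes the first unused identical ref word."""
    used = set()
    pairs = []
    for i, word in enumerate(hyp):
        for j, ref_word in enumerate(ref):
            if j not in used and ref_word == word:
                used.add(j)
                pairs.append((i, j))
                break
    return pairs  # sorted by hypothesis position


def count_chunks(pairs: List[Tuple[int, int]]) -> int:
    """A chunk is a run of matches adjacent in both the hypothesis and the reference."""
    chunks = 0
    prev = None
    for i, j in pairs:
        if prev is None or i != prev[0] + 1 or j != prev[1] + 1:
            chunks += 1
        prev = (i, j)
    return chunks


def meteor_exact(hyp: List[str], ref: List[str]) -> float:
    pairs = align_exact(hyp, ref)
    m = len(pairs)
    if m == 0:
        return 0.0
    precision = m / len(hyp)
    recall = m / len(ref)
    fmean = 10 * precision * recall / (recall + 9 * precision)  # recall weighted 9x
    penalty = 0.5 * (count_chunks(pairs) / m) ** 3
    return fmean * (1 - penalty)
```

Even an identical 6-word sentence scores slightly below 1 (1 - 0.5 · (1/6)^3), because the penalty never reaches zero.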


TER (Translation Edit Rate)

- Snover et al. 2006's paper: A study of translation edit rate with targeted human annotation

- Number of edits (word insertions, deletions, substitutions, and shifts of word sequences) required to make a machine translation exactly match the closest reference translation in fluency and semantics

- $ TER = \frac{E}{R} $, where E is the minimum number of edits and R is the average length of the reference translations

- It is generally preferred over BLEU for estimating sentence post-editing effort. Source.

- PyTER

- char-TER: character level TER
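Full TER also counts shifts of word sequences, found with a greedy search (Snover et al. 2006), which is what tools such as PyTER implement. The Python 3 sketch below deliberately leaves shifts out: it uses the minimum word edit distance over the references divided by the average reference length. Since allowing shifts can only lower the edit count, this shift-free value is an upper bound on TER; the function names are hypothetical.

```python
from typing import List


def word_edit_distance(hyp: List[str], ref: List[str]) -> int:
    """Word-level Levenshtein distance (insertions, deletions, substitutions), two-row DP."""
    prev = list(range(len(ref) + 1))
    for i, h in enumerate(hyp, start=1):
        curr = [i] + [0] * len(ref)
        for j, r in enumerate(ref, start=1):
            curr[j] = min(prev[j] + 1,             # drop hypothesis word h
                          curr[j - 1] + 1,         # add reference word r
                          prev[j - 1] + (h != r))  # match or substitute
        prev = curr
    return prev[-1]


def ter_without_shifts(hyp: List[str], refs: List[List[str]]) -> float:
    """E / R with E = fewest edits to the closest reference, R = average reference length."""
    min_edits = min(word_edit_distance(hyp, r) for r in refs)
    avg_ref_len = sum(len(r) for r in refs) / len(refs)
    return min_edits / avg_ref_len
```

For a hypothesis whose clauses are simply swapped ("on the mat the cat sat" against "the cat sat on the mat"), real TER needs a single shift, whereas this shift-free version charges several edits.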

Summarization


ROUGE (Recall-Oriented Understudy for Gisting Evaluation)

- Lin 2004: ROUGE: A Package for Automatic Evaluation of Summaries

- Package for automatic evaluation of summaries
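Two common ROUGE variants from Lin 2004 can be sketched in Python 3. ROUGE-N is recall-oriented: clipped matches of reference n-grams divided by the total number of reference n-grams, summed over all references. ROUGE-L is based on the longest common subsequence (LCS). Reporting ROUGE-L as F1 (β = 1) is an assumption made here for simplicity; the function names are hypothetical, not the API of any ROUGE package.

```python
from collections import Counter
from typing import List


def ngram_counts(tokens: List[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate: List[str], references: List[List[str]], n: int = 2) -> float:
    """ROUGE-N recall: matched reference n-grams / total reference n-grams."""
    cand = ngram_counts(candidate, n)
    matched = total = 0
    for ref in references:
        ref_counts = ngram_counts(ref, n)
        matched += sum((ref_counts & cand).values())  # clipped co-occurrence counts
        total += sum(ref_counts.values())
    return matched / total if total else 0.0


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence, one DP row at a time."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: List[str], reference: List[str]) -> float:
    lcs = lcs_length(candidate, reference)
    if lcs == 0:
        return 0.0
    precision = lcs / len(candidate)
    recall = lcs / len(reference)
    return 2 * precision * recall / (precision + recall)
```

For the candidate "the cat sat on the mat" and the reference "the cat is on the mat", ROUGE-1 = 5/6, ROUGE-2 = 3/5, and the LCS "the cat on the mat" gives ROUGE-L F1 = 5/6.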

Image Caption Quality


CIDEr (Consensus-based Image Description Evaluation)

- Vedantam et al. 2015: CIDEr: Consensus-based Image Description Evaluation

- Used as a measurement for image caption quality
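A compact Python 3 sketch of plain CIDEr, following the description in Vedantam et al. 2015: each sentence becomes a TF-IDF weighted n-gram vector, where the IDF is computed over the reference sets of all images. The candidate's cosine similarity to each of its references is averaged, and the per-order scores for n = 1..4 are averaged with uniform weights. Assumptions: document frequencies are floored at 1 so unseen n-grams do not divide by zero, and the CIDEr-D refinements used in common caption-evaluation code (count clipping, a length penalty) are omitted. The names are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def ngram_counts(tokens: List[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def tfidf_vector(tokens: List[str], n: int, doc_freq: Counter, num_images: int) -> Dict[tuple, float]:
    counts = ngram_counts(tokens, n)
    total = sum(counts.values())
    # TF: count / total n-grams in the sentence; IDF: log(|I| / df), with df floored at 1
    return {g: (c / total) * math.log(num_images / max(1, doc_freq[g])) for g, c in counts.items()}


def cosine(u: Dict[tuple, float], v: Dict[tuple, float]) -> float:
    dot = sum(w * v.get(g, 0.0) for g, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def cider(candidates: Dict[str, List[str]],
          references: Dict[str, List[List[str]]],
          max_n: int = 4) -> Dict[str, float]:
    """candidates: image id -> caption tokens; references: image id -> list of reference captions."""
    num_images = len(references)
    per_n: Dict[str, List[float]] = {img: [] for img in candidates}
    for n in range(1, max_n + 1):
        # document frequency: number of images whose reference set contains the n-gram
        doc_freq = Counter()
        for refs in references.values():
            doc_freq.update({g for r in refs for g in ngram_counts(r, n)})
        for img, cand in candidates.items():
            c_vec = tfidf_vector(cand, n, doc_freq, num_images)
            sims = [cosine(c_vec, tfidf_vector(r, n, doc_freq, num_images)) for r in references[img]]
            per_n[img].append(sum(sims) / len(sims))
    # uniform weights w_n = 1 / max_n
    return {img: sum(scores) / max_n for img, scores in per_n.items()}
```

N-grams that appear in every image's references (here "a" and "the" in a two-image example) get an IDF of log(1) = 0, so they do not contribute to the similarity; this down-weighting of uninformative n-grams is what the consensus-based TF-IDF weighting is for.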

Acknowledgement

Please star or fork if this code was useful for you. If you use it in a paper, please cite as:

@software{cunha_sergio2019nlp_metrics,
  author = {Gwenaelle Cunha Sergio},
  title = {{gcunhase/NLPMetrics: The Natural Language Processing Metrics Python Repository}},
  month = oct,
  year = 2019,
  doi = {10.5281/zenodo.3496559},
  version = {v1.0},
  publisher = {Zenodo},
  url = {https://github.com/gcunhase/NLPMetrics}
}
