

PREDATOR: Registration of 3D Point Clouds with Low Overlap (CVPR 2021, Oral)

This repository represents the official implementation of the paper:

PREDATOR: Registration of 3D Point Clouds with Low Overlap

*Shengyu Huang, *Zan Gojcic, Mikhail Usvyatsov, Andreas Wieser, Konrad Schindler

ETH Zurich | * Equal contribution

For an implementation using the MinkowskiEngine backbone, please check this

For more information, please see the project website

Contact

If you have any questions, please let us know:

- Shengyu Huang {[email protected]}

- Zan Gojcic {[email protected]}

News

- 2021-02-28: MinkowskiEngine-based PREDATOR release

- 2021-02-23: ModelNet and KITTI release

- 2020-11-30: Code and paper release

Instructions

This code has been tested on

- Python 3.8.5, PyTorch 1.7.1, CUDA 11.2, gcc 9.3.0, GeForce RTX 3090/GeForce GTX 1080Ti
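If you want to compare your own setup against the tested configuration before installing, a small check like the one below can help. It is a minimal sketch that is not part of the repository: `check_environment` is a hypothetical helper, and it only compares the Python and PyTorch versions (CUDA, gcc and the GPU model are not checked).

```python
import sys
from importlib import metadata

# Versions listed above as tested; other versions may work but are untested.
TESTED_PYTHON = (3, 8, 5)
TESTED_TORCH = "1.7.1"


def check_environment():
    """Return a dict comparing the running environment with the tested setup."""
    report = {
        "python": ".".join(str(v) for v in sys.version_info[:3]),
        "python_matches": tuple(sys.version_info[:3]) == TESTED_PYTHON,
    }
    try:
        # Reads the installed package metadata without importing torch.
        torch_version = metadata.version("torch")
    except metadata.PackageNotFoundError:
        torch_version = None
    report["torch"] = torch_version
    # Local build suffixes such as "+cu110" are stripped before comparing.
    report["torch_matches"] = (
        torch_version is not None and torch_version.split("+")[0] == TESTED_TORCH
    )
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```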

Note: We have observed random data loader crashes caused by memory issues. If you encounter similar crashes, please consider reducing the number of data loader workers or increasing the CPU RAM. We have now also released a sparse convolution-based Predator; have a look here!

Requirements

To create a virtual environment and install the required dependencies, please run the following commands in your working folder:

git clone https://github.com/ShengyuH/OverlapPredator.git

virtualenv predator; source predator/bin/activate

cd OverlapPredator; pip install -r requirements.txt

cd cpp_wrappers; sh compile_wrappers.sh; cd ..

Datasets and pretrained models

For the KITTI dataset, please follow the instructions on the KITTI Odometry website to download the KITTI odometry training set.

We provide

- preprocessed 3DMatch pairwise datasets (voxel-grid subsampled fragments together with their ground truth transformation matrices)

- ModelNet dataset

- pretrained models on 3DMatch, KITTI and ModelNet

The preprocessed data and models can be downloaded by running:

sh scripts/download_data_weight.sh

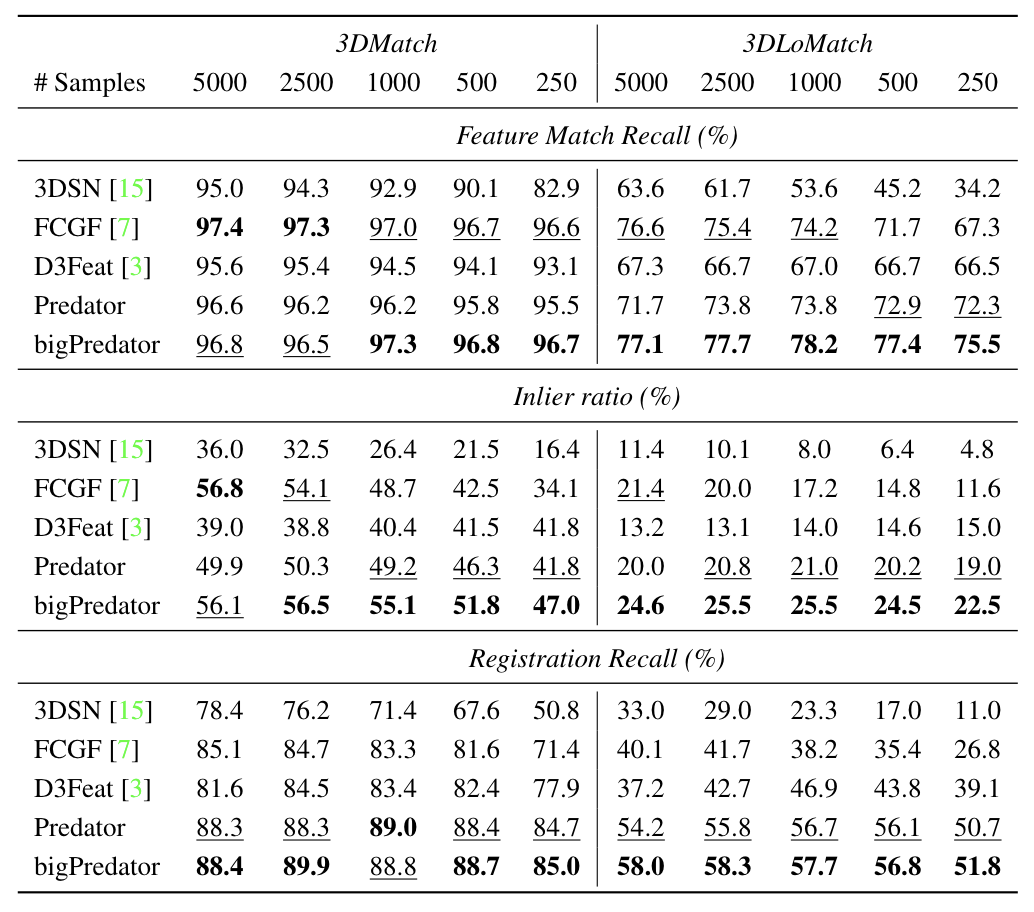

Predator is the model evaluated in the paper, whereas bigPredator is a wider network trained on a single GeForce RTX 3090.

| Model | first_feats_dim | gnn_feats_dim | # parameters |
|---|---|---|---|
| Predator | 128 | 256 | 7.43M |
| bigPredator | 256 | 512 | 29.67M |
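The parameter counts in the table are consistent with a simple scaling argument, assuming (this is our assumption, not stated above) that doubling `first_feats_dim` and `gnn_feats_dim` doubles the channel widths throughout the network. The weights of a convolution or linear layer scale with the product of its input and output widths, so the total should grow roughly fourfold; bias and normalization terms grow only linearly, which is one reason the observed ratio can fall slightly short of 4. A quick arithmetic check:

```python
# Figures copied from the table (millions of parameters).
predator_params = 7.43
big_predator_params = 29.67

# Both first_feats_dim and gnn_feats_dim double from Predator to bigPredator.
width_factor = 256 / 128
assert width_factor == 512 / 256

observed_ratio = big_predator_params / predator_params
# A weight tensor holds C_in * C_out entries (times kernel size), so doubling
# both widths multiplies it by width_factor ** 2.
expected_ratio = width_factor ** 2

print(f"observed: {observed_ratio:.2f}x, expected from width scaling: {expected_ratio:.0f}x")
# observed: 3.99x, expected from width scaling: 4x
```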

The results of both Predator and bigPredator, obtained using the evaluation protocol described in the paper, are available in the bottom table:

3DMatch (Indoor)

Train

After creating the virtual environment and downloading the datasets, Predator can be trained using:

python main.py configs/train/indoor.yaml

Evaluate

For 3DMatch, to reproduce Table 2 in our main paper, we first extract features and overlap/matchability scores by running:

python main.py configs/test/indoor.yaml

The features will be saved to snapshot/indoor/3DMatch. The transformation parameters can then be estimated with RANSAC using:

for N_POINTS in 250 500 1000 2500 5000

do

python scripts/evaluate_predator.py --source_path snapshot/indoor/3DMatch --n_points $N_POINTS --benchmark 3DMatch

done

Depending on the n_points used by RANSAC, this might take a few minutes. The final results are stored in est_traj/{benchmark}/{n_points}/result. To evaluate PREDATOR on the 3DLoMatch benchmark, please also change 3DMatch to 3DLoMatch in configs/test/indoor.yaml.
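The n_points argument sets how many points per fragment are handed to RANSAC. As we understand the paper, these points are not drawn uniformly but with probability proportional to the product of each point's predicted overlap and matchability scores. The sketch below illustrates that idea in plain Python; `sample_by_scores` is a hypothetical helper, the weighting is our reading of the method, and the repository's own implementation (including the exact sampling routine) may differ. It uses Efraimidis-Spirakis keys to draw a weighted sample without replacement.

```python
import heapq
import math
import random


def sample_by_scores(overlap, matchability, n_points, seed=None):
    """Pick up to n_points distinct indices, favouring high overlap * matchability.

    Assumed weight per point: w = overlap * matchability.
    Efraimidis-Spirakis: key = log(u) / w with u ~ U(0, 1]; the n largest keys
    form a weighted random sample without replacement.
    """
    if len(overlap) != len(matchability):
        raise ValueError("score lists must have the same length")
    rng = random.Random(seed)
    keyed = []
    for idx, (o, m) in enumerate(zip(overlap, matchability)):
        w = o * m
        if w <= 0:
            continue  # zero-weight points are never selected
        u = 1.0 - rng.random()  # in (0, 1], avoids log(0)
        keyed.append((math.log(u) / w, idx))
    return [idx for _, idx in heapq.nlargest(n_points, keyed)]


# Usage: point 1 has zero overlap and point 4 zero matchability, so neither is picked.
overlap = [0.9, 0.0, 0.8, 0.1, 0.7]
matchability = [0.9, 1.0, 0.5, 0.2, 0.0]
print(sample_by_scores(overlap, matchability, n_points=2, seed=0))
```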

Demo

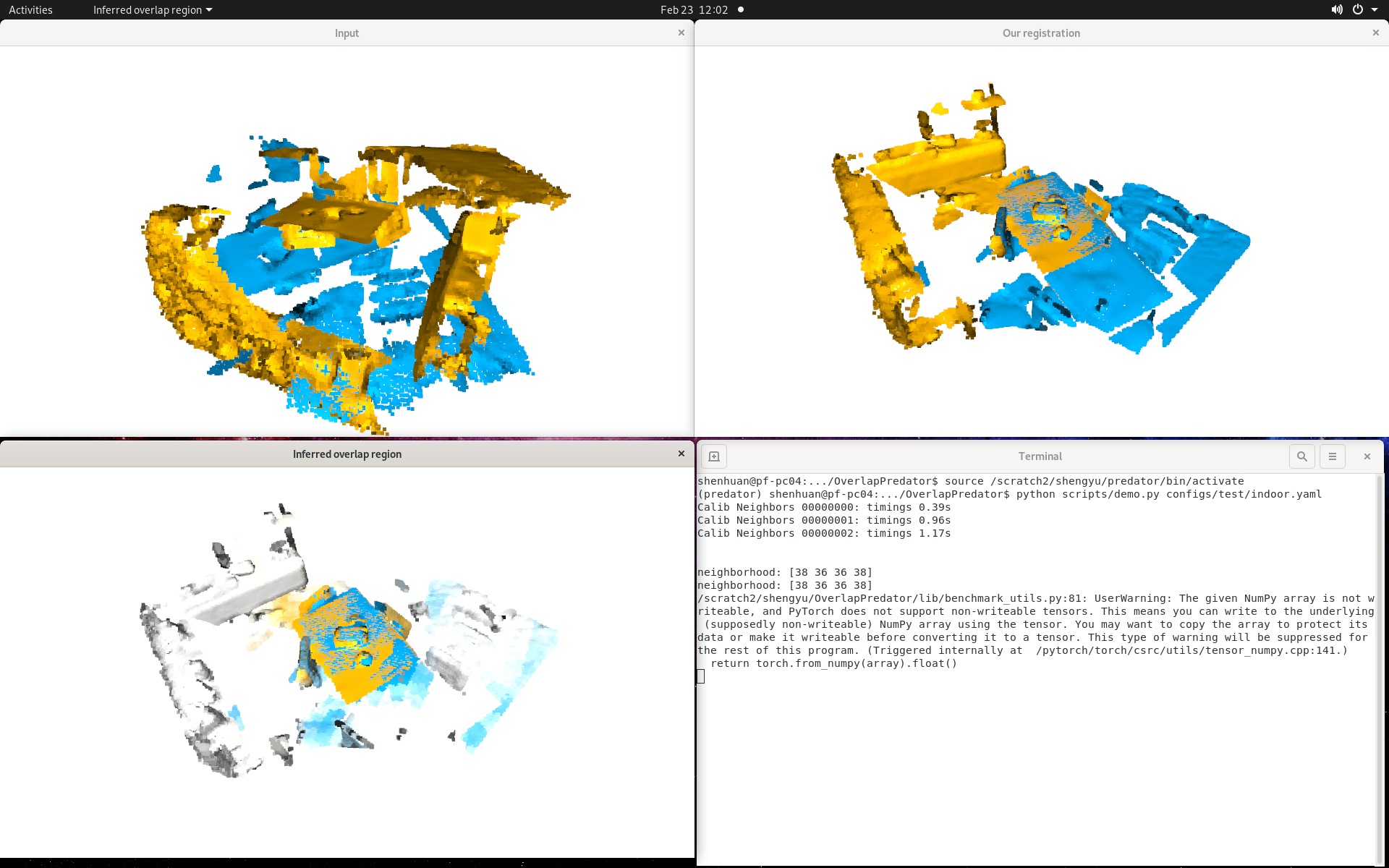

We prepared a small demo, which demonstrates the whole Predator pipeline using two random fragments from the 3DMatch dataset. To carry out the demo, please run:

python scripts/demo.py configs/test/indoor.yaml

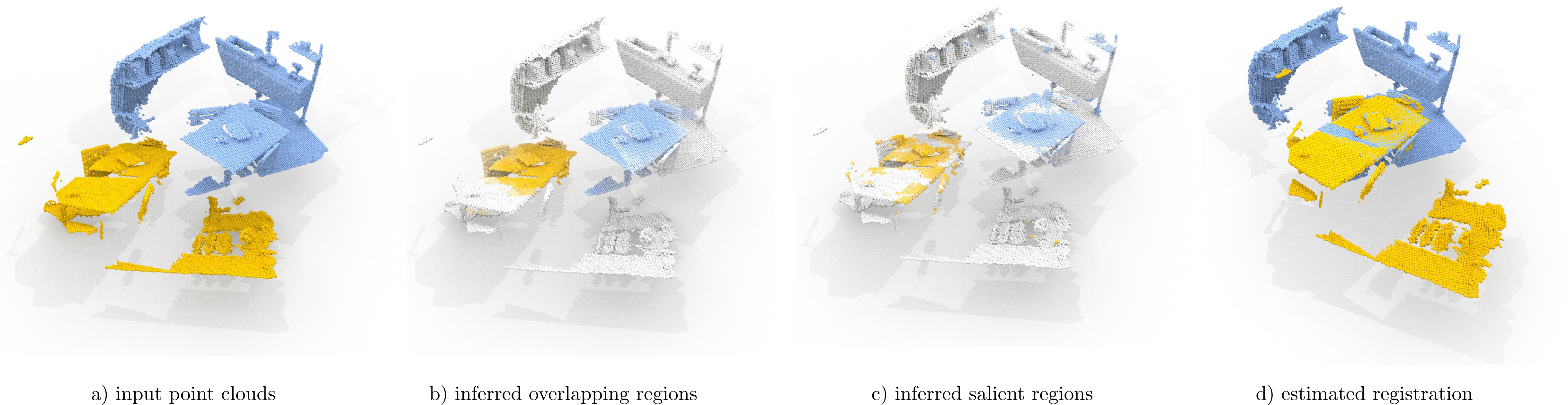

The demo script will visualize the input point clouds, the inferred overlap regions, and the point clouds aligned with the estimated transformation parameters.
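Aligning the source fragment with the estimated transformation amounts to applying p' = R p + t to every source point, where R and t are the rotation and translation blocks of a 4x4 homogeneous matrix. The dependency-free sketch below shows just that step; `apply_transform` is a hypothetical helper, and the demo script may implement the alignment and visualization differently.

```python
def apply_transform(T, points):
    """Apply a 4x4 homogeneous rigid transform T to an iterable of (x, y, z) points.

    The upper-left 3x3 block of T is the rotation R and the last column of the
    first three rows is the translation t, so each point becomes R p + t.
    """
    R = [row[:3] for row in T[:3]]
    t = [row[3] for row in T[:3]]
    return [
        tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
        for p in points
    ]


# Usage: 90-degree rotation about z followed by a shift of 1 along x.
T = [
    [0.0, -1.0, 0.0, 1.0],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
aligned = apply_transform(T, [(1.0, 0.0, 0.0), (0.0, 0.0, 2.0)])
print(aligned)  # [(1.0, 1.0, 0.0), (1.0, 0.0, 2.0)]
```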

KITTI (Outdoor)

We provide a small script to evaluate Predator on the KITTI test set. After configuring the KITTI dataset, please run:

python main.py configs/test/kitti.yaml

The results will be saved to the log file.

ModelNet (Synthetic)

We provide a small script to evaluate Predator on the ModelNet test set; please run:

python main.py configs/test/modelnet.yaml

The rotation and translation errors could be better/worse than the reported ones due to randomness in RANSAC.
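For reference, a common way to measure the rotation error between a ground-truth rotation R_gt and an estimate R_est is the angle of their relative rotation, arccos((trace(R_gtᵀ R_est) - 1) / 2), and the translation error is commonly the Euclidean distance between the two translation vectors. The sketch below implements these standard definitions in plain Python; it is not the repository's evaluation code, and the ModelNet protocol (taken from RPMNet) may report other variants of these errors.

```python
import math


def rotation_error_deg(R_gt, R_est):
    """Angle in degrees of the relative rotation R_gt^T R_est (3x3 nested lists)."""
    # trace(A^T B) equals the sum of element-wise products of A and B.
    trace = sum(R_gt[i][j] * R_est[i][j] for i in range(3) for j in range(3))
    cos_angle = (trace - 1.0) / 2.0
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding error
    return math.degrees(math.acos(cos_angle))


def translation_error(t_gt, t_est):
    """Euclidean distance between ground-truth and estimated translations."""
    return math.dist(t_gt, t_est)


# Usage: a 90-degree error about z and a (3, 4, 0) translation offset.
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
Rz90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
print(rotation_error_deg(I3, Rz90), translation_error((0, 0, 0), (3, 4, 0)))
```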

Citation

If you find this code useful for your work or use it in your project, please consider citing:

@article{huang2020predator,
title={PREDATOR: Registration of 3D Point Clouds with Low Overlap},
author={Shengyu Huang and Zan Gojcic and Mikhail Usvyatsov and Andreas Wieser and Konrad Schindler},
journal={CVPR},
year={2021}
}

Acknowledgments

In this project we use (parts of) the official implementations of the following works:

- FCGF (KITTI preprocessing)

- D3Feat (KPConv backbone)

- 3DSmoothNet (3DMatch preparation)

- MultiviewReg (3DMatch benchmark)

- SuperGlue (Transformer part)

- DGCNN (self-gnn)

- RPMNet (ModelNet preprocessing and evaluation)

We thank the respective authors for open-sourcing their methods. We would also like to thank the reviewers, especially Reviewer 2, for their valuable input.