l11x0m7 / Question_answering_models

Question_Answering_Models

This repo collects and reproduces models for question answering and machine reading comprehension.

It is still being extended.

Community QA

Dataset

WikiQA, TrecQA, InsuranceQA

data preprocessing on WikiQA

cd cQA

bash download.sh

python preprocess_wiki.py

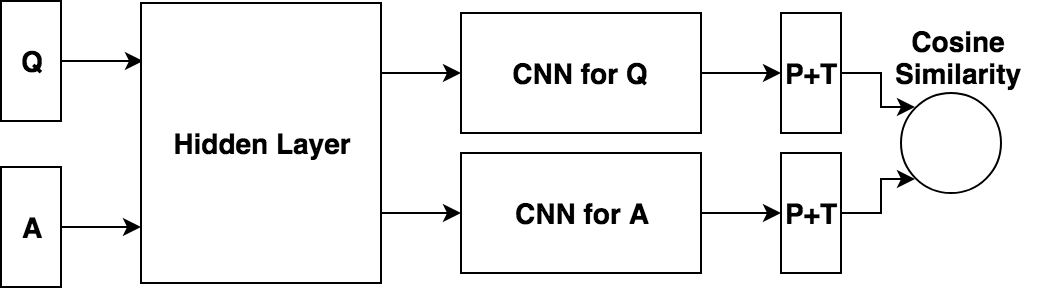

Siamese-NN model

This model is a simple implementation of a Siamese NN QA model trained in a pointwise manner.

train model

python siamese.py --train

test model

python siamese.py --test

Siamese-CNN model

This model is a simple implementation of a Siamese CNN QA model trained in a pointwise manner.

train model

python siamese.py --train

test model

python siamese.py --test

Siamese-RNN model

This model is a simple implementation of a Siamese RNN/LSTM/GRU QA model trained in a pointwise manner.

train model

python siamese.py --train

test model

python siamese.py --test

note

All three models above are based on the vanilla Siamese structure. You can easily combine these basic deep learning modules to build your own models.
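To make the pointwise Siamese idea concrete, here is a minimal sketch in plain Python. It is not the code in `siamese.py`: the averaging encoder, the toy embeddings, and the names `encode`, `cosine`, and `pointwise_score` are all assumptions chosen for illustration. The point is only that the question and the answer pass through the same encoder and each pair gets one independent score.

```python
import math


def encode(tokens, embeddings):
    """Shared encoder (hypothetical): average the word vectors of a token list."""
    dim = len(next(iter(embeddings.values())))
    total = [0.0] * dim
    count = 0
    for tok in tokens:
        vec = embeddings.get(tok)
        if vec is None:
            continue  # skip out-of-vocabulary tokens
        total = [t + v for t, v in zip(total, vec)]
        count += 1
    return [t / count for t in total] if count else total


def cosine(u, v):
    """Cosine similarity between two vectors, 0.0 if either has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def pointwise_score(question, answer, embeddings):
    """Score one (question, answer) pair; both sides use the same encoder."""
    return cosine(encode(question, embeddings), encode(answer, embeddings))


# Toy 2-d embeddings, invented for this example only.
toy_embeddings = {
    "capital": [1.0, 0.0],
    "france": [0.8, 0.2],
    "paris": [0.9, 0.1],
    "banana": [0.0, 1.0],
}
question = ["capital", "france"]
good = pointwise_score(question, ["paris"], toy_embeddings)
bad = pointwise_score(question, ["banana"], toy_embeddings)
print(good > bad)
```

Swapping the averaging encoder for a CNN or an RNN gives the other two variants, while the scoring step stays the same.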

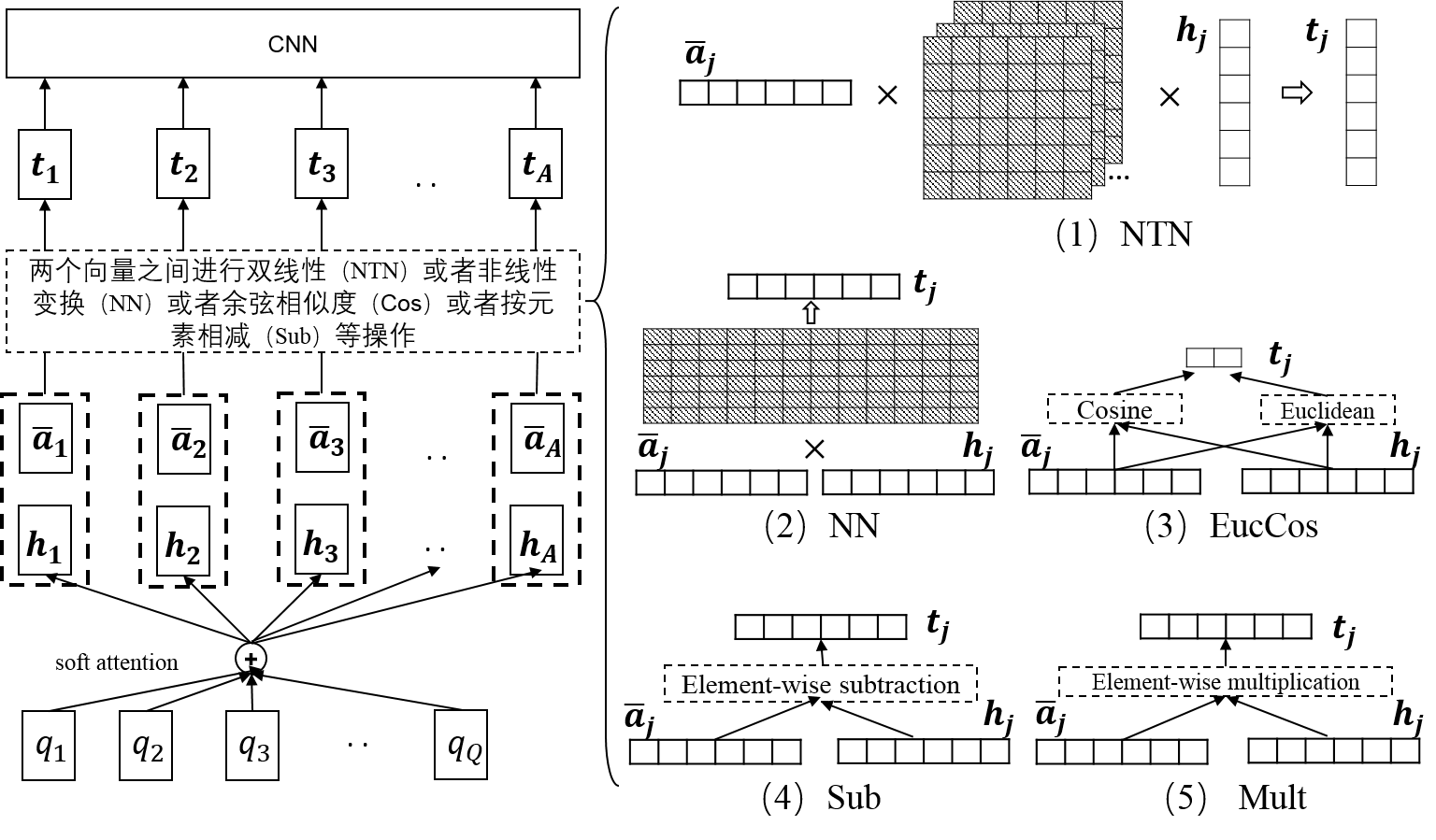

QACNN

Given a question, a positive answer, and a negative answer, this pairwise model learns to rank the positive (correct) answer above the negative one.
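A pairwise objective of this kind is often written as a margin-based hinge loss over the two answer scores. The sketch below assumes that form; the margin value and the function names `pairwise_hinge_loss` and `rank_answers` are hypothetical and may differ from what `qacnn.py` actually does.

```python
def pairwise_hinge_loss(pos_score, neg_score, margin=0.5):
    """Zero once the positive answer beats the negative one by at least `margin`."""
    return max(0.0, margin - pos_score + neg_score)


def rank_answers(scored_answers):
    """Sort (answer, score) pairs from highest to lowest score."""
    return sorted(scored_answers, key=lambda pair: pair[1], reverse=True)


# The positive answer already wins by more than the margin: no loss.
print(pairwise_hinge_loss(0.9, 0.2))
# The positive answer wins, but by less than the margin: still penalized.
print(pairwise_hinge_loss(0.4, 0.3))
print(rank_answers([("a1", 0.2), ("a2", 0.9), ("a3", 0.5)]))
```

At test time only the scoring function is needed; the candidate answers for a question are sorted by score.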

train model

python qacnn.py --train

test model

python qacnn.py --test

Decomposable Attention Model

train model

python decomp_att.py --train

test model

python decomp_att.py --test

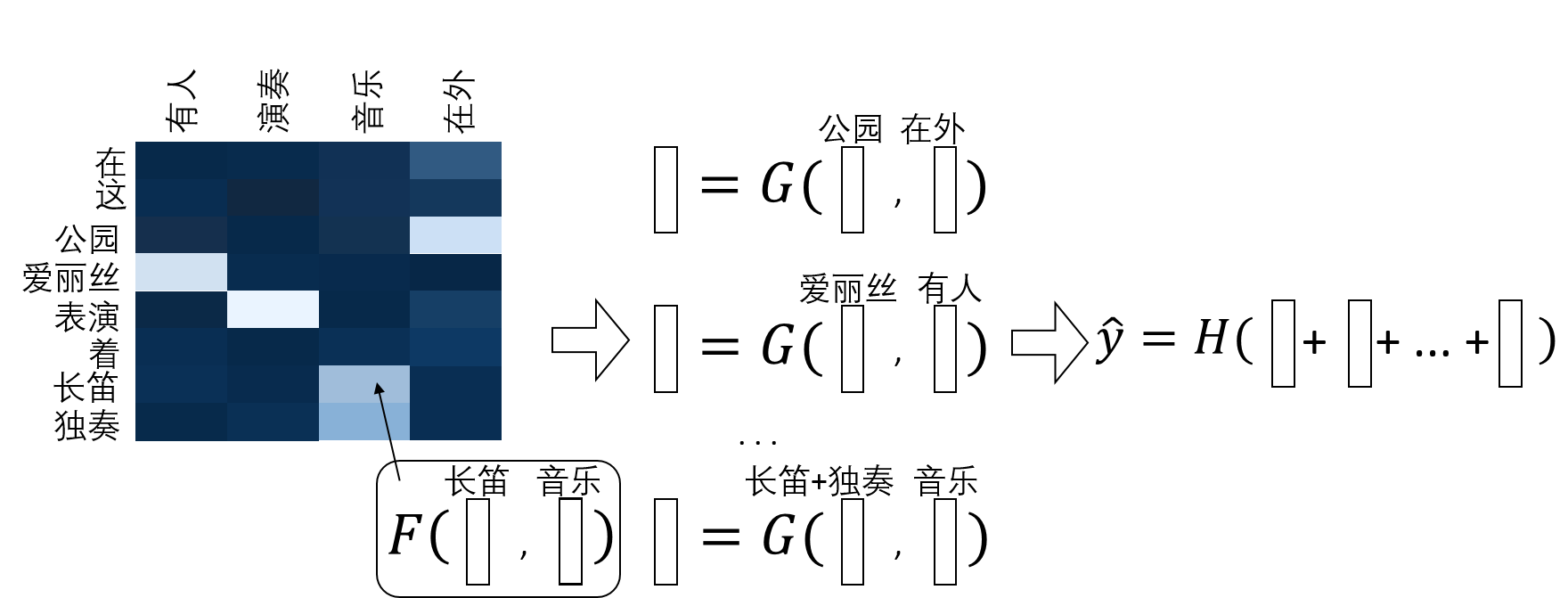

Compare-Aggregate Model with Multi-Compare

train model

python seq_match_seq.py --train

test model

python seq_match_seq.py --test

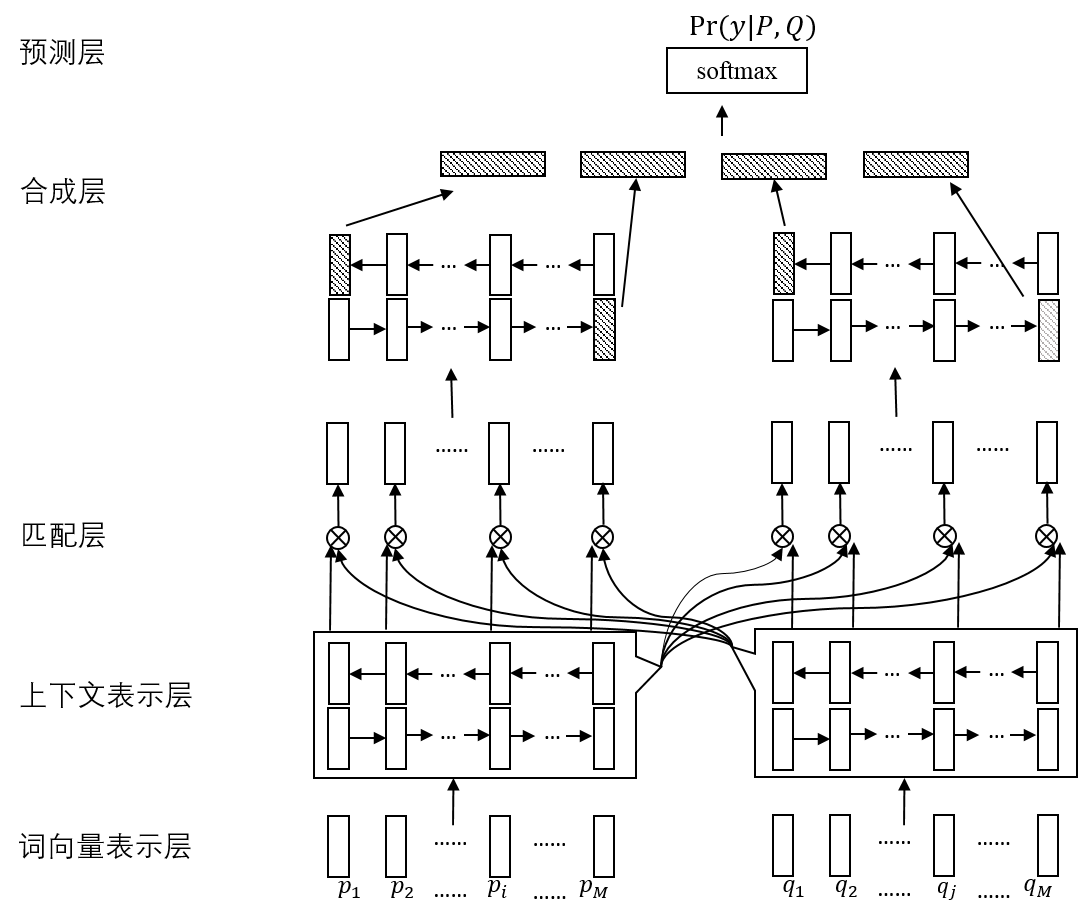

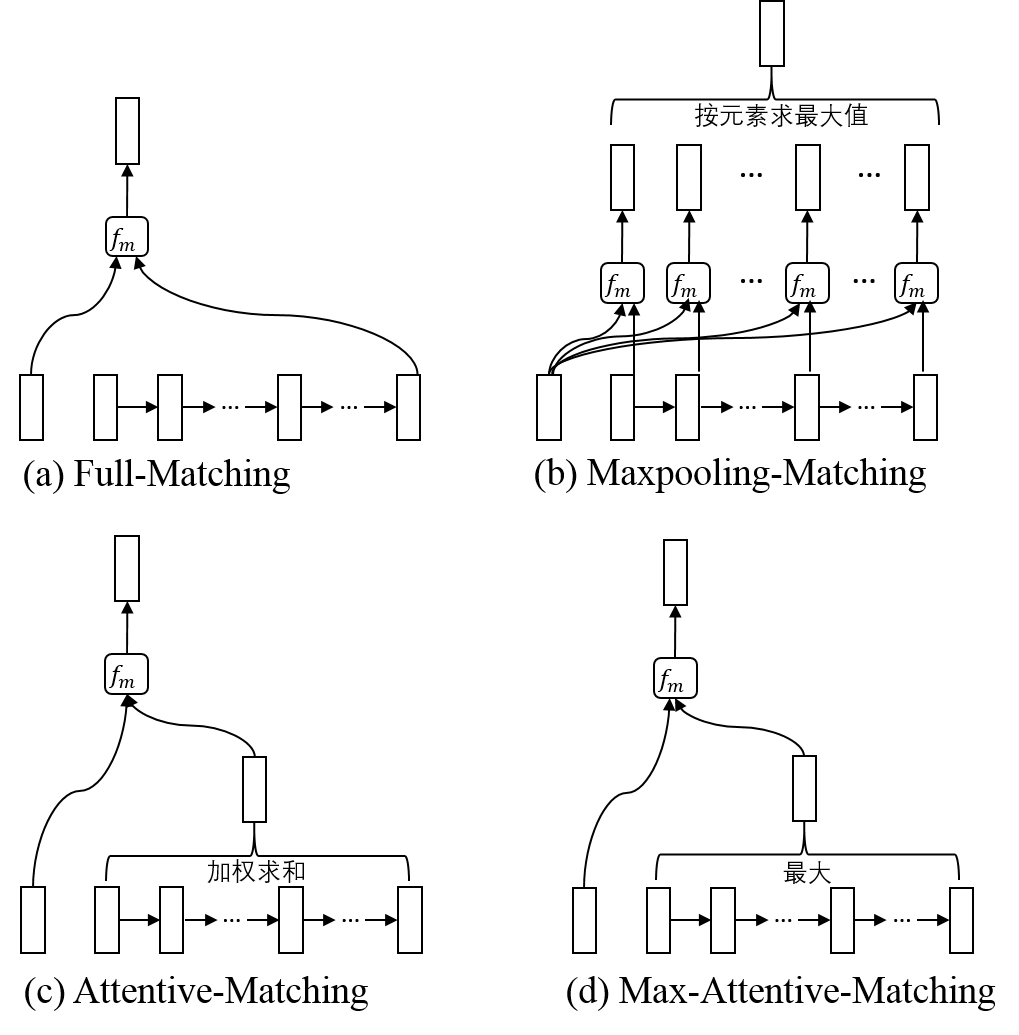

BiMPM

train model

python bimpm.py --train

test model

python bimpm.py --test

Machine Reading Comprehension

Dataset

CNN/Daily mail, CBT, SQuAD, MS MARCO, RACE

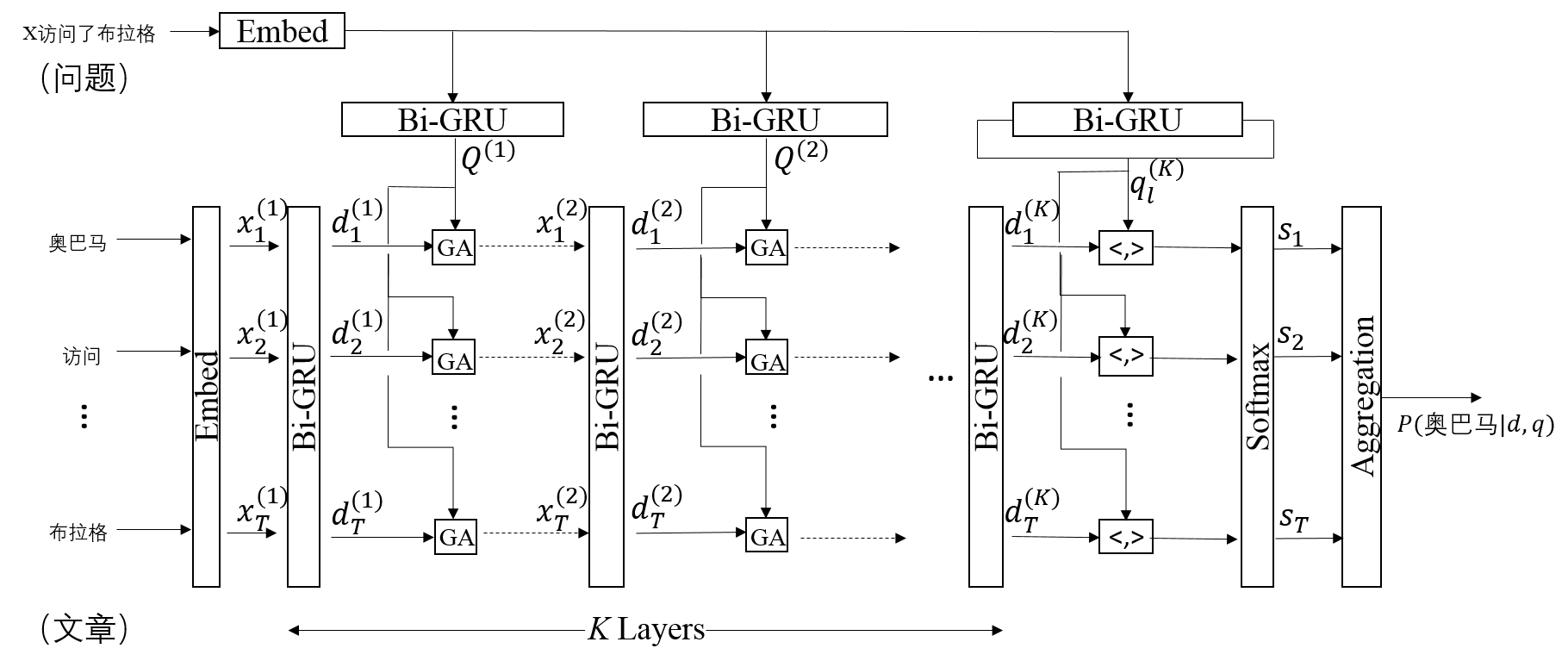

GA Reader

To be done

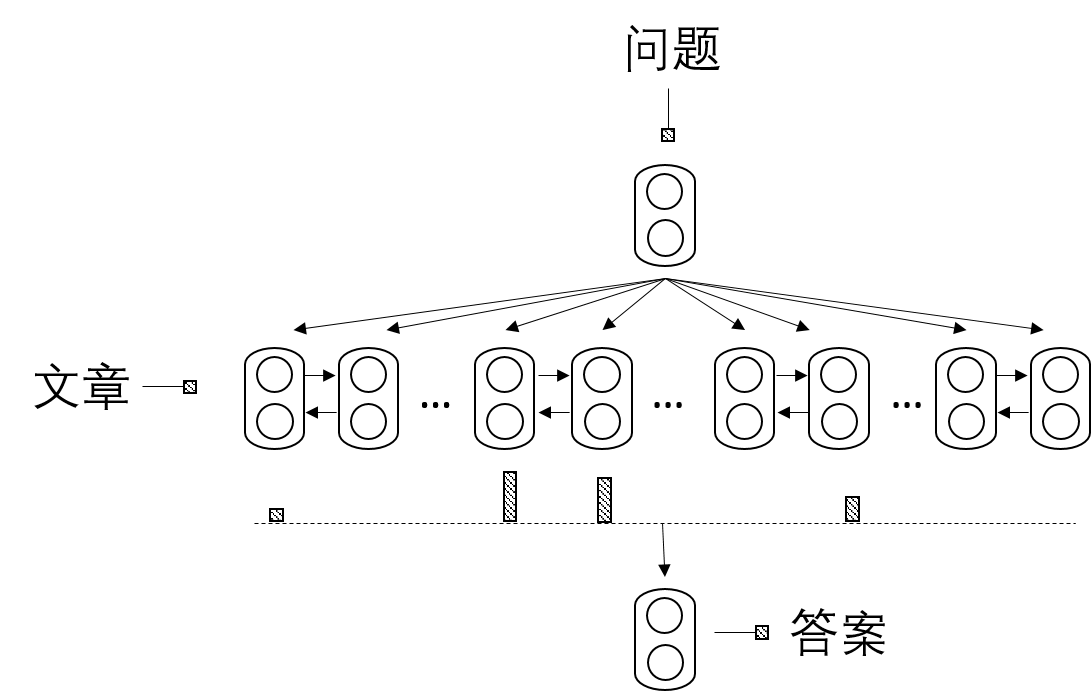

SA Reader

To be done

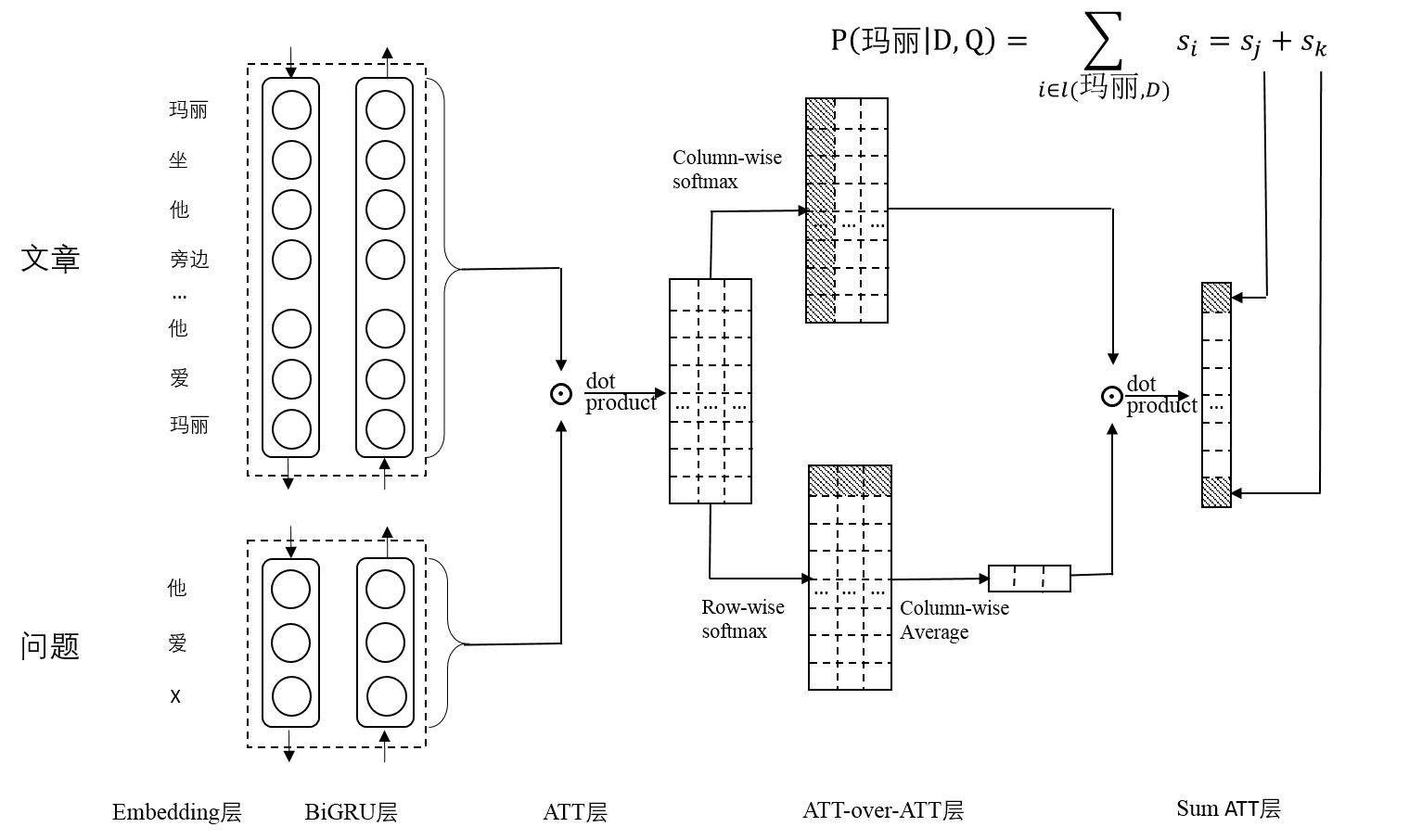

AoA Reader

To be done

Refer to:

- Attention-over-Attention Neural Networks for Reading Comprehension

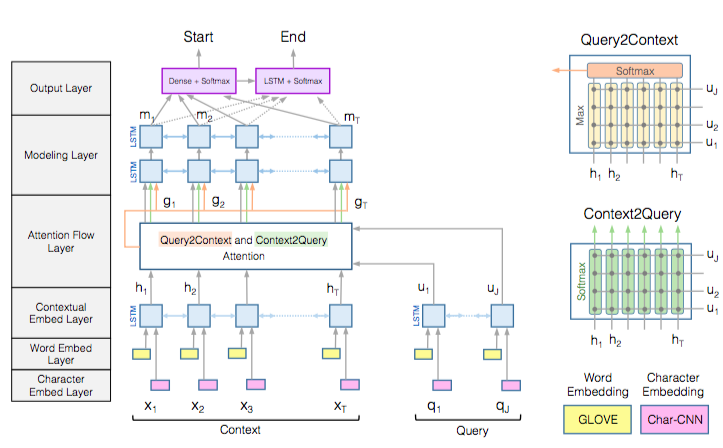

BiDAF

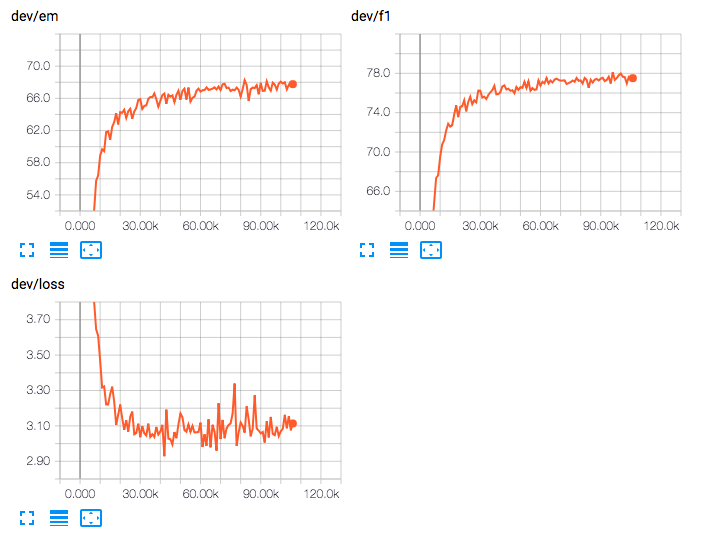

Results on the dev set (single model) in my experimental environment:

| training steps | batch size | hidden size | EM (%) | F1 (%) | speed | device |
|---|---|---|---|---|---|---|
| 120k | 32 | 75 | 67.7 | 77.3 | 3.40 it/s | 1 GTX 1080 Ti |
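The EM and F1 columns are presumably the usual SQuAD-style answer metrics: exact match after text normalization, and token-level F1 between the predicted and reference answer spans. The sketch below is a simplified version of that computation for a single reference answer; it is an assumption about how the numbers were produced, not the evaluation code used in this repo, and the function names are illustrative.

```python
import re
import string
from collections import Counter


def normalize(text):
    """Lowercase, strip punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, truth):
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(truth))


def f1_score(prediction, truth):
    """Harmonic mean of token-level precision and recall."""
    pred_tokens = normalize(prediction).split()
    true_tokens = normalize(truth).split()
    if not pred_tokens or not true_tokens:
        return float(pred_tokens == true_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(true_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(true_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The Eiffel Tower", "eiffel tower."))
print(f1_score("in Paris France", "Paris"))
```

A dataset-level score is the mean over all questions, multiplied by 100 to give a percentage. When a question has several reference answers, the common practice is to take the maximum over them.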

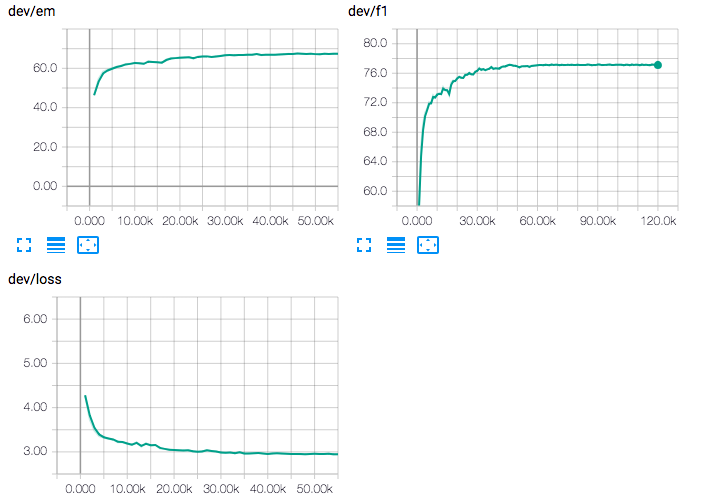

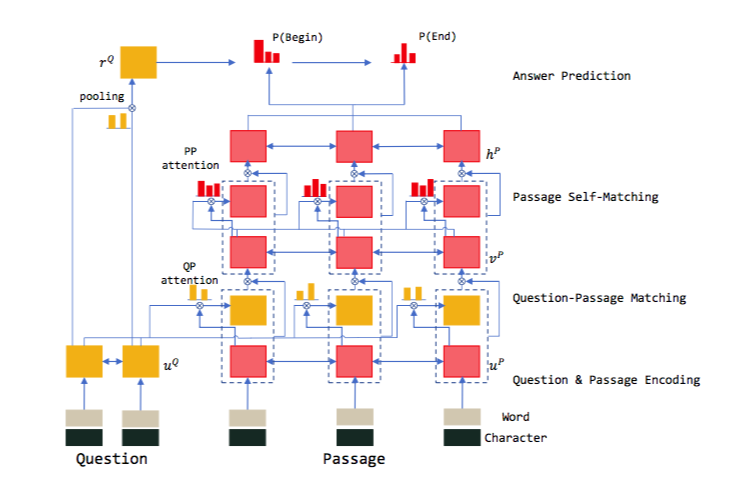

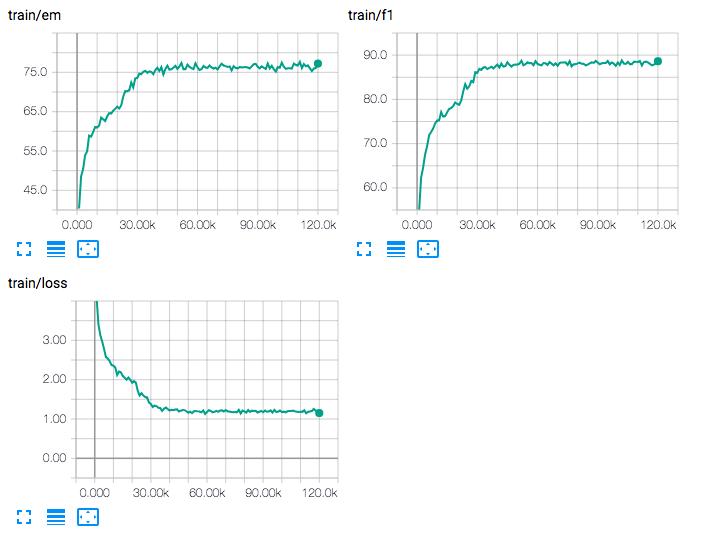

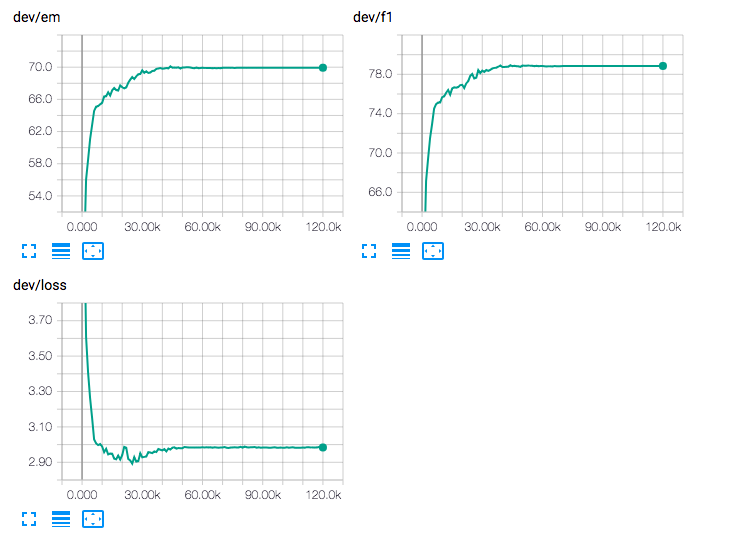

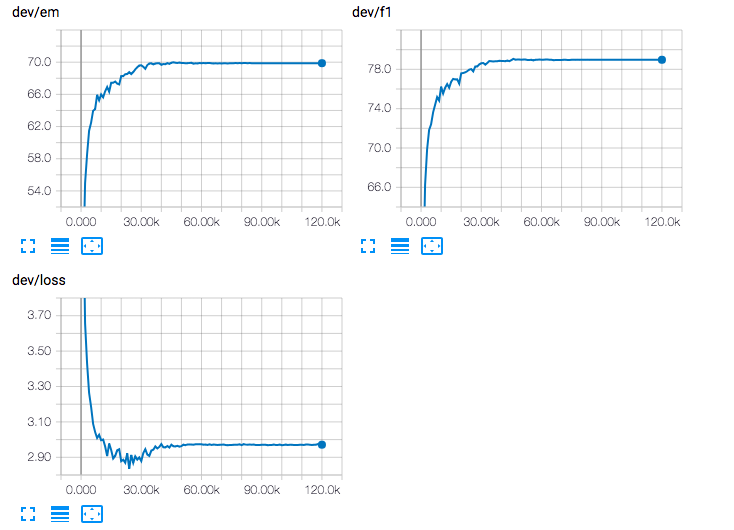

RNet

Results on the dev set (single model) in my experimental environment:

| training steps | batch size | hidden size | EM (%) | F1 (%) | speed | device | RNN type |
|---|---|---|---|---|---|---|---|
| 120k | 32 | 75 | 69.1 | 78.2 | 1.35 it/s | 1 GTX 1080 Ti | cuDNNGRU |
| 60k | 64 | 75 | 66.1 | 75.6 | 2.95 s/it | 1 GTX 1080 Ti | SRU |
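Note that the speed column mixes two units: iterations per second (it/s) for the cuDNNGRU run and seconds per iteration (s/it) for the SRU run. The small helper below puts both on the same scale and gives a rough wall-clock estimate. It takes the two rows' step counts as 120,000 and 60,000, which is an assumption about how the step column is abbreviated, and it ignores evaluation and checkpointing overhead.

```python
def seconds_per_step(value, unit):
    """Convert a speed reading to seconds per training step."""
    if unit == "it/s":
        return 1.0 / value
    if unit == "s/it":
        return value
    raise ValueError(f"unknown unit: {unit}")


def training_hours(steps, value, unit):
    """Rough wall-clock training time in hours."""
    return steps * seconds_per_step(value, unit) / 3600.0


gru_hours = training_hours(120_000, 1.35, "it/s")
sru_hours = training_hours(60_000, 2.95, "s/it")
print(round(gru_hours, 1), round(sru_hours, 1))
```

Under these assumptions the cuDNNGRU run takes roughly 25 hours and the SRU run roughly 49 hours, even though the SRU run uses half as many steps.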

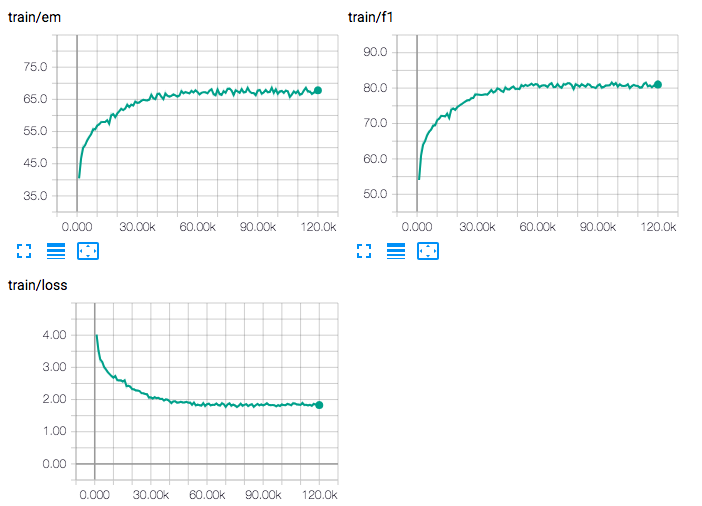

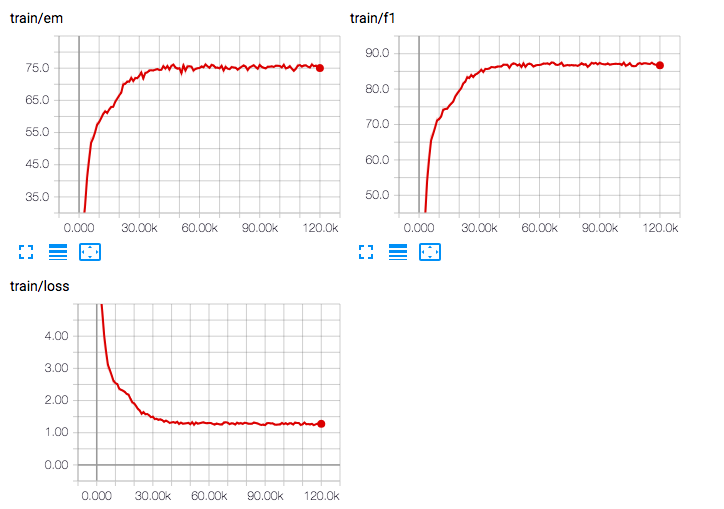

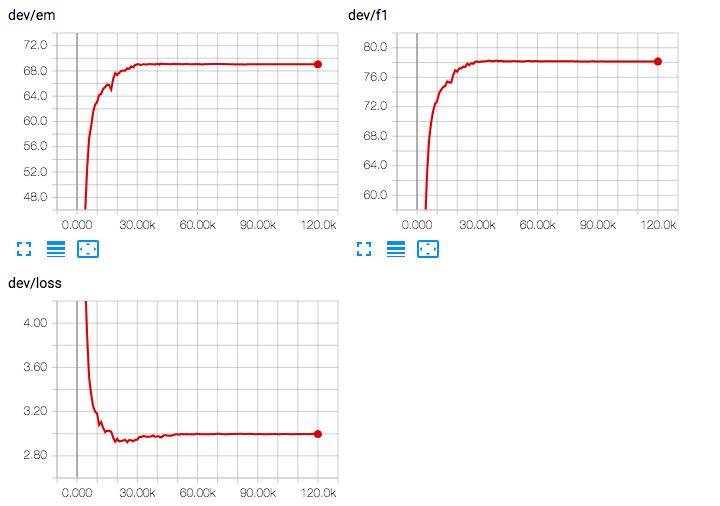

RNet trained with cuDNNGRU:

RNet trained with SRU (without optimizing operation efficiency):

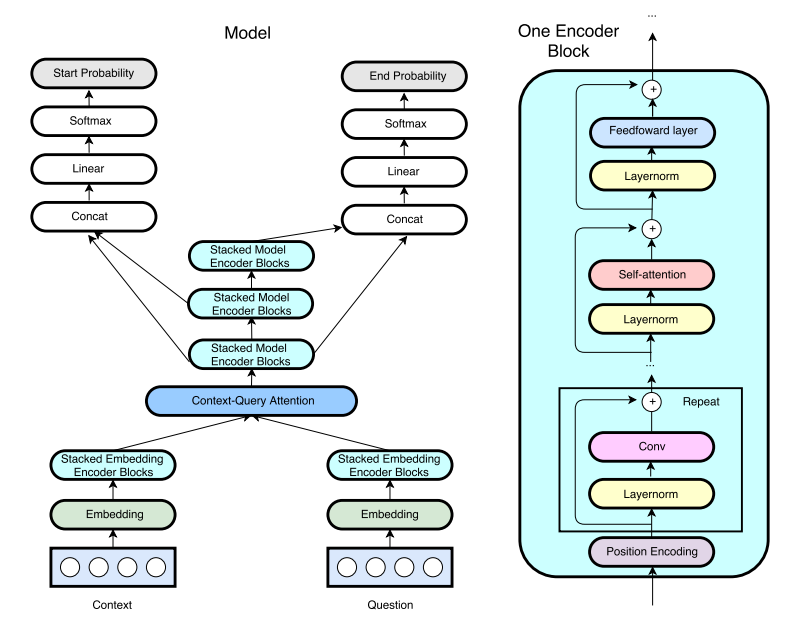

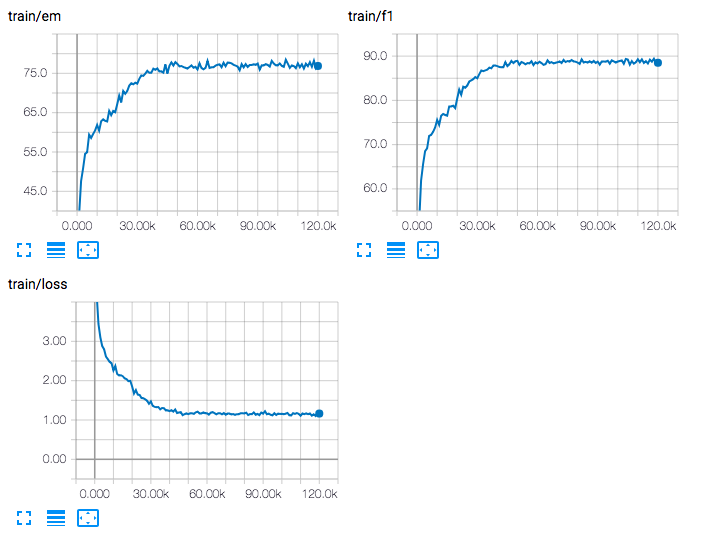

QANet

Results on the dev set (single model) in my experimental environment:

| training steps | batch size | attention heads | hidden size | EM (%) | F1 (%) | speed | device |
|---|---|---|---|---|---|---|---|
| 60k | 32 | 1 | 96 | 70.2 | 79.7 | 2.4 it/s | 1 GTX 1080 Ti |
| 120k | 32 | 1 | 75 | 70.1 | 79.4 | 2.4 it/s | 1 GTX 1080 Ti |

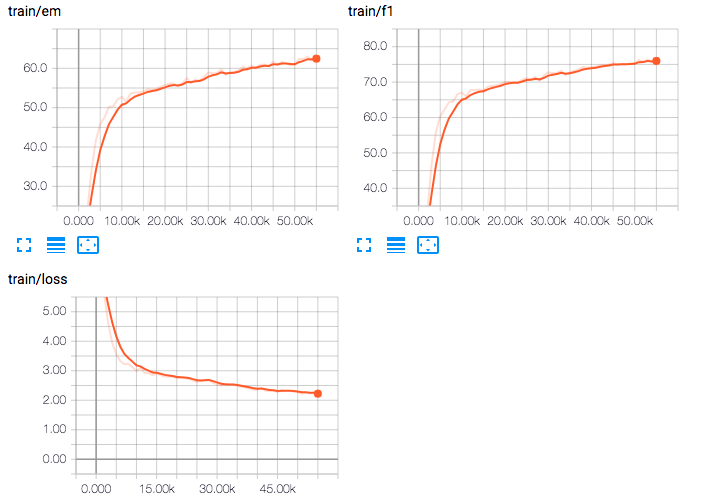

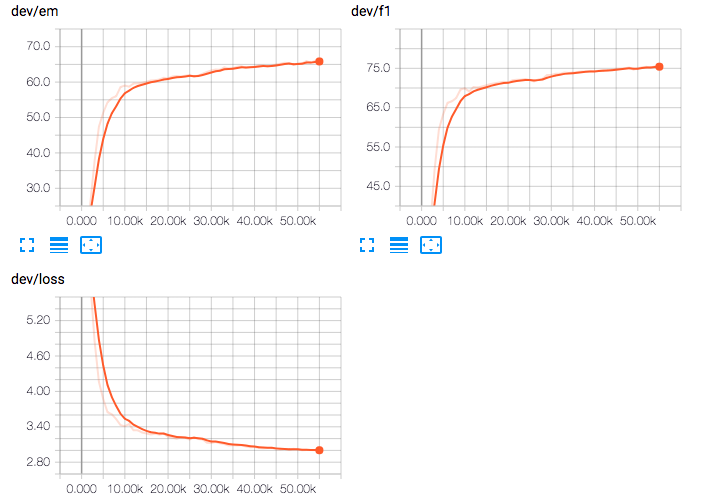

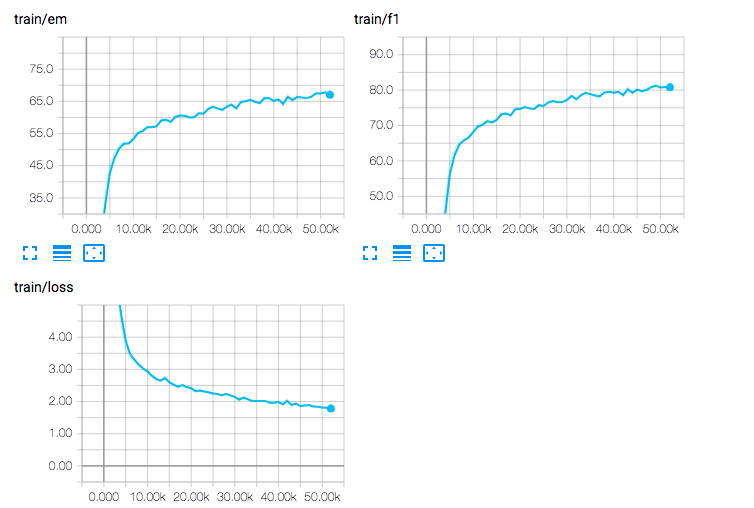

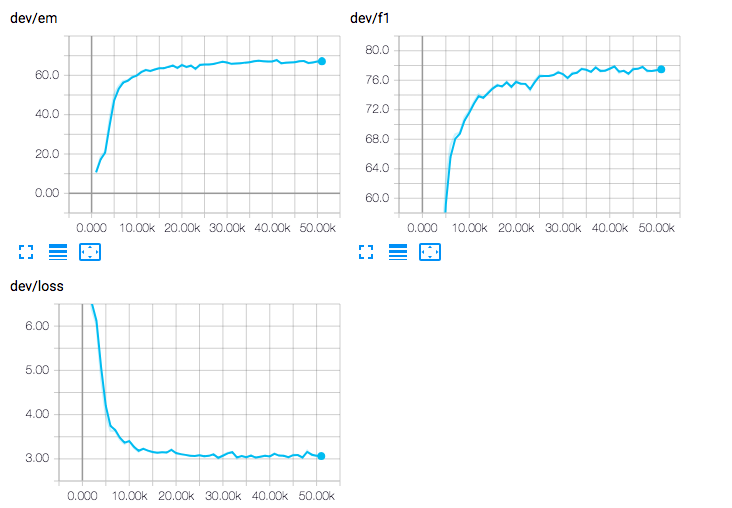

Experimental records for the first experiment:

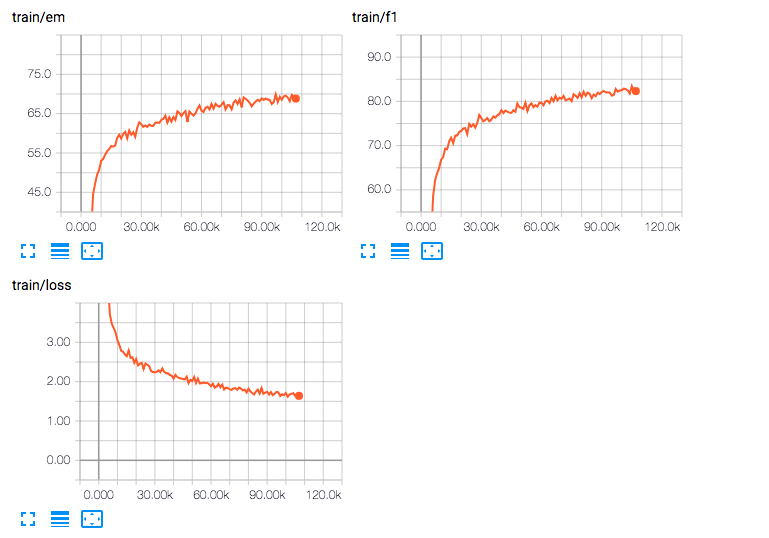

Experimental records for the second experiment (without smoothing):

Refer to:

- QANet: Combining Local Convolution with Global Self-Attention for Reading Comprehension

- github repo of NLPLearn/QANet

Hybrid Network

This repo contains my own experiments and attempts at MRC problems, and I'm still working on it.

| training steps | batch size | hidden size | EM (%) | F1 (%) | speed | device | description |
|---|---|---|---|---|---|---|---|
| 120k | 32 | 100 | 70.1 | 78.9 | 1.6 it/s | 1 GTX 1080 Ti | \ |
| 120k | 32 | 75 | 70.0 | 79.1 | 1.8 it/s | 1 GTX 1080 Ti | \ |
| 120k | 32 | 75 | 69.5 | 78.8 | 1.8 it/s | 1 GTX 1080 Ti | with spatial dropout on embeddings |
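The last row mentions spatial dropout on embeddings. The source does not say how it is implemented here; the sketch below assumes the common definition, in which whole embedding channels are zeroed for every time step of a sequence instead of dropping individual values. The function name and the list-of-lists layout are illustrative only.

```python
import random


def spatial_dropout(sequence, rate, rng=random):
    """Drop whole embedding channels across all time steps.

    sequence: list of time steps, each a list of embedding_dim floats.
    rate: probability of dropping a channel, in [0, 1).
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not sequence or rate == 0.0:
        return [list(step) for step in sequence]
    keep = 1.0 - rate
    dim = len(sequence[0])
    # One mask entry per channel, shared by every time step.
    # Kept channels are scaled by 1/keep so the expected value is unchanged.
    mask = [1.0 / keep if rng.random() < keep else 0.0 for _ in range(dim)]
    return [[x * m for x, m in zip(step, mask)] for step in sequence]


embedded = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]  # 2 steps, 4 channels
print(spatial_dropout(embedded, 0.5, random.Random(0)))
```

This applies only during training; at inference time the embeddings pass through unchanged.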

Experimental records for the first experiment (without smoothing):

Experimental records for the second experiment (without smoothing):

Information

For more information, please visit http://skyhigh233.com/blog/2018/04/26/cqa-intro/.