leftthomas / Simclr

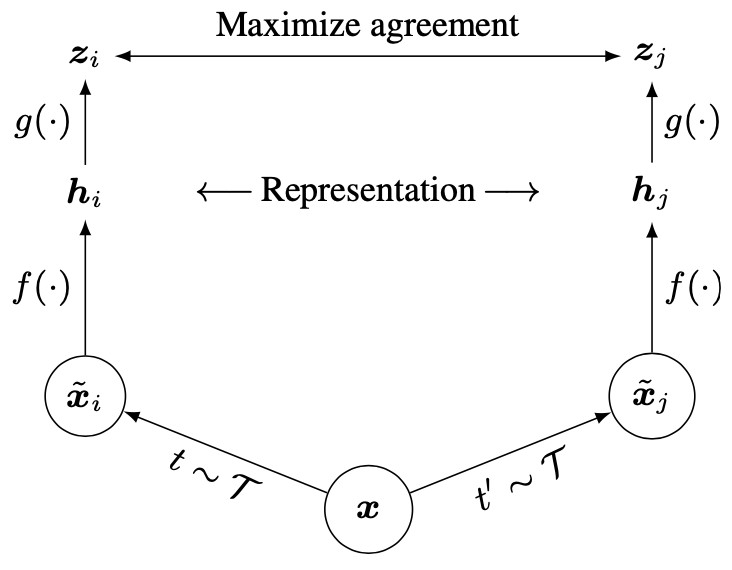

A PyTorch implementation of SimCLR based on the ICML 2020 paper "A Simple Framework for Contrastive Learning of Visual Representations".

Stars: ✭ 198

Programming Languages

python


Projects that are alternatives of or similar to Simclr

srVAE

VAE with RealNVP prior and Super-Resolution VAE in PyTorch. Code release for https://arxiv.org/abs/2006.05218.

Stars: ✭ 56 (-71.72%)

Mutual labels: unsupervised-learning, cifar10

Graph Transformer

Transformer for Graph Classification (PyTorch and TensorFlow)

Stars: ✭ 191 (-3.54%)

Mutual labels: unsupervised-learning

Dynamics

A Compositional Object-Based Approach to Learning Physical Dynamics

Stars: ✭ 159 (-19.7%)

Mutual labels: unsupervised-learning

Hidden Two Stream

Caffe implementation for "Hidden Two-Stream Convolutional Networks for Action Recognition"

Stars: ✭ 179 (-9.6%)

Mutual labels: unsupervised-learning

Resnet Cifar10 Caffe

ResNet-20/32/44/56/110 on CIFAR-10 with Caffe

Stars: ✭ 161 (-18.69%)

Mutual labels: cifar10

Naszilla

Naszilla is a Python library for neural architecture search (NAS)

Stars: ✭ 181 (-8.59%)

Mutual labels: cifar10

Stanford Cs 229 Machine Learning

VIP cheatsheets for Stanford's CS 229 Machine Learning

Stars: ✭ 12,827 (+6378.28%)

Mutual labels: unsupervised-learning

Fleetx

Paddle Distributed Training Extended (PaddlePaddle distributed training extension package).

Stars: ✭ 196 (-1.01%)

Mutual labels: unsupervised-learning

Torchdistill

PyTorch-based modular, configuration-driven framework for knowledge distillation. 🏆18 methods including SOTA are implemented so far. 🎁 Trained models, training logs and configurations are available for ensuring the reproducibility.

Stars: ✭ 177 (-10.61%)

Mutual labels: cifar10

Opencog

A framework for integrated Artificial Intelligence & Artificial General Intelligence (AGI)

Stars: ✭ 2,132 (+976.77%)

Mutual labels: unsupervised-learning

Tensorflowprojects

Deep learning using TensorFlow

Stars: ✭ 167 (-15.66%)

Mutual labels: unsupervised-learning

Nnpulearning

Non-negative Positive-Unlabeled (nnPU) and unbiased Positive-Unlabeled (uPU) learning reproduction code on MNIST and CIFAR10

Stars: ✭ 181 (-8.59%)

Mutual labels: cifar10

Danmf

A sparsity aware implementation of "Deep Autoencoder-like Nonnegative Matrix Factorization for Community Detection" (CIKM 2018).

Stars: ✭ 161 (-18.69%)

Mutual labels: unsupervised-learning

Free Ai Resources

🚀 FREE AI Resources - 🎓 Courses, 👷 Jobs, 📝 Blogs, 🔬 AI Research, and many more - for everyone!

Stars: ✭ 192 (-3.03%)

Mutual labels: unsupervised-learning

Remixautoml

R package for automation of machine learning, forecasting, feature engineering, model evaluation, model interpretation, data generation, and recommenders.

Stars: ✭ 159 (-19.7%)

Mutual labels: unsupervised-learning

Factorvae

PyTorch implementation of FactorVAE, proposed in "Disentangling by Factorising" (http://arxiv.org/abs/1802.05983)

Stars: ✭ 176 (-11.11%)

Mutual labels: unsupervised-learning

Distancegan

PyTorch implementation of "One-Sided Unsupervised Domain Mapping" (NIPS 2017)

Stars: ✭ 180 (-9.09%)

Mutual labels: unsupervised-learning

Variational Ladder Autoencoder

Implementation of VLAE

Stars: ✭ 196 (-1.01%)

Mutual labels: unsupervised-learning

Pixelnet

The repository contains source code and models to use PixelNet architecture used for various pixel-level tasks. More details can be accessed at <http://www.cs.cmu.edu/~aayushb/pixelNet/>.

Stars: ✭ 194 (-2.02%)

Mutual labels: unsupervised-learning

SimCLR

A PyTorch implementation of SimCLR based on the ICML 2020 paper "A Simple Framework for Contrastive Learning of Visual Representations".

Requirements

- PyTorch

conda install pytorch torchvision cudatoolkit=10.0 -c pytorch

- thop

pip install thop

Dataset

This repo uses the CIFAR10 dataset; PyTorch downloads it into the data directory automatically.

Usage

Train SimCLR

python main.py --batch_size 1024 --epochs 1000

optional arguments:

--feature_dim Feature dim for latent vector [default value is 128]

--temperature Temperature used in softmax [default value is 0.5]

--k Top k most similar images used to predict the label [default value is 200]

--batch_size Number of images in each mini-batch [default value is 512]

--epochs Number of sweeps over the dataset to train [default value is 500]
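The --temperature option is the τ of SimCLR's contrastive loss (NT-Xent), which divides the cosine similarities before the softmax. The NumPy sketch below illustrates that loss for a single batch. It is not the code in main.py; the function name nt_xent_loss is hypothetical, and it assumes the projection outputs (of size --feature_dim) for the two augmented views arrive as two (N, D) arrays whose rows i form positive pairs.

```python
import numpy as np


def nt_xent_loss(z1: np.ndarray, z2: np.ndarray, temperature: float = 0.5) -> float:
    """Hypothetical sketch of the NT-Xent loss.

    z1, z2: (N, D) projections of two augmented views of the same N images.
    Row i of z1 and row i of z2 are a positive pair; every other row of the
    combined 2N batch is treated as a negative.
    """
    z = np.concatenate([z1, z2], axis=0)                  # (2N, D)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)      # L2-normalize each row
    sim = z @ z.T / temperature                           # cosine similarity / tau
    n2 = z.shape[0]
    n = n2 // 2
    np.fill_diagonal(sim, -np.inf)                        # a sample is not its own negative
    # Index of the positive partner for each of the 2N rows.
    pos = np.concatenate([np.arange(n, n2), np.arange(0, n)])
    # Numerically stable log-softmax over each row.
    row_max = sim.max(axis=1, keepdims=True)
    log_denom = row_max[:, 0] + np.log(np.exp(sim - row_max).sum(axis=1))
    log_prob_pos = sim[np.arange(n2), pos] - log_denom
    return float(-log_prob_pos.mean())
```

With nearly identical views the loss falls well below that of unrelated views. Every non-partner in the 2N batch acts as a negative, which is one reason SimCLR benefits from large batch sizes.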

Linear Evaluation

python linear.py --batch_size 1024 --epochs 200

optional arguments:

--model_path The pretrained model path [default value is 'results/128_0.5_200_512_500_model.pth']

--batch_size Number of images in each mini-batch [default value is 512]

--epochs Number of sweeps over the dataset to train [default value is 100]
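The linear evaluation protocol keeps the pretrained encoder frozen and trains only a linear classifier on its features. As a minimal sketch of that second stage, the NumPy code below fits a softmax classifier to precomputed features. The function names are hypothetical, plain full-batch gradient descent stands in for whatever optimizer linear.py actually uses, and the features are assumed to be roughly unit-scale (e.g. L2-normalized) so the default step size stays stable.

```python
import numpy as np


def train_linear_probe(features, labels, num_classes, epochs=200, lr=0.5):
    """Fit a softmax (multinomial logistic regression) classifier on frozen features.

    Assumes features are roughly unit-scale; lower lr for large-magnitude inputs.
    """
    n, d = features.shape
    w = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[labels]               # (n, C) one-hot targets
    for _ in range(epochs):
        logits = features @ w + b
        logits -= logits.max(axis=1, keepdims=True)    # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n                    # d(mean cross-entropy)/d(logits)
        w -= lr * features.T @ grad                    # only the linear layer is updated
        b -= lr * grad.sum(axis=0)
    return w, b


def predict(features, w, b):
    """Return the highest-scoring class index for each feature row."""
    return np.argmax(features @ w + b, axis=1)
```

Because the encoder never changes in this stage, its features can be computed once and reused across every epoch of the linear probe.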

Results

There are some differences between this implementation and the official implementation. The model (ResNet50) is trained on one NVIDIA Tesla V100 (32 GB) GPU, and:

- No Gaussian blur is used;
- The Adam optimizer with a learning rate of 1e-3 is used instead of the LARS optimizer;
- No linear learning rate scaling is used;
- No linear warmup or cosine LR schedule is used.
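The optimizer-related differences refer to the recipe described in the SimCLR paper, which scales the LARS learning rate as 0.3 × BatchSize/256, warms it up linearly over the first 10 epochs, and then decays it with a cosine schedule. For contrast with this repo's fixed Adam rate, that schedule can be sketched per epoch as below. The function name simclr_lr is hypothetical, decaying all the way to zero is an assumption, and the official code may update the rate per step rather than per epoch.

```python
import math


def simclr_lr(epoch: float, total_epochs: int, batch_size: int,
              base_lr: float = 0.3, warmup_epochs: int = 10) -> float:
    """Hypothetical per-epoch learning rate: linear scaling, warmup, cosine decay."""
    peak = base_lr * batch_size / 256                  # linear learning rate scaling
    if epoch < warmup_epochs:
        return peak * epoch / warmup_epochs            # linear warmup from 0 to peak
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    progress = min(progress, 1.0)
    return peak * 0.5 * (1.0 + math.cos(math.pi * progress))  # cosine decay to 0
```

With a batch size of 4096 the peak rate is 0.3 × 4096/256 = 4.8, which illustrates why linear scaling matters at the large batch sizes SimCLR favors.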

| Evaluation Protocol | Params (M) | FLOPs (G) | Feature Dim | Batch Size | Epoch Num | τ | K | Top-1 Acc (%) | Top-5 Acc (%) | Download |
|---|---|---|---|---|---|---|---|---|---|---|
| KNN | 24.62 | 1.31 | 128 | 512 | 500 | 0.5 | 200 | 89.1 | 99.6 | model (code: gc5k) |
| Linear | 23.52 | 1.30 | - | 512 | 100 | - | - | 92.0 | 99.8 | model (code: f7j2) |
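The KNN protocol classifies each test image from its K = 200 most similar training images (the --k option). A minimal NumPy sketch of such a classifier follows. Weighting each neighbour's vote by exp(similarity / τ) is an assumption, as is reusing τ = 0.5 from the table; knn_predict is a hypothetical name, and the input arrays stand in for encoder features of the CIFAR10 images.

```python
import numpy as np


def knn_predict(train_feats, train_labels, test_feats, num_classes, k=200, temperature=0.5):
    """Rank classes for each test sample by similarity-weighted kNN votes.

    Returns an (n_test, num_classes) array of class indices, best first.
    """
    train = train_feats / np.linalg.norm(train_feats, axis=1, keepdims=True)
    test = test_feats / np.linalg.norm(test_feats, axis=1, keepdims=True)
    sim = test @ train.T                                   # cosine similarity (n_test, n_train)
    k = min(k, train.shape[0])
    idx = np.argpartition(-sim, k - 1, axis=1)[:, :k]      # indices of the k most similar
    top_sim = np.take_along_axis(sim, idx, axis=1)
    weights = np.exp(top_sim / temperature)                # assumed weighting: closer votes count more
    neighbour_labels = train_labels[idx]                   # (n_test, k)
    scores = np.zeros((test.shape[0], num_classes))
    for c in range(num_classes):
        scores[:, c] = (weights * (neighbour_labels == c)).sum(axis=1)
    return np.argsort(-scores, axis=1)                     # column 0 is the top-1 prediction
```

Top-1 accuracy compares column 0 of the returned ranking with the true label; top-5 accuracy checks whether the label appears anywhere in the first five columns.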
