kaiwaehner / Hivemq Mqtt Tensorflow Kafka Realtime Iot Machine Learning Training Inference

Streaming Machine Learning at Scale from 100000 IoT Devices with HiveMQ, Apache Kafka and TensorFlow

If you just want to get the demo running in a few minutes, go to the quick start to set up the infrastructure (on GCP) and run the demo.

You can also check out the 20-minute video recording with a live demo: Streaming Machine Learning at Scale from 100000 IoT Devices with HiveMQ, Apache Kafka and TensorFlow.

There is also a blog post with more details about use cases for event streaming and streaming analytics in the automotive industry.

Motivation: Demo an IoT Scenario at Scale

You want to see an IoT example at huge scale? Not just 100 or 1000 devices producing data, but a really scalable demo with millions of messages per second from tens of thousands of devices?

This is the right demo for you! The demo shows how you can integrate with tens or hundreds of thousands of IoT devices and process the data in real time. The demo use case is predictive maintenance (i.e. anomaly detection) in a connected car infrastructure to predict engine failures.

Background: Cloud-Native MQTT and Kafka

If you need more background about the challenges of building a scalable IoT infrastructure, the differences and relation between MQTT and Apache Kafka, and best practices for realizing a cloud-native IoT infrastructure based on Kubernetes, check out the slide deck "Best Practices for Streaming IoT Data with MQTT and Apache Kafka".

Background: Digital Twin with Kafka

In addition to the predictive maintenance scenario with machine learning, we also implemented an example of a Digital Twin. More thoughts on this here: "Apache Kafka as Digital Twin for Open, Scalable, Reliable Industrial IoT (IIoT)".

In our example, we use Kafka as ingestion layer and MongoDB for storage and analytics. However, this is just one of various IoT architectures for building a digital twin with Apache Kafka.
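As a rough illustration of the digital twin idea, the sketch below folds a stream of sensor events into the latest known state per car. It is a plain-Python stand-in, not the project's Kafka and MongoDB implementation: the `apply_event` helper is hypothetical, the event values are made up, and only the field names `car` and `time` come from the test data schema in this README.

```python
def apply_event(twins, event):
    """Update the in-memory twin of one car with a new sensor event.

    `twins` maps a car id to the most recent event seen for that car.
    Events older than the stored state are ignored (assumed policy).
    """
    car_id = event["car"]
    current = twins.get(car_id)
    if current is None or event["time"] >= current["time"]:
        twins[car_id] = dict(event)  # copy so later mutations do not leak in
    return twins


# Hypothetical events, as they might arrive from a Kafka topic.
events = [
    {"car": "car1", "time": 100, "speed": 24.9},
    {"car": "car2", "time": 101, "speed": 10.0},
    {"car": "car1", "time": 105, "speed": 30.2},
    {"car": "car1", "time": 102, "speed": 27.0},  # late event, ignored
]

twins = {}
for event in events:
    apply_event(twins, event)

print(twins["car1"]["speed"])
```

In a real deployment the dictionary would be replaced by a store such as MongoDB, with one document per car that is overwritten on each update.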

Use Case: Anomaly Detection in Real Time for 100000+ Connected Cars

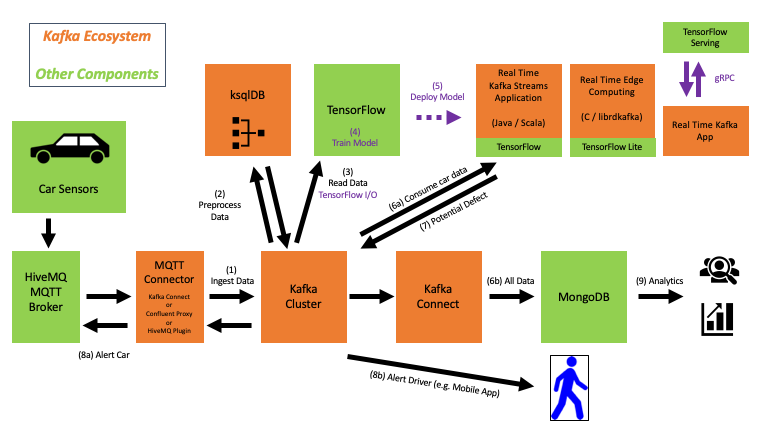

Streaming Machine Learning in Real Time at Scale with MQTT, Apache Kafka, TensorFlow and TensorFlow I/O:

- Data Integration

- Data Preprocessing

- Model Training

- Model Deployment

- Real Time Scoring

- Real Time Monitoring

This project implements a scenario where you can integrate with tens of thousands of (simulated) cars using a scalable MQTT platform and an event streaming platform. The demo trains new analytic models from streaming data - without the need for an additional data store - to do predictive maintenance on real-time sensor data from cars.

We built two different analytic models using different approaches:

- Unsupervised learning (used by default in our project): An autoencoder neural network is trained to detect anomalies without any labeled data.

- Supervised learning: An LSTM neural network is trained on labeled data to predict future sensor events.
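For the unsupervised approach, an autoencoder is commonly turned into an anomaly detector by thresholding its reconstruction error. The sketch below shows only that thresholding step in plain Python. It is an assumption about how such a detector can work, not the project's actual code: the function names, the "mean plus three standard deviations" rule and all numbers are illustrative.

```python
import statistics


def reconstruction_error(original, reconstructed):
    """Mean squared error between an input vector and its reconstruction."""
    return statistics.fmean(
        [(o - r) ** 2 for o, r in zip(original, reconstructed)]
    )


def fit_threshold(training_errors, num_stddevs=3.0):
    """Derive a threshold from errors observed on (mostly normal) training data."""
    mean = statistics.fmean(training_errors)
    stddev = statistics.pstdev(training_errors)
    return mean + num_stddevs * stddev


def is_anomaly(original, reconstructed, threshold):
    """Flag an event whose reconstruction error exceeds the threshold."""
    return reconstruction_error(original, reconstructed) > threshold


# Made-up reconstruction errors from a hypothetical training run.
threshold = fit_threshold([0.010, 0.012, 0.009, 0.011, 0.013])

# A well reconstructed event and a badly reconstructed one (made-up values).
print(is_anomaly([0.5, 0.9], [0.52, 0.88], threshold))
print(is_anomaly([0.5, 0.9], [0.1, 0.2], threshold))
```

The idea is that the autoencoder learns to reproduce normal sensor readings well, so an unusually large reconstruction error hints at an event unlike the training data.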

The digital twin implementation with Kafka and MongoDB is discussed on its own page, including implementation details and configuration examples.

Another example demonstrates how you can store all sensor data in a data lake - in this case GCP Google Cloud Storage (GCS) - for further analytics.

Architecture

We use HiveMQ as open source MQTT broker to ingest data from IoT devices and forward it in real time into an Apache Kafka cluster for preprocessing (using Kafka Streams / KSQL) and for model training and inference (using TensorFlow 2.0 and its TensorFlow I/O Kafka plugin).

We leverage additional enterprise components from HiveMQ and Confluent to allow easy operations, scalability and monitoring.

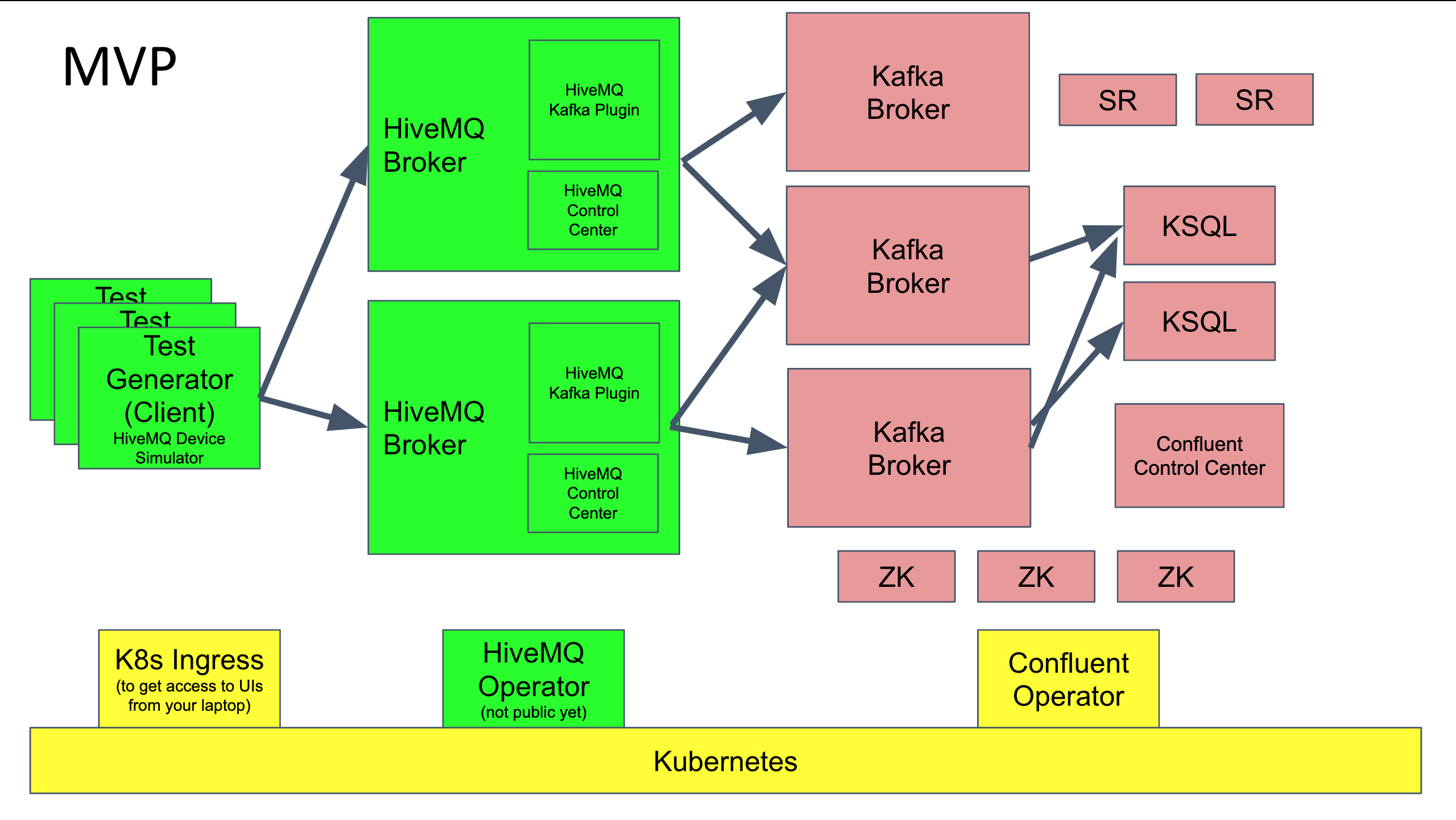

Here is the architecture of the MVP we created for setting up the MQTT and Kafka infrastructure on Kubernetes:

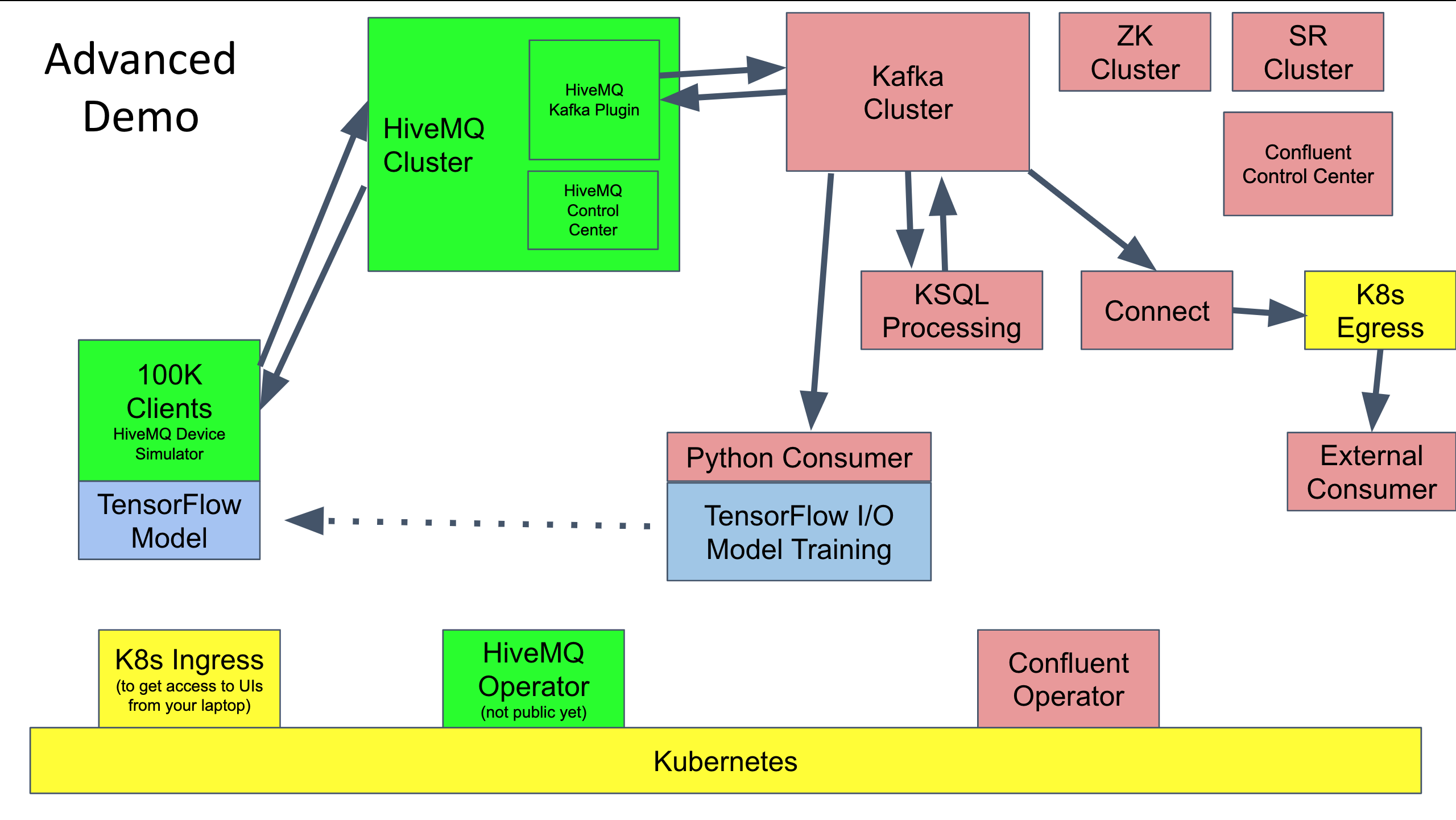

And this is the architecture of the final demo where we included TensorFlow and TF I/O's Kafka plugin for model training and model inference:

Deployment on Google Cloud Platform (GCP) and Google Kubernetes Engine (GKE)

We used Google Cloud Platform (GCP) and Google Kubernetes Engine (GKE) for several reasons. What you get here is not a fully-managed solution, but a mix of a cloud provider, a fully-managed Kubernetes service, and self-managed components for MQTT, Kafka, TensorFlow and client applications. Here is why we chose this setup:

- Get started quickly with two or three Terraform and shell commands to set up the whole infrastructure, while still being able to configure and change the details to play around and start your own POC for your specific problem and use case.

- Show how to build a cloud-native infrastructure which is flexible, scalable and elastic.

- Demonstrate an architecture which is applicable on different cloud providers like GCP, AWS, Azure, Alibaba, and on premises.

- Deep dive into the configuration to show different options and allow flexible S, L, XL or XXL setups for connectivity, throughput, data processing, model training and model inference.

- Understand the complexity of self-managed distributed systems (even with Kubernetes, Terraform and a cloud provider like GCP, you have to do a lot to make such a deployment reliable, scalable and elastic).

- Get motivated to check out fully-managed services for parts of your infrastructure if it makes sense, e.g. Google's Cloud Machine Learning Engine for model training and model inference using the TensorFlow ecosystem, or Confluent Cloud as a fully-managed Kafka as a Service offering with consumption-based pricing and SLAs.

Test Data - Car Sensors

We generate streaming test data at scale using a Car Data Simulator. The test data uses the Apache Avro format to leverage features like compression, schema versioning and Confluent features like Schema Registry or KSQL's schema inference.

You can either use some test data stored in the CSV file car-sensor-data.csv or, better, generate continuous streaming data using the included script (as described in the quick start). Check out the Avro schema here: cardata-v1.avsc.

Here is the schema and one row of the test data:

time,car,coolant_temp,intake_air_temp,intake_air_flow_speed,battery_percentage,battery_voltage,current_draw,speed,engine_vibration_amplitude,throttle_pos,tire_pressure_1_1,tire_pressure_1_2,tire_pressure_2_1,tire_pressure_2_2,accelerometer_1_1_value,accelerometer_1_2_value,accelerometer_2_1_value,accelerometer_2_2_value,control_unit_firmware

1567606196,car1,39.395103,34.53991,123.317406,0.82654595,246.12367,0.6586535,24.934872,2493.487,0.034893095,32,31,34,34,0.5295712,0.9600553,0.88389874,0.043890715,2000
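The header and sample row above can be loaded into a typed record with the Python standard library. This is a minimal sketch: the `parse_reading` helper is hypothetical, and the type conversion (int where possible, otherwise float, with `car` kept as a string) is an assumption that may differ from the types declared in cardata-v1.avsc.

```python
import csv
import io

# Header and sample row copied from the test data shown above.
HEADER = (
    "time,car,coolant_temp,intake_air_temp,intake_air_flow_speed,"
    "battery_percentage,battery_voltage,current_draw,speed,"
    "engine_vibration_amplitude,throttle_pos,tire_pressure_1_1,"
    "tire_pressure_1_2,tire_pressure_2_1,tire_pressure_2_2,"
    "accelerometer_1_1_value,accelerometer_1_2_value,"
    "accelerometer_2_1_value,accelerometer_2_2_value,control_unit_firmware"
)
ROW = (
    "1567606196,car1,39.395103,34.53991,123.317406,0.82654595,246.12367,"
    "0.6586535,24.934872,2493.487,0.034893095,32,31,34,34,0.5295712,"
    "0.9600553,0.88389874,0.043890715,2000"
)


def parse_reading(header_line, row_line):
    """Parse one CSV row into a dict keyed by the column names."""
    names = next(csv.reader(io.StringIO(header_line)))
    values = next(csv.reader(io.StringIO(row_line)))
    if len(names) != len(values):
        raise ValueError("header and row have different numbers of fields")
    record = {}
    for name, value in zip(names, values):
        if name == "car":
            record[name] = value  # the car identifier stays a string
            continue
        try:
            record[name] = int(value)  # assumed: whole numbers become int
        except ValueError:
            record[name] = float(value)  # everything else becomes float
    return record


reading = parse_reading(HEADER, ROW)
print(reading["car"], reading["speed"], reading["tire_pressure_1_1"])
```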

Streaming Ingestion and Model Training with Kafka and TensorFlow-IO

Typically, analytic models are trained in batch mode where you first ingest all historical data in a data store like HDFS, AWS S3 or GCS. Then you train the model using a framework like Spark MLlib, TensorFlow or Google ML.

TensorFlow I/O is a component of the TensorFlow framework which allows native integration with various technologies.

One of these integrations is tensorflow_io.kafka which allows streaming ingestion into TensorFlow from Kafka WITHOUT the need for an additional data store! This significantly simplifies the architecture and reduces development, testing and operations costs.

Yong Tang, member of the SIG TensorFlow I/O team, gave a great presentation about this at Kafka Summit 2019 in New York (video and slide deck available for free).

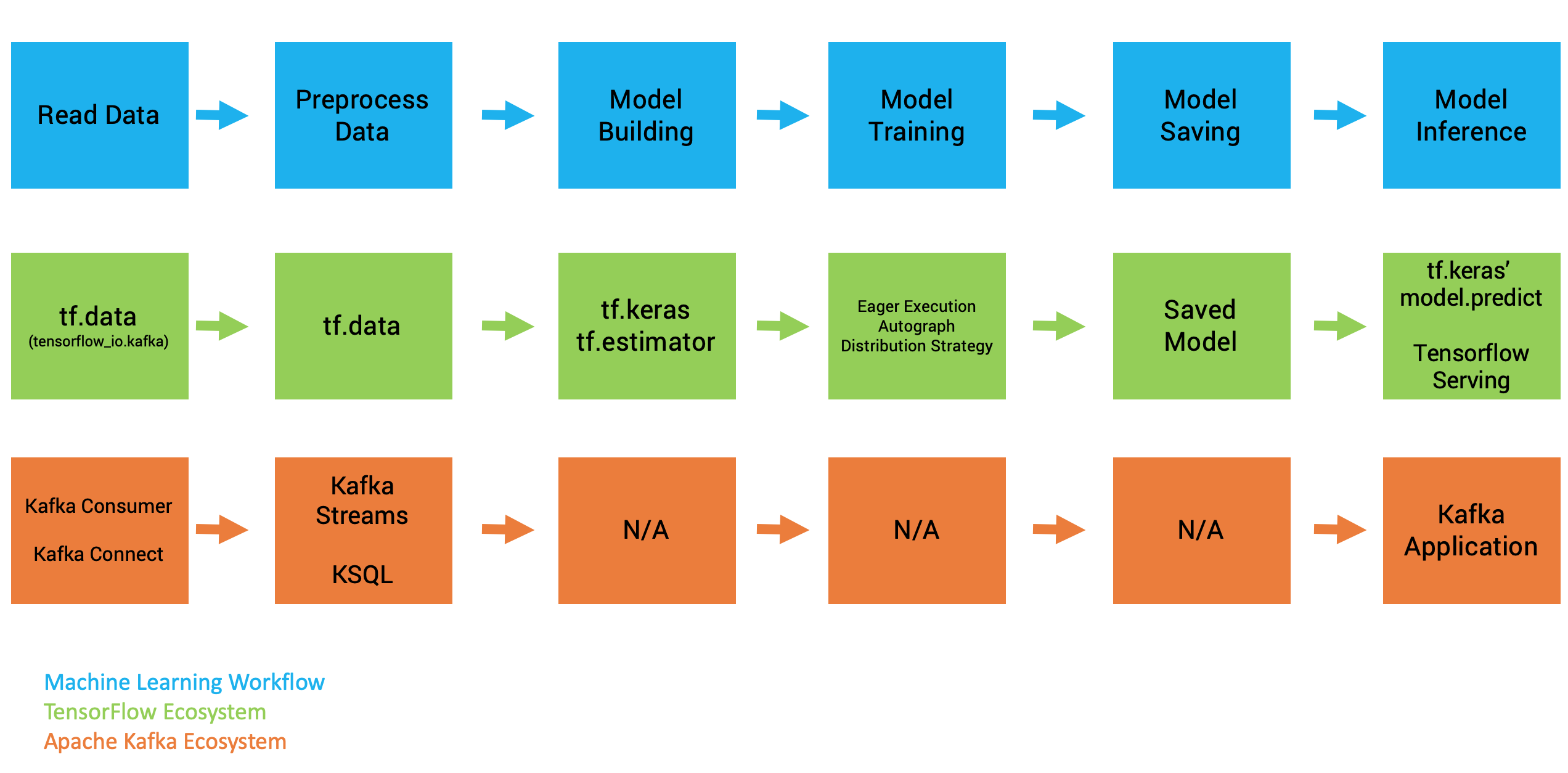

You can pick and choose the right components from the Apache Kafka and TensorFlow ecosystems to build your own machine learning infrastructure for data integration, data processing, model training and model deployment:

This demo will do the following steps:

- Consume streaming data from 100000 (simulated) cars, forwarding it via HiveMQ MQTT Broker to Kafka consumers

- Ingest, store and scale the IoT data with Apache Kafka and Confluent Platform

- Preprocess the data with KSQL (filter, transform)

- Ingest the data into TensorFlow (tf.data and tensorflow-io + Kafka plugin)

- Build, train and save the model (TensorFlow 2.0 API)

- Store the trained model in Google Cloud Storage object store and load it dynamically from a Kafka client application

- Deploy the model in two variants: in a simple Python application using TensorFlow I/O's inference capabilities and within a powerful Kafka Streams application for embedded real time scoring

Optional steps (nice to have)

- Show IoT-specific features with HiveMQ tool stack

- Deploy the model via TensorFlow Serving

- Some kind of A/B testing (maybe with Istio Service Mesh)

- Re-train the model and update the Kafka Streams application (via sending the new model to a Kafka topic)

- Monitoring of model training (via TensorBoard) and model deployment / inference (via some kind of Kafka integration + dashboard technology)

- Confluent Cloud for Kafka as a Service (-> Focus on business problems, not running infrastructure)

- Enhance demo with C / librdkafka clients and TensorFlow Lite for edge computing

- Add Confluent Tiered storage as cost-efficient long-term storage

- TODO - other ideas?

Why is Streaming Machine Learning so awesome?

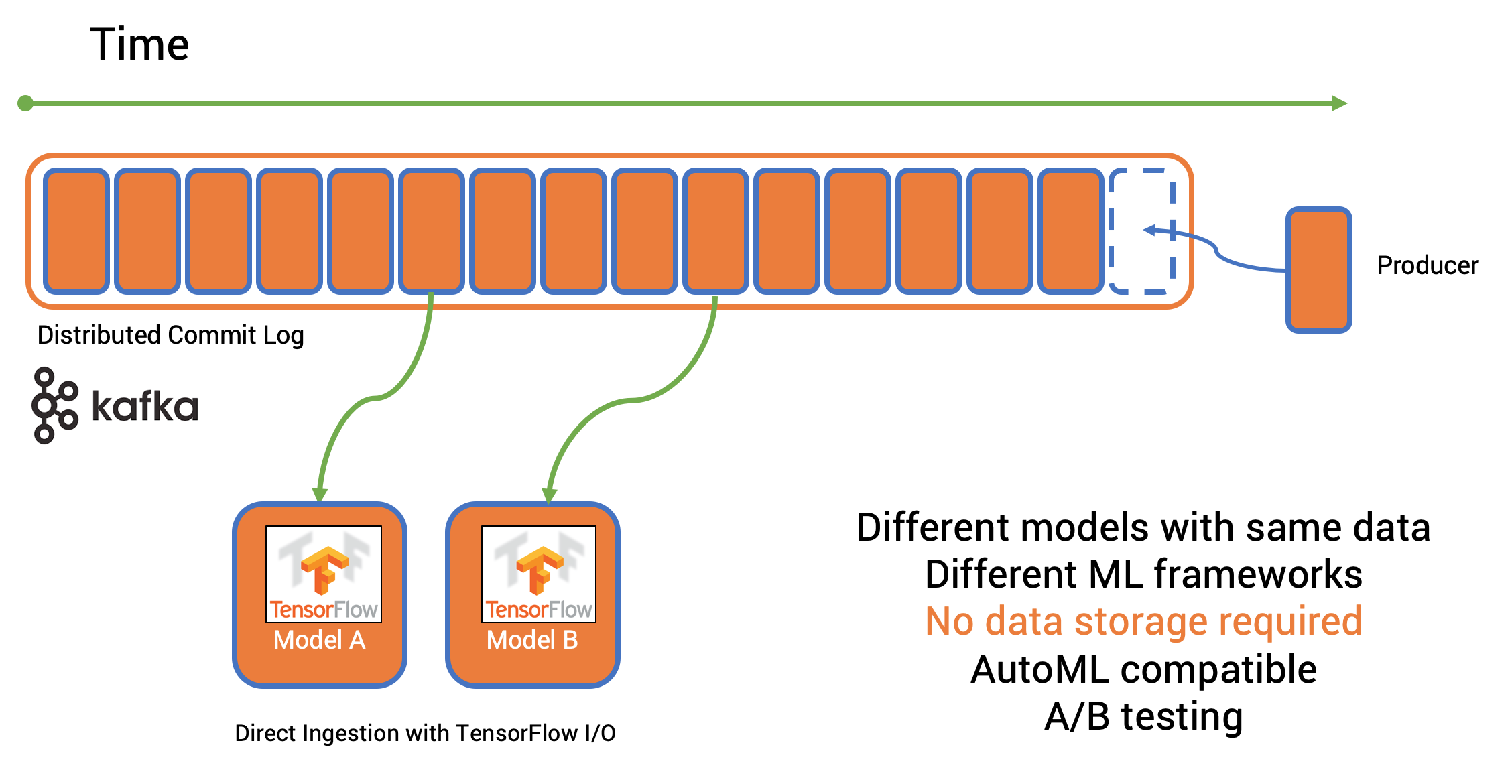

Again, you don't need another data store anymore! Just ingest the data directly from the distributed commit log of Kafka:

This greatly simplifies your architecture as you don't need another data store in the middle. In conjunction with Tiered Storage, a Confluent add-on to Apache Kafka, you can build a cost-efficient long-term storage (aka data lake) with Kafka. No need for HDFS or S3 as additional storage. See the blog post "Streaming Machine Learning with Tiered Storage and Without a Data Lake" for more details.

Be aware: This is still NOT Online Training

Streaming ingestion for model training is fantastic. You don't need a data store anymore. This simplifies the architecture and reduces operations and development costs.

However, one common misunderstanding has to be clarified, as this question comes up every time you talk about TensorFlow I/O and Apache Kafka: As long as machine learning / deep learning frameworks and algorithms expect data in batches, you cannot achieve real online training (i.e. re-training / optimizing the model with each new input event).

Only a few algorithms and implementations that support online training are available today, such as online clustering.

Thus, even with TensorFlow I/O and streaming ingestion via Apache Kafka, you still do batch training. Though, you can configure and optimize these batches to fit your use case. Additionally, only Kafka allows ingestion at large scale for use cases like connected cars or assembly lines in factories. You cannot build a scalable, reliable, mission-critical ML infrastructure just with Python.
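The batching described above can be sketched in plain Python. The helpers below are hypothetical and only mimic the idea: events arrive one by one, but the training step always receives a batch of a configurable size. In the actual demo this role is played by the TensorFlow data pipeline reading from Kafka, which is not shown here.

```python
from itertools import islice


def batched(stream, batch_size):
    """Group an event stream into lists of at most `batch_size` events."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return  # stream exhausted
        yield batch


def train_on_stream(stream, batch_size, train_step):
    """Call `train_step` once per batch and return the number of steps run."""
    steps = 0
    for batch in batched(stream, batch_size):
        train_step(batch)  # the model sees a whole batch, never a single event
        steps += 1
    return steps


# Stand-in for a stream of sensor events and for a real training step.
seen_batches = []
steps = train_on_stream(range(7), batch_size=3, train_step=seen_batches.append)
print(steps, seen_batches)
```

Choosing a smaller batch size moves the behavior closer to per-event updates, while a larger one trades freshness for throughput.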

The combination of TensorFlow I/O and Apache Kafka is a great step closer to real time training of analytic models at scale!

I posted many articles and videos about this discussion. Get started with How to Build and Deploy Scalable Machine Learning in Production with Apache Kafka and check out my other resources if you want to learn more.

Requirements and Setup

Full Live Demo for End-to-End MQTT-Kafka Integration

We have prepared a Terraform script to deploy the complete environment in Google Kubernetes Engine (GKE). This includes:

- Kafka Cluster: Apache Kafka, KSQL, Schema Registry, Control Center

- MQTT Cluster: HiveMQ Broker, Kafka Plugin, Test Data Generator

- Streaming Machine Learning (Model Training and Inference): TensorFlow I/O and Kafka Plugin

- Monitoring Infrastructure: Prometheus, Grafana, HiveMQ Control Center, Confluent Control Center

The setup is pretty straightforward. No previous experience is required to get the demo running. You just need to install some CLIs on your laptop (gcloud, kubectl, helm, terraform) and then run two or three script commands as described in the quick start guide.

With the default configuration, the demo starts at small scale. This is sufficient to show an impressive demo. It also reduces cost and enables free usage without the need for commercial licenses. You can also try it out at extreme scale (100000+ IoT connections). This option is also described in the quick start.

Afterwards, you execute one single command to set up the infrastructure and one command to generate test data. Of course, you can configure everything to your needs (like the cluster size, test data, etc).

Follow the instructions in the quick start to set up the cluster. Please let us know if you have any problems setting up the demo so that we can fix them!

Streaming Machine Learning with Kafka and TensorFlow

If you are just interested in the "Streaming ML" part for model training and model inference using Python, Kafka and TensorFlow I/O, check out:

Python Application leveraging Apache Kafka and TensorFlow for Streaming Model Training and Inference. python-scripts/LSTM-TensorFlow-IO-Kafka/README.md

You can also check out two simple examples which use Kafka Python clients to produce data to Kafka topics and then consume the streaming data directly with TensorFlow I/O for streaming ML without an additional data store:

- Streaming ingestion of MNIST data into TensorFlow via Kafka for image recognition.

- Autoencoder for anomaly detection on sensor data ingested into TensorFlow via Kafka. Producer (Python Client) and Consumer (TensorFlow I/O Kafka Plugin) + Model Training.