IsaacChanghau / Neural_sequence_labeling


Neural Sequence Labeling

A TensorFlow implementation of a neural sequence labeling model that can tackle sequence labeling tasks such as Part-of-Speech (POS) Tagging, Chunking, Named Entity Recognition (NER), Punctuation Restoration, Sentence Boundary Detection and Spoken Language Understanding.

To convert a file to UTF-8 on Mac OS X: iconv -f <other_format> -t utf-8 file > new_file

To convert a file to UTF-8 on Linux (Ubuntu 16.04): iconv -f <other_format> -t utf-8 file -o new_file
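If iconv is not available, the same re-encoding can be done from Python. This is a minimal sketch that assumes the source encoding is known; `to_utf8` is a hypothetical helper, not part of this repository, and `latin-1` is only an illustrative default.

```python
from pathlib import Path


def to_utf8(src: str, dst: str, src_encoding: str = "latin-1") -> None:
    """Re-encode `src` from `src_encoding` to UTF-8 and save it as `dst`.

    Works on raw bytes, so line endings are kept as-is (like iconv).
    """
    text = Path(src).read_bytes().decode(src_encoding)  # decode with the original encoding
    Path(dst).write_bytes(text.encode("utf-8"))          # write back out as UTF-8
```

Usage mirrors the iconv call: to_utf8("file", "new_file", "latin-1").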

Tasks

All the models are trained on a single GeForce GTX 1080 Ti GPU.

Part-Of-Speech (POS) Tagging Task

Experiment on the CoNLL-2003 POS dataset (45 target annotations), example:

EU rejects German call to boycott British lamb .

NNP VBZ JJ NN TO VB JJ NN .

This is a typical sequence labeling task, and current SOTA POS tagging methods have largely solved it: they work rapidly and reliably, with per-token accuracies slightly over 97% (ref.). So for this task I simply follow the structure of the NER model to build a POS tagger, which achieves good performance.

Similarly, all the configurations are in train_conll_pos_blstm_cnn_crf.py and the model is built in blstm_cnn_crf_model.py.

Simply run python3 train_conll_pos_blstm_cnn_crf.py to start a training process.
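The POS, Chunking and NER experiments all read CoNLL-2003 files. In these files each non-empty line holds a token followed by its POS, chunk and NER tags. Sentences are separated by blank lines, and each document starts with a -DOCSTART- line. The following is a minimal reader sketch that assumes this four-column layout. `read_conll` and its `column` argument are illustrative only and are not the repository's own loader.

```python
from typing import Iterable, List, Tuple


def read_conll(lines: Iterable[str], column: int = 1) -> List[Tuple[List[str], List[str]]]:
    """Group CoNLL-2003 lines into (words, tags) sentences.

    column=1 selects POS tags, 2 chunk tags, 3 NER tags.
    """
    sentences, words, tags = [], [], []
    for line in lines:
        line = line.strip()
        if not line or line.startswith("-DOCSTART-"):
            # a blank line or a document marker closes the current sentence
            if words:
                sentences.append((words, tags))
                words, tags = [], []
            continue
        fields = line.split()
        words.append(fields[0])
        tags.append(fields[column])
    if words:  # flush the last sentence if there is no trailing blank line
        sentences.append((words, tags))
    return sentences
```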

Chunking Task

Experiment on the CoNLL-2003 Chunk dataset (21 target annotations), example:

EU rejects German call to boycott British lamb .

B-NP B-VP B-NP I-NP B-VP I-VP B-NP I-NP O

This task is also similar to the NER task below, so the Chunking model also follows the NER structure. All the configurations are in train_conll_chunk_blstm_cnn_crf.py and the model is built in blstm_cnn_crf_model.py.

To achieve SOTA results, the parameters need to be carefully tuned.
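In the chunk example above, B- marks where a phrase begins and I- marks where it continues. Chunk and NER F1 scores are computed over whole spans, not individual tags. This minimal sketch turns a tag sequence into (type, start, end) spans. `bio_to_spans` is a hypothetical helper. It treats a stray I- tag (one not preceded by a tag of the same type) as the start of a new span, which is a common convention.

```python
from typing import List, Tuple


def bio_to_spans(tags: List[str]) -> List[Tuple[str, int, int]]:
    """Convert B-/I-/O tags into (type, start, end) spans; `end` is exclusive."""
    spans = []
    cur_type, start = None, 0
    for i, tag in enumerate(tags + ["O"]):  # a sentinel "O" closes any span left open
        prefix, _, typ = tag.partition("-")  # split at the first hyphen only
        continues = prefix == "I" and typ == cur_type
        if cur_type is not None and not continues:
            spans.append((cur_type, start, i))  # close the currently open span
            cur_type = None
        if prefix == "B" or (prefix == "I" and not continues):
            cur_type, start = typ, i  # open a new span (a stray I- opens one too)
    return spans
```

For the chunk example, words[start:end] for each span yields "EU", "rejects", "German call", "to boycott" and "British lamb".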

Named Entity Recognition (NER) Task

Experiment on the CoNLL-2003 NER dataset, standard BIO2 annotation format (9 target annotations), example:

Stanford University located at California .

B-ORG I-ORG O O B-LOC O

To tackle this task, I build a model that follows the structure Word Embeddings + Char Embeddings (RNNs/CNNs) + RNNs + CRF (baseline). Several variant modules, as well as attention mechanisms, are also available. All the configurations are in train_conll_ner_blstm_cnn_crf.py and the model is built in blstm_cnn_crf_model.py.
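At inference time, the CRF layer picks the highest-scoring tag sequence. It does this from the per-token scores (the logits tensor in the training log, shape [batch, time, num_tags]) and a learned tag-transition matrix. Below is a minimal pure-Python Viterbi sketch for one sentence. It assumes a path's score is the sum of its emission scores plus the transition scores between consecutive tags, with start/end transitions omitted. It illustrates the technique only and is not the repository's TensorFlow code.

```python
from typing import List


def viterbi_decode(emissions: List[List[float]], transitions: List[List[float]]) -> List[int]:
    """Return the best tag-index sequence for one sentence.

    emissions[t][j]: score of tag j at token t.
    transitions[i][j]: score of moving from tag i to tag j.
    """
    num_tags = len(transitions)
    score = list(emissions[0])  # best score of any path ending in each tag at t=0
    backpointers = []
    for t in range(1, len(emissions)):
        new_score, pointers = [], []
        for j in range(num_tags):
            # best previous tag i for the current tag j
            best_i = max(range(num_tags), key=lambda i: score[i] + transitions[i][j])
            pointers.append(best_i)
            new_score.append(score[best_i] + transitions[best_i][j] + emissions[t][j])
        score = new_score
        backpointers.append(pointers)
    # start from the best final tag and follow the back-pointers
    best = max(range(num_tags), key=lambda j: score[j])
    path = [best]
    for pointers in reversed(backpointers):
        best = pointers[best]
        path.append(best)
    return path[::-1]
```

With a strongly negative O→I transition, the decoder avoids the invalid O, I-X sequences that a per-token argmax could produce. This is the main reason to put a CRF on top of the RNN outputs.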

The SOTA performance (F1 score, ref) is 91.21, achieved by End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF. The baseline model therefore follows a structure similar to that paper's, but with different parameter settings.

To train the model and run inference, run python3 train_conll_ner_blstm_cnn_crf.py directly. The example below shows a training run of the baseline model, which achieves F1 Score = 91.27 on the test set, similar to the SOTA result.

Compared with End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF, the baseline model converges much faster because it has many more parameters (a larger embedding dimension and more RNN hidden units). However, this also makes it more prone to overfitting.
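The parameter argument can be checked with the standard count for one LSTM layer: 4·(h·(d + h) + h) for input size d and h hidden units (four gates, each with input weights, recurrent weights and a bias, no peepholes). The rough sketch below uses the shapes in the training log: a 500-d word+char input and a 600-d Bi-LSTM output. It reads the output as a single layer with 300 units per direction. The single layer and the absence of peepholes are assumptions.

```python
def lstm_params(input_dim: int, hidden: int) -> int:
    """Trainable parameters of one standard LSTM layer (4 gates with biases, no peepholes)."""
    return 4 * (hidden * (input_dim + hidden) + hidden)


def bilstm_params(input_dim: int, hidden: int) -> int:
    """A bidirectional layer holds an independent forward and backward LSTM."""
    return 2 * lstm_params(input_dim, hidden)


# Shapes from the training log: 500-d input, 600-d output read as 2 x 300 units.
print(bilstm_params(500, 300))  # → 1922400
```

The same functions can be evaluated with the input and hidden sizes that a reference model reports, to compare the two models' sizes directly.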

```
Build models...
word embedding shape: [None, None, 300]
chars representation shape: [None, None, 200]
word and chars embedding shape: [None, None, 500]
rnn output shape: [None, None, 600]
logits shape: [None, None, 9]
Start training...
...
703/703 [==============================] - 95s - Global Step: 24605 - Train Loss: 0.0246
Valid dataset -- accuracy: 98.89, precision: 94.41, recall: 94.39, FB1: 94.40
Test dataset -- accuracy: 98.18, precision: 91.23, recall: 91.31, FB1: 91.27
-- new BEST score on test dataset: 91.27
```
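FB1 in the log is the harmonic mean of precision and recall, F1 = 2PR / (P + R). It is computed over entity spans, whereas accuracy is reported per token. A quick arithmetic check against the logged values:

```python
def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, in the same units as the inputs (e.g. percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(round(f1(94.41, 94.39), 2))  # valid set → 94.4
print(round(f1(91.23, 91.31), 2))  # test set → 91.27
```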

Some SOTA NER F1 scores on the CoNLL-2003 test set:

| Model | F1 Score (CoNLL-2003 test set) |
|---|---|
| Bidirectional LSTM-CRF Models for Sequence Tagging | 90.10 |
| Neural Architectures for Named Entity Recognition | 90.94 |
| Multi-Task Cross-Lingual Sequence Tagging from Scratch | 91.20 |
| End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF | 91.21 |
| The Baseline Model | 91.27 (+0.21, -0.22) |

Some reported F1 scores higher than 91.21 are not shown here. The authors of End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF note that those results are not comparable with their work because they use larger datasets for training.

The variant modules include Stack Bidirectional RNN (multi-layer), Multi-RNN Cells (multi-layer), Luong/Bahdanau Attention Mechanism, Self-attention Mechanism, Residual Connection, Layer Normalization and so on. However, none of these modifications improved performance significantly (i.e., by 1.5 F1 or more), and some performed even worse than the baseline model. The variants are easy to try: modify the config settings in train_conll_ner_blstm_cnn_crf.py and train.
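Of the variants listed, self-attention is the least self-explanatory. Each token's output is a weighted average of all the tokens' vectors, with weights given by a softmax over scaled dot products. Below is a minimal pure-Python sketch of scaled dot-product self-attention over one sentence. It assumes no learned query/key/value projections and no padding mask, and the repository's module may differ on both counts.

```python
import math
from typing import List


def self_attention(x: List[List[float]]) -> List[List[float]]:
    """Scaled dot-product self-attention with identity Q/K/V projections.

    x: one sentence as a list of token vectors of dimension d.
    Returns a list of the same shape.
    """
    d = len(x[0])
    out = []
    for q in x:
        # similarity of this token to every token, scaled by sqrt(d)
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        m = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # weighted average of all token vectors
        out.append([sum(w * v[i] for w, v in zip(weights, x)) for i in range(d)])
    return out
```

The output has the same shape as the input. This means that a residual connection (x + attention(x)) followed by layer normalization, two other listed variants, can be stacked on top without reshaping.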

Spoken Language Understanding Task

Experiment on the MEDIA dataset (French), standard BIO2 annotation format (138 target annotations), example:

réserver dans l' un de ces hôtels

B-command-tache O B-nombre I-nombre B-lienRef-coRef I-lienRef-coRef B-objetBD

The current best F1 score is 86.4, slightly lower than the SOTA F1 result of 86.95.

Details of the configurations and the model are in train_media_multi_attention.py and multi_attention_model.py, respectively.
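MEDIA labels such as B-lienRef-coRef contain hyphens inside the concept name, so the B-/I- prefix has to be split off at the first hyphen only. Below is a minimal sketch of a BIO2 well-formedness check under that convention: every I- tag must continue a B- or I- tag of the same concept. `is_valid_bio2` is a hypothetical helper.

```python
from typing import List


def is_valid_bio2(tags: List[str]) -> bool:
    """True if every I-X tag directly follows B-X or I-X (the BIO2 / IOB2 constraint)."""
    prev_type = None  # concept of the previous tag; None after "O"
    for tag in tags:
        if tag == "O":
            prev_type = None
            continue
        prefix, _, typ = tag.partition("-")  # "B-lienRef-coRef" -> ("B", "-", "lienRef-coRef")
        if prefix not in ("B", "I") or not typ:
            return False  # malformed tag
        if prefix == "I" and typ != prev_type:
            return False  # I- without a matching B-/I- right before it
        prev_type = typ
    return True
```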

Punctuation Restoration/Sentence Boundary Detection Task

Experiment on the Transcripts of TED Talks (IWSLT) dataset, example:

```
$ raw sentence: I'm a savant, or more precisely, a high-functioning autistic savant.
$ processed annotation format:
$ i 'm a savant or more precisely a high-functioning autistic savant
$ O O O COMMA O O COMMA O O O PERIOD
```
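The conversion from the raw sentence to the processed format can be sketched as three steps:

1. Lowercase the sentence.
2. Tokenize it.
3. Attach each punctuation mark as a label on the word before it.

The sketch assumes that only `,`, `.` and `?` are predicted (matching COMMA, PERIOD and QUESTIONMARK in the evaluation output) and that all other characters are dropped. It uses a deliberately simple regex tokenizer that splits off clitics such as 'm. `to_punct_labels` is hypothetical, and the preprocessing actually used for the converted IWSLT data may differ.

```python
import re
from typing import List, Tuple

PUNCT_LABELS = {",": "COMMA", ".": "PERIOD", "?": "QUESTIONMARK"}
# a clitic such as 'm, a word (hyphenated words kept whole), or a target punctuation mark
TOKEN_RE = re.compile(r"'[a-z]+|[a-z0-9]+(?:-[a-z0-9]+)*|[,.?]")


def to_punct_labels(sentence: str) -> Tuple[List[str], List[str]]:
    """Turn raw text into (words, labels), labelling each word with the punctuation after it."""
    words, labels = [], []
    for tok in TOKEN_RE.findall(sentence.lower()):
        if tok in PUNCT_LABELS:
            if words:  # the punctuation mark labels the preceding word
                labels[-1] = PUNCT_LABELS[tok]
        else:
            words.append(tok)
            labels.append("O")
    return words, labels
```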

To deal with this task, I build an attention-based model that follows the structure Word Embeddings + Char Embeddings (RNNs/CNNs) + Densely Connected Bi-LSTM + Attention Mechanism + CRF. All the configurations are in train_punct_attentive_model.py and the model is built in punct_attentive_model.py.
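In a densely connected stack, each Bi-LSTM layer receives the original input concatenated with the outputs of all earlier layers, not just the previous layer's output. The framework-free sketch below shows only that wiring. Each `layer` is a hypothetical function mapping a sentence (one feature list per token) to new per-token features. The repository's number of layers and their widths are not stated here, and returning the last layer's output is an assumption.

```python
from typing import Callable, List

Seq = List[List[float]]  # one sentence: a feature vector per token


def densely_connected(x: Seq, layers: List[Callable[[Seq], Seq]]) -> Seq:
    """Run `layers` so that layer k sees [input; out_1; ...; out_(k-1)] for every token."""
    features = [list(tok) for tok in x]  # running per-token concatenation
    out = x
    for layer in layers:
        out = layer(features)
        # append this layer's output to every token's feature vector
        features = [f + o for f, o in zip(features, out)]
    return out  # assumption: the last layer's output feeds the attention layer
```

Each layer's input width grows by the width of every earlier layer's output. This is the usual trade-off of dense connectivity: lower layers' features are reused directly, at the cost of larger input matrices further up the stack.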

To train the model, run python3 train_punct_attentive_model.py directly. The example below shows a training run of the attentive model, which achieves an overall F1 score of 68.9 on the reference transcripts, slightly higher than the SOTA results.

```
Build models...
word embedding shape: [None, None, 300]
chars representation shape: [None, None, 100]
word and chars concatenation shape: [None, None, 400]
densely connected bi_rnn output shape: [None, None, 600]
attention output shape: [None, None, 300]
logits shape: [None, None, 4]
Start training...
...
Epoch 5/30:
349/349 [==============================] - 829s - Global Step: 1745 - Train Loss: 28.7219
Evaluate on data/raw/LREC_converted/ref.txt:
----------------------------------------------
PUNCTUATION      PRECISION  RECALL  F-SCORE
,COMMA           64.8       59.6    62.1
.PERIOD          73.5       77.0    75.2
?QUESTIONMARK    70.8       73.9    72.3
----------------------------------------------
Overall          69.4       68.4    68.9
Err: 5.96%
SER: 44.8%
Evaluate on data/raw/LREC_converted/asr.txt:
----------------------------------------------
PUNCTUATION      PRECISION  RECALL  F-SCORE
,COMMA           49.7       53.5    51.5
.PERIOD          67.5       70.9    69.2
?QUESTIONMARK    51.4       54.3    52.8
----------------------------------------------
Overall          58.4       62.1    60.2
Err: 8.23%
SER: 64.4%
```
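The per-class scores in these reports compare the predicted punctuation label after each word with the reference label. O (no punctuation) is not scored as a class. The minimal sketch below shows that counting. It assumes a prediction is correct only when it matches the reference label at the same position, and that "Overall" is a micro-average over all punctuation classes. It is not the evaluation script that produced the output above, and it does not reproduce the Err and SER figures.

```python
from collections import Counter
from typing import Dict, List, Tuple


def punct_scores(gold: List[str], pred: List[str]) -> Dict[str, Tuple[float, float, float]]:
    """Per-class and micro-averaged (precision, recall, F1) in percent; "O" is not scored."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if p != "O":
            if p == g:
                tp[p] += 1  # correct punctuation mark
            else:
                fp[p] += 1  # predicted a mark that is not in the reference
        if g != "O" and g != p:
            fn[g] += 1  # reference mark that was missed or mislabelled

    def prf(t: int, false_pos: int, false_neg: int) -> Tuple[float, float, float]:
        prec = 100.0 * t / (t + false_pos) if t + false_pos else 0.0
        rec = 100.0 * t / (t + false_neg) if t + false_neg else 0.0
        f = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        return prec, rec, f

    classes = sorted(set(tp) | set(fp) | set(fn))
    scores = {c: prf(tp[c], fp[c], fn[c]) for c in classes}
    scores["Overall"] = prf(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    return scores
```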

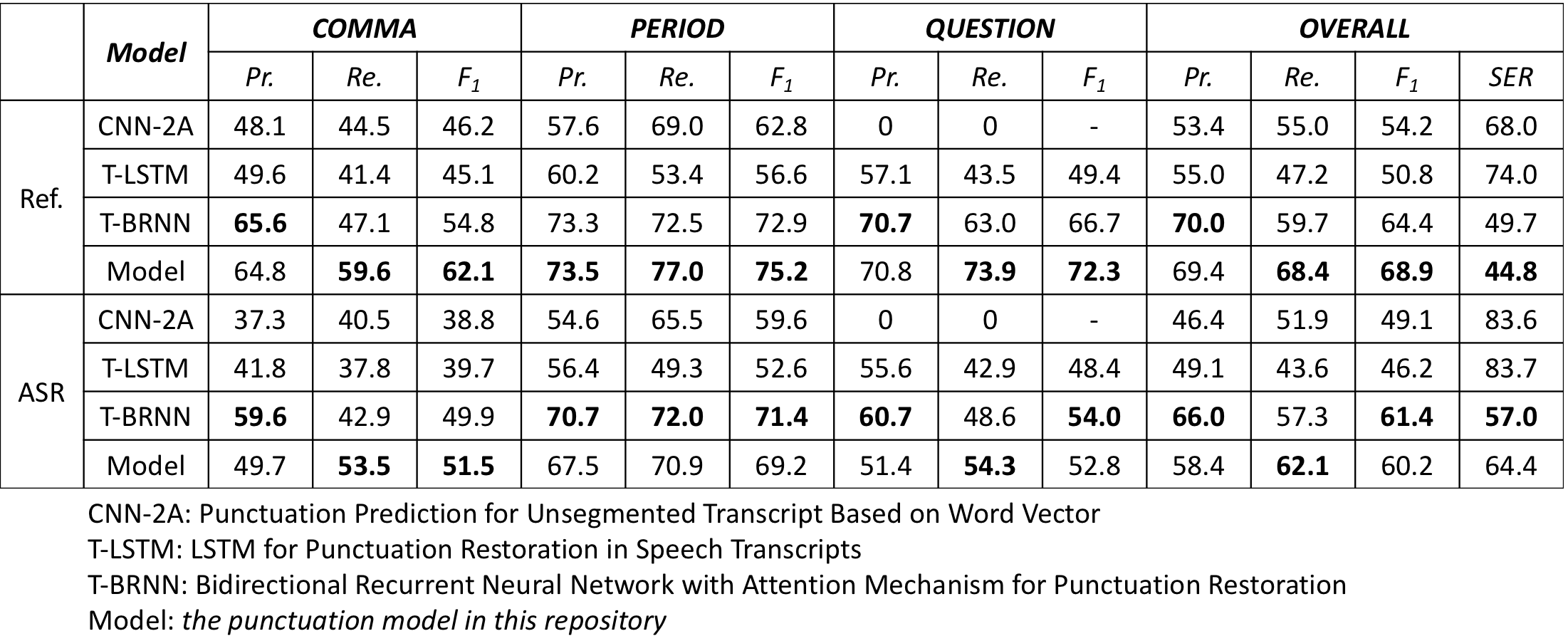

Some SOTA scores on English reference transcripts (ref) and ASR output testset (asr) from IWSLT:

The overall F1 score of the attentive model is 67.6~69.5 on the ref dataset and 60.2~61.5 on the asr dataset.

Resources

Datasets

- CoNLL 2003 POS, Chunking and NER datasets.

- MEDIA dataset, details in Is it time to switch to Word Embedding and Recurrent Neural Networks for Spoken Language Understanding?.

- Transcripts of TED Talks (IWSLT) dataset for Punctuation Restoration or Sentence Boundary Detection, details in Bidirectional Recurrent Neural Network with Attention Mechanism for Punctuation Restoration. I also provide the converted dataset built from the raw dataset, which can be used to train a model directly.

Embeddings and Evaluation Script

- GloVe Embeddings (6B, 42B, 840B)

- minimaxir: GloVe 840B 300d Char Embeddings

- Word2Vec Google News 300d Embeddings

- AdolfVonKleist/rnn-slu/rnn-slu/CoNLLeval.py

Papers

- Named Entity Recognition with Bidirectional LSTM-CNNs

- Bidirectional LSTM-CRF Models for Sequence Tagging

- End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF

- Neural Architectures for Named Entity Recognition

- Multi-Task Cross-Lingual Sequence Tagging from Scratch

- Part-of-Speech Tagging from 97% to 100%: Is It Time for Some Linguistics?

- Part-of-Speech Tagging with Bidirectional Long Short-Term Memory Recurrent Neural Network

- Bidirectional Recurrent Neural Network with Attention Mechanism for Punctuation Restoration
