lucidrains / Perceiver Pytorch

License: MIT

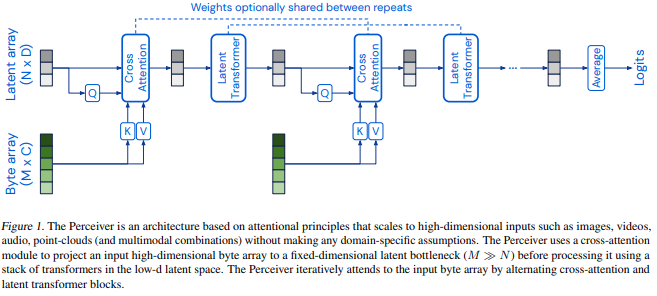

Implementation of Perceiver, General Perception with Iterative Attention, in Pytorch

Stars: ✭ 130

Programming Languages

python


Projects that are alternatives to, or similar to, Perceiver Pytorch

Linformer Pytorch

My take on a practical implementation of Linformer for Pytorch.

Stars: ✭ 239 (+83.85%)

Mutual labels: artificial-intelligence, attention-mechanism

Bottleneck Transformer Pytorch

Implementation of Bottleneck Transformer in Pytorch

Stars: ✭ 408 (+213.85%)

Mutual labels: artificial-intelligence, attention-mechanism

Timesformer Pytorch

Implementation of TimeSformer from Facebook AI, a pure attention-based solution for video classification

Stars: ✭ 225 (+73.08%)

Mutual labels: artificial-intelligence, attention-mechanism

Dalle Pytorch

Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch

Stars: ✭ 3,661 (+2716.15%)

Mutual labels: artificial-intelligence, attention-mechanism

Se3 Transformer Pytorch

Implementation of SE3-Transformers for Equivariant Self-Attention, in Pytorch. This specific repository is geared towards integration with eventual Alphafold2 replication.

Stars: ✭ 73 (-43.85%)

Mutual labels: artificial-intelligence, attention-mechanism

X Transformers

A simple but complete full-attention transformer with a set of promising experimental features from various papers

Stars: ✭ 211 (+62.31%)

Mutual labels: artificial-intelligence, attention-mechanism

Alphafold2

To eventually become an unofficial Pytorch implementation / replication of Alphafold2, as details of the architecture get released

Stars: ✭ 298 (+129.23%)

Mutual labels: artificial-intelligence, attention-mechanism

Sinkhorn Transformer

Sinkhorn Transformer - Practical implementation of Sparse Sinkhorn Attention

Stars: ✭ 156 (+20%)

Mutual labels: artificial-intelligence, attention-mechanism

Global Self Attention Network

A Pytorch implementation of Global Self-Attention Network, a fully-attention backbone for vision tasks

Stars: ✭ 64 (-50.77%)

Mutual labels: artificial-intelligence, attention-mechanism

Isab Pytorch

An implementation of (Induced) Set Attention Block, from the Set Transformers paper

Stars: ✭ 21 (-83.85%)

Mutual labels: artificial-intelligence, attention-mechanism

Linear Attention Transformer

Transformer based on a variant of attention that has linear complexity with respect to sequence length

Stars: ✭ 205 (+57.69%)

Mutual labels: artificial-intelligence, attention-mechanism

Reformer Pytorch

Reformer, the efficient Transformer, in Pytorch

Stars: ✭ 1,644 (+1164.62%)

Mutual labels: artificial-intelligence, attention-mechanism

Point Transformer Pytorch

Implementation of the Point Transformer layer, in Pytorch

Stars: ✭ 199 (+53.08%)

Mutual labels: artificial-intelligence, attention-mechanism

Self Attention Cv

Implementation of various self-attention mechanisms focused on computer vision. Ongoing repository.

Stars: ✭ 209 (+60.77%)

Mutual labels: artificial-intelligence, attention-mechanism

Slot Attention

Implementation of Slot Attention from GoogleAI

Stars: ✭ 168 (+29.23%)

Mutual labels: artificial-intelligence, attention-mechanism

Vit Pytorch

Implementation of Vision Transformer, a simple way to achieve SOTA in vision classification with only a single transformer encoder, in Pytorch

Stars: ✭ 7,199 (+5437.69%)

Mutual labels: artificial-intelligence, attention-mechanism

Routing Transformer

Fully featured implementation of Routing Transformer

Stars: ✭ 149 (+14.62%)

Mutual labels: artificial-intelligence, attention-mechanism

Performer Pytorch

An implementation of Performer, a linear attention-based transformer, in Pytorch

Stars: ✭ 546 (+320%)

Mutual labels: artificial-intelligence, attention-mechanism

Simplednn

SimpleDNN is a lightweight open-source machine learning library written in Kotlin, designed to support relevant neural network architectures in natural language processing tasks

Stars: ✭ 81 (-37.69%)

Mutual labels: artificial-intelligence, attention-mechanism

Lambda Networks

Implementation of LambdaNetworks, a new approach to image recognition that reaches SOTA with less compute

Stars: ✭ 1,497 (+1051.54%)

Mutual labels: artificial-intelligence, attention-mechanism

Perceiver - Pytorch

Install

$ pip install perceiver-pytorch

Usage

import torch
from perceiver_pytorch import Perceiver

model = Perceiver(
    input_channels = 3,          # number of channels for each token of the input
    input_axis = 2,              # number of axes for input data (2 for images, 3 for video)
    num_freq_bands = 6,          # number of freq bands, with original value (2 * K + 1)
    max_freq = 10.,              # maximum frequency, hyperparameter depending on how fine the data is
    depth = 6,                   # depth of net
    num_latents = 256,           # number of latents, or induced set points, or centroids (different papers give it different names)
    cross_dim = 512,             # cross attention dimension
    latent_dim = 512,            # latent dimension
    cross_heads = 1,             # number of heads for cross attention (the paper uses 1)
    latent_heads = 8,            # number of heads for latent self attention, 8
    cross_dim_head = 64,
    latent_dim_head = 64,
    num_classes = 1000,          # output number of classes
    attn_dropout = 0.,
    ff_dropout = 0.,
    weight_tie_layers = False    # whether to weight tie layers (optional, as indicated in the diagram)
)

img = torch.randn(1, 224, 224, 3) # 1 ImageNet-sized image, channels last

model(img) # (1, 1000)
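To get a feel for the numbers in the configuration above, here is a rough back-of-the-envelope sketch in plain Python. It assumes, following the description in the Perceiver paper, that each input axis contributes `2 * K + 1` position-encoding values (a sine and a cosine per frequency band plus the raw coordinate) that are concatenated to the input channels, and that cross attention lets the latents query every input token. The helper names (`fourier_channels`, `token_dim`, `attention_entries`) are made up for illustration and are not part of `perceiver_pytorch`.

```python
def fourier_channels(input_axis: int, num_freq_bands: int) -> int:
    """Assumed position-encoding width per token: for every axis,
    sin and cos at each frequency band plus the raw coordinate."""
    return input_axis * (2 * num_freq_bands + 1)


def token_dim(input_channels: int, input_axis: int, num_freq_bands: int) -> int:
    """Assumed width of one input token after concatenating the encoding."""
    return input_channels + fourier_channels(input_axis, num_freq_bands)


def attention_entries(num_queries: int, num_keys: int) -> int:
    """Number of entries in one attention matrix (per head)."""
    return num_queries * num_keys


# Values taken from the usage example: a 224 x 224 RGB image, 6 bands, 256 latents.
num_tokens = 224 * 224                                 # one token per pixel
dim = token_dim(input_channels=3, input_axis=2, num_freq_bands=6)

cross = attention_entries(256, num_tokens)             # latents attend to pixels
full = attention_entries(num_tokens, num_tokens)       # pixels attend to pixels

print(f"tokens: {num_tokens}, channels per token: {dim}")
print(f"cross-attention entries: {cross:,}")
print(f"full self-attention entries: {full:,} ({full // cross}x larger)")
```

Under these assumptions, the latent bottleneck keeps the attention matrix at `num_latents x num_tokens` instead of `num_tokens x num_tokens`, which is what makes attending over raw pixels affordable.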

Experimental

I have also included a version of Perceiver that includes bottom-up (in addition to top-down) attention, using the same scheme as presented in the original Set Transformers paper as the Induced Set Attention Block.

You simply have to change the above import to

from perceiver_pytorch.experimental import Perceiver

Citations

@misc{jaegle2021perceiver,
    title         = {Perceiver: General Perception with Iterative Attention},
    author        = {Andrew Jaegle and Felix Gimeno and Andrew Brock and Andrew Zisserman and Oriol Vinyals and Joao Carreira},
    year          = {2021},
    eprint        = {2103.03206},
    archivePrefix = {arXiv},
    primaryClass  = {cs.CV}
}
