lucidrains / Slot Attention

Licence: MIT

Implementation of Slot Attention from GoogleAI

Stars: ✭ 168

Programming Languages

python

139335 projects - #7 most used programming language

Projects that are alternatives of or similar to Slot Attention

Isab Pytorch

An implementation of (Induced) Set Attention Block, from the Set Transformers paper

Stars: ✭ 21 (-87.5%)

Mutual labels: artificial-intelligence, attention-mechanism

Simplednn

SimpleDNN is a lightweight open-source machine learning library written in Kotlin, designed to support relevant neural network architectures in natural language processing tasks

Stars: ✭ 81 (-51.79%)

Mutual labels: artificial-intelligence, attention-mechanism

Global Self Attention Network

A Pytorch implementation of Global Self-Attention Network, a fully-attention backbone for vision tasks

Stars: ✭ 64 (-61.9%)

Mutual labels: artificial-intelligence, attention-mechanism

Alphafold2

To eventually become an unofficial Pytorch implementation / replication of Alphafold2, as details of the architecture get released

Stars: ✭ 298 (+77.38%)

Mutual labels: artificial-intelligence, attention-mechanism

Perceiver Pytorch

Implementation of Perceiver, General Perception with Iterative Attention, in Pytorch

Stars: ✭ 130 (-22.62%)

Mutual labels: artificial-intelligence, attention-mechanism

Bottleneck Transformer Pytorch

Implementation of Bottleneck Transformer in Pytorch

Stars: ✭ 408 (+142.86%)

Mutual labels: artificial-intelligence, attention-mechanism

Ml code

A repository for recording the machine learning code

Stars: ✭ 75 (-55.36%)

Mutual labels: artificial-intelligence, clustering

Linformer Pytorch

My take on a practical implementation of Linformer for Pytorch.

Stars: ✭ 239 (+42.26%)

Mutual labels: artificial-intelligence, attention-mechanism

Lambda Networks

Implementation of LambdaNetworks, a new approach to image recognition that reaches SOTA with less compute

Stars: ✭ 1,497 (+791.07%)

Mutual labels: artificial-intelligence, attention-mechanism

Reformer Pytorch

Reformer, the efficient Transformer, in Pytorch

Stars: ✭ 1,644 (+878.57%)

Mutual labels: artificial-intelligence, attention-mechanism

Vit Pytorch

Implementation of Vision Transformer, a simple way to achieve SOTA in vision classification with only a single transformer encoder, in Pytorch

Stars: ✭ 7,199 (+4185.12%)

Mutual labels: artificial-intelligence, attention-mechanism

Machine Learning With Python

Practice and tutorial-style notebooks covering a wide variety of machine learning techniques

Stars: ✭ 2,197 (+1207.74%)

Mutual labels: artificial-intelligence, clustering

Timesformer Pytorch

Implementation of TimeSformer from Facebook AI, a pure attention-based solution for video classification

Stars: ✭ 225 (+33.93%)

Mutual labels: artificial-intelligence, attention-mechanism

Performer Pytorch

An implementation of Performer, a linear attention-based transformer, in Pytorch

Stars: ✭ 546 (+225%)

Mutual labels: artificial-intelligence, attention-mechanism

L2c

Learning to Cluster. A deep clustering strategy.

Stars: ✭ 262 (+55.95%)

Mutual labels: artificial-intelligence, clustering

Se3 Transformer Pytorch

Implementation of SE3-Transformers for Equivariant Self-Attention, in Pytorch. This specific repository is geared towards integration with eventual Alphafold2 replication.

Stars: ✭ 73 (-56.55%)

Mutual labels: artificial-intelligence, attention-mechanism

X Transformers

A simple but complete full-attention transformer with a set of promising experimental features from various papers

Stars: ✭ 211 (+25.6%)

Mutual labels: artificial-intelligence, attention-mechanism

Self Attention Cv

Implementation of various self-attention mechanisms focused on computer vision. Ongoing repository.

Stars: ✭ 209 (+24.4%)

Mutual labels: artificial-intelligence, attention-mechanism

Ml

A high-level machine learning and deep learning library for the PHP language.

Stars: ✭ 1,270 (+655.95%)

Mutual labels: artificial-intelligence, clustering

Routing Transformer

Fully featured implementation of Routing Transformer

Stars: ✭ 149 (-11.31%)

Mutual labels: artificial-intelligence, attention-mechanism

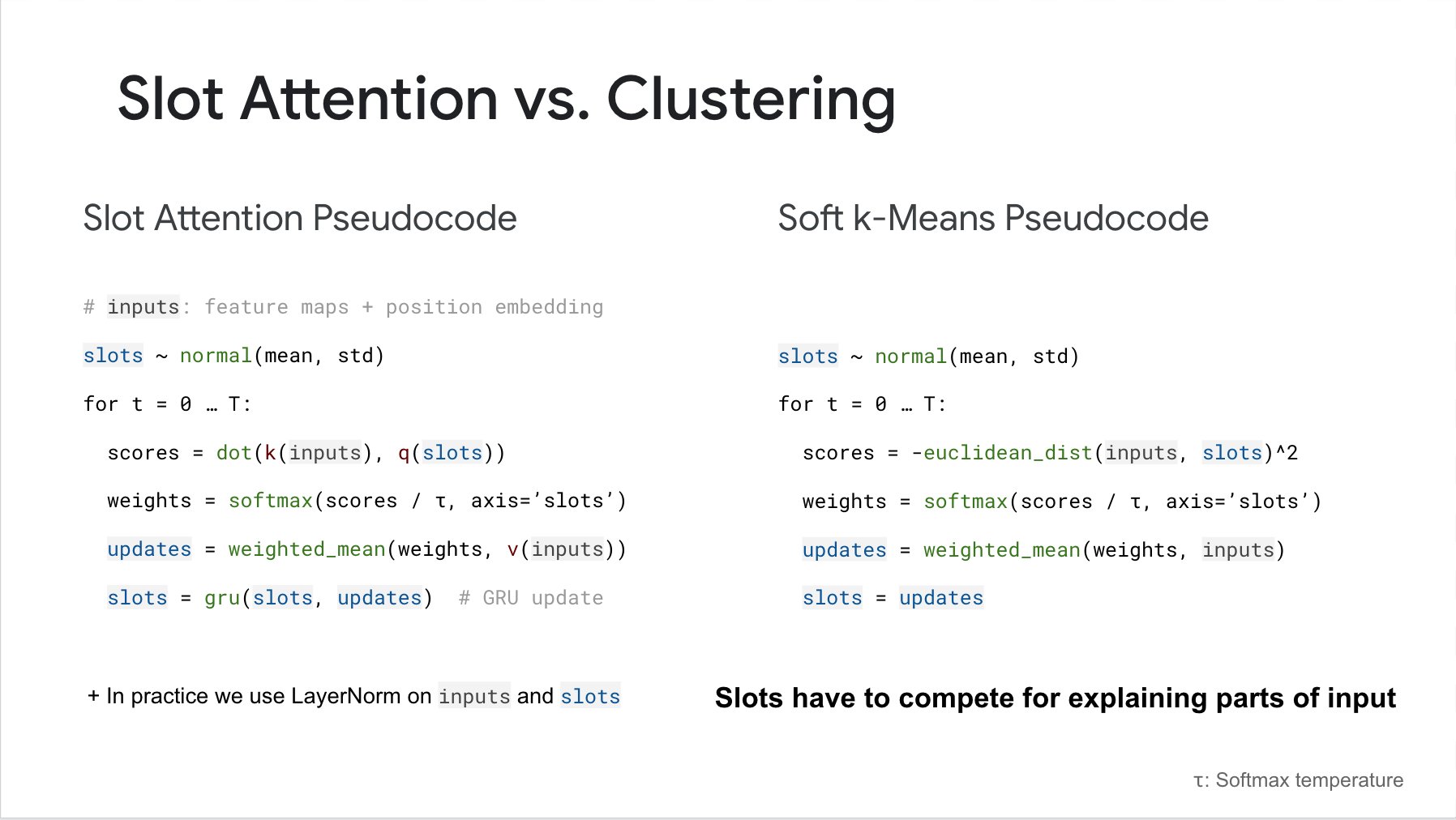

Slot Attention

Implementation of Slot Attention from the paper 'Object-Centric Learning with Slot Attention' in PyTorch. Here is a video that describes what this network can do.

Update: The official repository has been released here

Install

$ pip install slot_attention

Usage

import torch
from slot_attention import SlotAttention

slot_attn = SlotAttention(
    num_slots = 5,
    dim = 512,
    iters = 3   # iterations of attention, defaults to 3
)

inputs = torch.randn(2, 1024, 512)
slot_attn(inputs) # (2, 5, 512)

After training, the network is reported to generalize to a slightly different number of slots (clusters) than it was trained with. You can override the number of slots by passing the num_slots keyword to forward.

slot_attn(inputs, num_slots = 8) # (2, 8, 512)
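To make the mechanism behind these shapes concrete, here is a minimal dependency-free sketch of the slot attention update, written for illustration only. It is not the code of this package: it assumes no learned projections, no GRU, no MLP and no layer norm, and the name slot_attention_sketch is hypothetical. What it keeps is the core idea from the paper: the softmax runs over the slot axis, so slots compete for each input, and each slot then becomes a weighted mean of the inputs it claimed. Because the slots start as draws from one shared Gaussian, the number of slots can be chosen per call.

```python
import math
import random


def slot_attention_sketch(inputs, num_slots, iters=3, seed=0, eps=1e-8):
    """Simplified slot attention over a list of feature vectors.

    Assumptions (not the library's code): no learned projections, GRU,
    MLP or layer norm; each slot is simply replaced by the
    attention-weighted mean of the inputs at every iteration.
    """
    rng = random.Random(seed)
    dim = len(inputs[0])
    scale = dim ** -0.5
    # Slots are random draws from one shared Gaussian, so any number of
    # slots can be requested at call time.
    slots = [[rng.gauss(0.0, 1.0) for _ in range(dim)]
             for _ in range(num_slots)]
    attn = []

    for _ in range(iters):
        # attn[i][j]: how strongly slot j claims input i.
        attn = []
        for x in inputs:
            logits = [scale * sum(a * b for a, b in zip(x, s)) for s in slots]
            peak = max(logits)
            exps = [math.exp(l - peak) for l in logits]
            total = sum(exps)
            # Softmax over the slot axis: slots compete for each input.
            attn.append([e / total for e in exps])

        new_slots = []
        for j in range(num_slots):
            weights = [row[j] + eps for row in attn]
            norm = sum(weights)
            # Weighted mean over the inputs for slot j.
            new_slots.append([
                sum(w * x[d] for w, x in zip(weights, inputs)) / norm
                for d in range(dim)
            ])
        slots = new_slots
    return slots, attn


rng = random.Random(1)
inputs = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(16)]

slots, attn = slot_attention_sketch(inputs, num_slots=5)
print(len(slots), len(slots[0]))  # 5 8

slots, attn = slot_attention_sketch(inputs, num_slots=8)
print(len(slots), len(slots[0]))  # 8 8
```

The sketch handles a single example as plain Python lists; the package itself operates on batched tensors, as in the usage above.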

Citation

@misc{locatello2020objectcentric,
    title = {Object-Centric Learning with Slot Attention},
    author = {Francesco Locatello and Dirk Weissenborn and Thomas Unterthiner and Aravindh Mahendran and Georg Heigold and Jakob Uszkoreit and Alexey Dosovitskiy and Thomas Kipf},
    year = {2020},
    eprint = {2006.15055},
    archivePrefix = {arXiv},
    primaryClass = {cs.LG}
}
