Habitat-Sim

A flexible, high-performance 3D simulator with configurable agents, multiple sensors, and generic 3D dataset handling (with built-in support for Matterport3D, Gibson, Replica, and other datasets). When rendering a scene from the Matterport3D dataset, Habitat-Sim achieves several thousand frames per second (FPS) running single-threaded, and reaches over 10,000 FPS multi-process on a single GPU!

Habitat-Lab uses Habitat-Sim as the core simulator and is a modular high-level library for end-to-end experiments in embodied AI -- defining embodied AI tasks (e.g. navigation, instruction following, question answering), training agents (via imitation or reinforcement learning, or no learning at all as in classical SLAM), and benchmarking their performance on the defined tasks using standard metrics.

Table of contents

- Motivation

- Citing Habitat

- Details

- Performance

- Installation

- Testing

- Documentation

- Rendering to GPU Tensors

- WebGL

- Datasets

- Examples

- Code Style

- Development Tips

- Acknowledgments

- External Contributions

- License

- References

Motivation

AI Habitat enables training of embodied AI agents (virtual robots) in a highly photorealistic & efficient 3D simulator, before transferring the learned skills to reality. This empowers a paradigm shift from 'internet AI' based on static datasets (e.g. ImageNet, COCO, VQA) to embodied AI where agents act within realistic environments, bringing to the fore active perception, long-term planning, learning from interaction, and holding a dialog grounded in an environment.

Citing Habitat

If you use the Habitat platform in your research, please cite the following paper:

```bibtex
@inproceedings{habitat19iccv,
  title     = {Habitat: {A} {P}latform for {E}mbodied {AI} {R}esearch},
  author    = {Manolis Savva and Abhishek Kadian and Oleksandr Maksymets and Yili Zhao and Erik Wijmans and Bhavana Jain and Julian Straub and Jia Liu and Vladlen Koltun and Jitendra Malik and Devi Parikh and Dhruv Batra},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  year      = {2019}
}
```

Habitat-Sim also builds on work contributed by others. If you use contributed methods/models, please cite their works. See the External Contributions section for a list of what was externally contributed and the corresponding work/citation.

Details

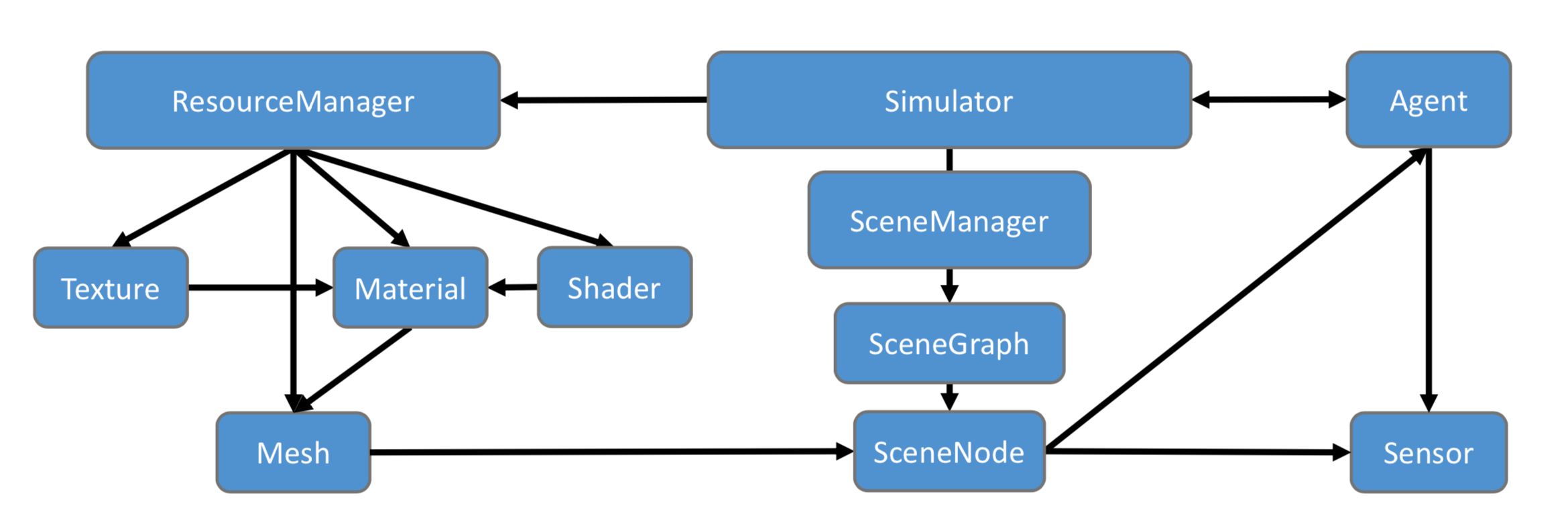

The Habitat-Sim backend module is implemented in C++ and leverages the Magnum graphics middleware library to support cross-platform deployment on a broad variety of hardware configurations. The architecture of the main abstraction classes is shown below. The design of this module ensures a few key properties:

- Memory-efficient management of 3D environment resources (triangle mesh geometry, textures, shaders) ensuring shared resources are cached and re-used

- Flexible, structured representation of 3D environments using SceneGraphs, allowing for programmatic manipulation of object state, and combination of objects from different environments

- High-efficiency rendering engine with multi-attachment render passes for reduced overhead when multiple sensors are active

- Arbitrary numbers of Agents and corresponding Sensors that can be linked to a 3D environment by attachment to a SceneGraph.

Architecture of Habitat-Sim main classes

The Simulator delegates management of all resources related to 3D environments to a ResourceManager that is responsible for loading and caching 3D environment data from a variety of on-disk formats. These resources are used within SceneGraphs at the level of individual SceneNodes that represent distinct objects or regions in a particular Scene. Agents and their Sensors are instantiated by being attached to SceneNodes in a particular SceneGraph.
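The ownership relationships described above can be sketched in a few lines of Python: a cache that loads each asset at most once, and a tree of scene nodes that reference cached assets and to which an agent and its sensor are attached. This is a minimal illustration only; every class and method name (`ResourceCache`, `SceneNode`, `attach`, `walk`) is hypothetical and does not correspond to Habitat-Sim's actual C++ classes or Python bindings.

```python
# Hypothetical illustration of the cache + scene-graph pattern; not Habitat-Sim's API.
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


class ResourceCache:
    """Stand-in for a resource manager: loads each asset once and shares it."""

    def __init__(self, loader: Callable[[str], object]) -> None:
        self._loader = loader
        self._cache: Dict[str, object] = {}
        self.load_count = 0  # how many times the (expensive) loader actually ran

    def get(self, path: str) -> object:
        if path not in self._cache:
            self._cache[path] = self._loader(path)
            self.load_count += 1
        return self._cache[path]


@dataclass
class SceneNode:
    """A scene-graph node that may reference a shared mesh and own child nodes."""

    name: str
    mesh: Optional[object] = None
    children: List["SceneNode"] = field(default_factory=list)

    def attach(self, child: "SceneNode") -> "SceneNode":
        self.children.append(child)
        return child

    def walk(self):
        """Yield this node and all of its descendants, depth-first."""
        yield self
        for child in self.children:
            yield from child.walk()


# Two objects share one cached mesh; an agent with a sensor hangs off the root.
cache = ResourceCache(loader=lambda path: f"<mesh loaded from {path}>")
root = SceneNode("scene_root")
root.attach(SceneNode("chair_1", mesh=cache.get("chair.glb")))
root.attach(SceneNode("chair_2", mesh=cache.get("chair.glb")))
agent = root.attach(SceneNode("agent"))
agent.attach(SceneNode("rgb_sensor"))

print(cache.load_count)  # prints 1: the mesh was loaded once and re-used
print([node.name for node in root.walk()])
```

The point of the cache is that attaching many objects that use the same asset costs one load, which is the memory-efficiency property listed above.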

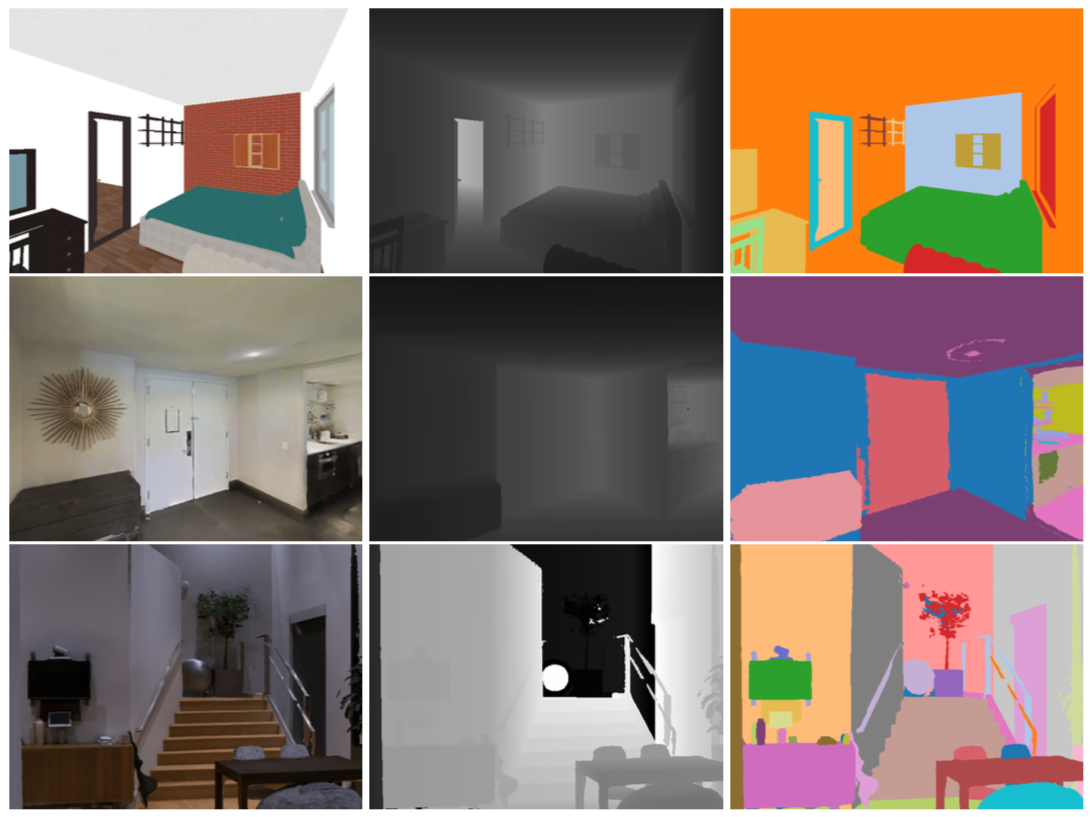

Example rendered sensor observations

Performance

The table below reports performance statistics (in frames per second) for a test scene from the Matterport3D dataset (id 17DRP5sb8fy) on a Xeon E5-2690 v4 CPU and NVIDIA Titan Xp. Single-thread performance reaches several thousand frames per second, while multi-process operation with several independent simulation backends can reach more than 10,000 frames per second on a single GPU!

| Sensors / Resolution | 1 proc, 128 | 1 proc, 256 | 1 proc, 512 | 3 procs, 128 | 3 procs, 256 | 3 procs, 512 | 5 procs, 128 | 5 procs, 256 | 5 procs, 512 |
|---|---|---|---|---|---|---|---|---|---|
| RGB | 4093 | 1987 | 848 | 10638 | 3428 | 2068 | 10592 | 3574 | 2629 |
| RGB + depth | 2050 | 1042 | 423 | 5024 | 1715 | 1042 | 5223 | 1774 | 1348 |
| RGB + depth + semantics* | 709 | 596 | 394 | 1312 | 1219 | 979 | 1521 | 1429 | 1291 |

Previous simulation platforms that have operated on similar datasets typically produce on the order of a couple hundred frames per second. For example, Gibson reports up to about 150 FPS with 8 processes, and MINOS reports up to about 167 FPS with 4 threads.

*Note: The semantic sensor in MP3D houses currently requires additional 3D house meshes with orders of magnitude more geometric complexity, which reduces performance. We expect this to be addressed in future versions, bringing speeds comparable to RGB + depth; stay tuned.

To run the above benchmarks on your machine, see instructions in the examples section.
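One way to read the table is to divide multi-process throughput by single-process throughput at the same resolution. The short Python sketch below does this for the RGB row; the numbers are copied from the table, and "per-process efficiency" (speedup divided by process count) is an illustrative metric introduced here, not one the benchmark reports. At 128 resolution, 3 processes give roughly 2.6x the single-process FPS and 5 processes add essentially nothing more, whereas at 512 the speedup keeps growing (about 2.4x with 3 processes, about 3.1x with 5).

```python
# RGB-only FPS values copied from the benchmark table: {processes: {resolution: fps}}.
rgb_fps = {
    1: {128: 4093, 256: 1987, 512: 848},
    3: {128: 10638, 256: 3428, 512: 2068},
    5: {128: 10592, 256: 3574, 512: 2629},
}


def speedup(table, procs, resolution):
    """Throughput with `procs` processes relative to a single process at the same resolution."""
    return table[procs][resolution] / table[1][resolution]


for procs in (3, 5):
    for res in (128, 256, 512):
        s = speedup(rgb_fps, procs, res)
        print(f"{procs} procs @ {res}: {s:.2f}x speedup, {s / procs:.0%} per-process efficiency")
```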

Installation

Habitat-Sim can be installed in 3 ways:

- Via Conda - Recommended method for most users. Stable release and nightly builds.

- Via Docker - Updated approximately once per year for Habitat Challenge.

- Via Source - For active development.

[Recommended] Conda Packages

Habitat is under active development, and we advise users to restrict themselves to stable releases. Starting with v0.1.4, we provide conda packages for each release. This is the recommended and easiest way to install Habitat-Sim.

Assuming you have conda installed, let's prepare a conda env:

```bash
# We require python>=3.6 and cmake>=3.10
conda create -n habitat python=3.6 cmake=3.14.0
conda activate habitat
pip install -r requirements.txt
```

Next, pick one of the options below depending on your system/needs:

- To install habitat-sim on machines with an attached display:

```bash
conda install habitat-sim -c conda-forge -c aihabitat
```

- To install on headless machines (i.e. without an attached display, e.g. in a cluster) and machines with multiple GPUs:

```bash
conda install habitat-sim headless -c conda-forge -c aihabitat
```

- To install habitat-sim with Bullet physics (include `headless` only on a headless system):

```bash
conda install habitat-sim withbullet [headless] -c conda-forge -c aihabitat
```

We also provide a nightly conda build for the master branch. However, this should only be used if you need a specific feature not yet in the latest release version. To get the nightly build of the latest master, simply swap -c aihabitat for -c aihabitat-nightly.
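The package variants above combine mechanically. The helper below is a hypothetical convenience, not part of Habitat-Sim, that assembles the install command for a given combination of options; it only restates the commands listed in this section. Treating a missing `DISPLAY` variable as "headless" is a rough heuristic assumed here, and it does not cover the multi-GPU case, where the headless build is also recommended.

```python
import os


def conda_install_command(headless: bool = False, with_bullet: bool = False,
                          nightly: bool = False) -> str:
    """Assemble the habitat-sim conda install command from the options described above."""
    parts = ["conda", "install", "habitat-sim"]
    if with_bullet:
        parts.append("withbullet")  # Bullet physics variant
    if headless:
        parts.append("headless")  # no attached display, or multiple GPUs
    channel = "aihabitat-nightly" if nightly else "aihabitat"
    parts += ["-c", "conda-forge", "-c", channel]
    return " ".join(parts)


# Heuristic (an assumption): no DISPLAY variable usually means no attached display.
print(conda_install_command(headless="DISPLAY" not in os.environ))
```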

Docker Image

We provide a pre-built Docker container for habitat-lab and habitat-sim; refer to habitat-docker-setup.

From Source

Read build instructions and common build issues.

Testing

- Download the test scenes from this link and extract them locally.

- Interactive testing: Use the interactive viewer included with Habitat-Sim:

```bash
# ./build/viewer if compiling locally
habitat-viewer /path/to/data/scene_datasets/habitat-test-scenes/skokloster-castle.glb
```

You should be able to control an agent in this test scene. Use W/A/S/D keys to move forward/left/backward/right and arrow keys to control gaze direction (look up/down/left/right). Try to find the picture of a woman surrounded by a wreath. Have fun!

- Physical interactions: If you would like to try out Habitat with dynamic objects, first download our pre-processed object dataset from this link and extract it as `habitat-sim/data/objects/`. To run an interactive C++ example GUI application with physics enabled, run:

```bash
# ./build/viewer if compiling locally
habitat-viewer --enable-physics /path/to/data/scene_datasets/habitat-test-scenes/van-gogh-room.glb
```

Use W/A/S/D keys to move forward/left/backward/right and arrow keys to control gaze direction (look up/down/left/right). Press 'o' to add a random object, 'p'/'f'/'t' to apply an impulse/force/torque to the last added object, or 'u' to remove it. Press 'k' to kinematically nudge the last added object in a random direction. Press 'v' to invert gravity.

- Non-interactive testing: Run the example script:

```bash
python examples/example.py --scene /path/to/data/scene_datasets/habitat-test-scenes/skokloster-castle.glb
```

The agent will traverse a particular path, and you should see the performance stats at the very end, something like this:

```
640 x 480, total time: 3.208 sec. FPS: 311.7
```

Note that the test scenes do not provide semantic meshes. If you would like to test the semantic sensors via `example.py`, please use the data from the Matterport3D dataset (see Datasets). We have also provided an example demo for reference.

To run a physics example in Python (after building with "Physics simulation via Bullet"):

```bash
python examples/example.py --scene /path/to/data/scene_datasets/habitat-test-scenes/skokloster-castle.glb --enable_physics
```

Note that in this mode the agent will be frozen and oriented toward the spawned physical objects. Additionally, `--save_png` can be used to output agent visual observation frames of the physical scene to the current directory.
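If you want to compare runs automatically, the summary line can be parsed. The sketch below assumes the line keeps exactly the format of the sample output shown in this section (`640 x 480, total time: 3.208 sec. FPS: 311.7`); the format may differ across versions, and `parse_stats` is a hypothetical helper. Multiplying FPS by total time recovers the number of frames rendered, roughly 1,000 for the sample line.

```python
import re

# Matches the sample summary line; assumes example.py keeps this exact format.
STATS_RE = re.compile(
    r"(?P<width>\d+) x (?P<height>\d+), total time: (?P<seconds>[\d.]+) sec\. FPS: (?P<fps>[\d.]+)"
)


def parse_stats(line: str) -> dict:
    """Extract resolution, wall time, and FPS from one summary line."""
    match = STATS_RE.search(line)
    if match is None:
        raise ValueError(f"unrecognized stats line: {line!r}")
    return {
        "width": int(match["width"]),
        "height": int(match["height"]),
        "seconds": float(match["seconds"]),
        "fps": float(match["fps"]),
    }


stats = parse_stats("640 x 480, total time: 3.208 sec. FPS: 311.7")
approx_frames = round(stats["fps"] * stats["seconds"])  # frames rendered, approximately
print(stats, approx_frames)
```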

Common testing issues

- If you are running on a remote machine and experience display errors when initializing the simulator, e.g.

```
X11: The DISPLAY environment variable is missing
Could not initialize GLFW
```

ensure you do not have `DISPLAY` defined in your environment (run `unset DISPLAY` to undefine the variable).

- If you see libGL errors like:

```
X11: The DISPLAY environment variable is missing
Could not initialize GLFW
```

chances are your libGL is located at a non-standard location. See e.g. this issue.
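If you would rather not change your shell session, the effect of `unset DISPLAY` can be scoped to a single run. The Python sketch below uses hypothetical helpers, not shipped with Habitat-Sim: it copies the current environment, drops `DISPLAY`, and launches a command in a child process that does not inherit it. It only addresses the first issue above; it does nothing for a libGL installed at a non-standard location.

```python
import os
import subprocess
from typing import Dict, List, Mapping, Optional


def env_without_display(base: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Copy of the environment with DISPLAY removed, mirroring `unset DISPLAY`."""
    env = dict(os.environ if base is None else base)
    env.pop("DISPLAY", None)  # no-op if DISPLAY was not set
    return env


def run_without_display(cmd: List[str]) -> int:
    """Run `cmd` in a child process that does not see DISPLAY; return its exit code."""
    return subprocess.run(cmd, env=env_without_display()).returncode


# Example (not executed here):
# run_without_display(["python", "examples/example.py", "--scene",
#                      "/path/to/data/scene_datasets/habitat-test-scenes/skokloster-castle.glb"])
```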

Documentation

Browse the online Habitat-Sim documentation.

To get you started, see the Lighting Setup tutorial for adding new objects to existing scenes and relighting the scene & objects. The Image Extractor tutorial shows how to get images from scenes loaded in Habitat-Sim.

Rendering to GPU Tensors

We support transferring rendering results directly to a PyTorch tensor via CUDA-GL Interop.

This feature is built when Habitat-Sim is compiled with CUDA, i.e. built with `--with-cuda`. To enable it, set the `gpu2gpu_transfer` flag of the sensor specification(s) to `True`.

This is implemented in a way that is reasonably agnostic to the exact GPU-Tensor library being used, but we currently have only implemented support for PyTorch.

WebGL

- Download the test scenes and extract them locally into habitat-sim, creating habitat-sim/data.

- Download and install emscripten (you need at least version 1.38.48, newer versions such as 2.0.6 work too)

- Activate your emsdk environment

- Build using:

```bash
./build_js.sh [--bullet]
```

- Run a web server:

```bash
python -m http.server 8000 --bind 127.0.0.1
```

- Open http://127.0.0.1:8000/build_js/esp/bindings_js/bindings.html

Datasets

- The full Matterport3D (MP3D) dataset for use with Habitat can be downloaded using the official Matterport3D download script as follows:

```bash
python download_mp.py --task habitat -o path/to/download/
```

You only need the habitat zip archive and not the entire Matterport3D dataset. Note that this download script requires Python 2.7 to run.

- The Gibson dataset for use with Habitat can be downloaded by agreeing to the terms of use in the Gibson repository.

- Semantic information for Gibson is available from the 3DSceneGraph dataset. The semantic data will need to be converted before it can be used within Habitat:

```bash
tools/gen_gibson_semantics.sh /path/to/3DSceneGraph_medium/automated_graph /path/to/GibsonDataset /path/to/output
```

To use semantics, you will need to enable the semantic sensor.

- To work with the Replica dataset, you need a file called `sorted_faces.bin` for each model. Such files (one file per model), along with a convenient setup script, can be downloaded from here: sorted_faces.zip. You need to:

  - Download the file from the above link;
  - Unzip it;
  - Use the script within to copy each data file to its corresponding folder (you will have to provide the path to the folder containing all Replica models, for example `~/models/replica/`).
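After running the setup script, a quick check can confirm that every Replica model received its file. The sketch below is a hypothetical helper, not part of the provided script: it assumes each immediate subfolder of the Replica root is one model, and it searches each model folder recursively because the exact location of `sorted_faces.bin` inside a model folder is left to the setup script.

```python
from pathlib import Path
from typing import List


def models_missing_sorted_faces(replica_root: str) -> List[str]:
    """Names of model folders under `replica_root` with no sorted_faces.bin anywhere inside."""
    root = Path(replica_root).expanduser()
    missing = []
    for model_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        # rglob searches the whole model folder, whatever its internal layout.
        if next(model_dir.rglob("sorted_faces.bin"), None) is None:
            missing.append(model_dir.name)
    return missing


# Example: print(models_missing_sorted_faces("~/models/replica/"))
```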

Examples

Load a specific MP3D or Gibson house:

```bash
python examples/example.py --scene path/to/mp3d/house_id.glb
```

Additional arguments to `example.py` are provided to change the sensor configuration, print statistics of the semantic annotations in a scene, compute action-space shortest path trajectories, and enable other useful functionality. Refer to the `example.py` and `demo_runner.py` source files for an overview.

To reproduce the benchmark table from above, run:

```bash
python examples/benchmark.py --scene /path/to/mp3d/17DRP5sb8fy/17DRP5sb8fy.glb
```
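If you script benchmark runs over several houses, it can help to build the scene path from a house ID. The sketch below assumes the `<mp3d_root>/<house_id>/<house_id>.glb` layout used in the benchmark command (the `example.py` command above uses a flatter placeholder path, so adjust to your download); `mp3d_scene_path` and `benchmark_command` are hypothetical helper names.

```python
import sys
from pathlib import Path
from typing import List


def mp3d_scene_path(mp3d_root: str, house_id: str) -> Path:
    """Assumes the <root>/<house_id>/<house_id>.glb layout of the benchmark command."""
    return Path(mp3d_root) / house_id / f"{house_id}.glb"


def benchmark_command(mp3d_root: str, house_id: str = "17DRP5sb8fy") -> List[str]:
    """argv list for examples/benchmark.py, ready to pass to subprocess.run()."""
    return [sys.executable, "examples/benchmark.py",
            "--scene", str(mp3d_scene_path(mp3d_root, house_id))]


# Example: subprocess.run(benchmark_command("/path/to/mp3d"), check=True)
```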

Code Style

We use clang-format-12 for linting and code style enforcement of C++ code.

Code style follows the Google C++ guidelines.

Install clang-format-12 through brew install clang-format on macOS. For other systems, clang-format-12 can be installed via conda install clangdev -c conda-forge or by downloading binaries or sources from releases.llvm.org/download.

For vim integration, add `map <C-K> :%!clang-format<cr>` to your `.vimrc` file and use Ctrl+K to format the entire file.

An integration plugin is available for VS Code.

We use black and isort for linting and code style of python code.

Install black and isort through pip install -U black isort.

They can then be run via `black .` and `isort`.

We use eslint with prettier plugin for linting, formatting and code style of JS code.

Install these dependencies through `npm install`. Then, to fix linting/formatting errors, run `npm run lint-fix`. Make sure you have Node.js version > 8 for this.

We also offer pre-commit hooks to help with automatically formatting code.

Install the pre-commit hooks with pip install pre-commit && pre-commit install.

Development Tips

- Install `ninja` (`sudo apt install ninja-build` on Linux, or `brew install ninja` on macOS) for significantly faster incremental builds.

- Install `ccache` (`sudo apt install ccache` on Linux, or `brew install ccache` on macOS) for significantly faster clean rebuilds and builds with slightly different settings.

- You can skip reinstalling magnum every time by adding the argument `--skip-install-magnum` to either `build.sh` or `setup.py`. Note that you will still need to install magnum bindings once.

- Arguments to `build.sh` and `setup.py` can be cached between subsequent invocations with the flag `--cache-args` on the first invocation.

Acknowledgments

The Habitat project would not have been possible without the support and contributions of many individuals. We would like to thank Xinlei Chen, Georgia Gkioxari, Daniel Gordon, Leonidas Guibas, Saurabh Gupta, Or Litany, Marcus Rohrbach, Amanpreet Singh, Devendra Singh Chaplot, Yuandong Tian, and Yuxin Wu for many helpful conversations and guidance on the design and development of the Habitat platform.

External Contributions

- If you use the noise model from PyRobot, please cite their technical report.

  This applies specifically to the noise model used for the noisy control functions named `pyrobot_*`, defined in `habitat_sim/agent/controls/pyrobot_noisy_controls.py`.

- If you use the Redwood Depth Noise Model, please cite their paper.

  This applies specifically to the noise model defined in `habitat_sim/sensors/noise_models/redwood_depth_noise_model.py` and `src/esp/sensor/RedwoodNoiseModel.*`.

License

Habitat-Sim is MIT licensed. See the LICENSE for details.

References

- Habitat: A Platform for Embodied AI Research. Manolis Savva, Abhishek Kadian, Oleksandr Maksymets, Yili Zhao, Erik Wijmans, Bhavana Jain, Julian Straub, Jia Liu, Vladlen Koltun, Jitendra Malik, Devi Parikh, Dhruv Batra. IEEE/CVF International Conference on Computer Vision (ICCV), 2019.