VINS-Mono:中文注释

A Robust and Versatile Monocular Visual-Inertial State Estimator

VINS-Mono is a real-time SLAM framework for Monocular Visual-Inertial Systems. It uses an optimization-based sliding window formulation for providing high-accuracy visual-inertial odometry. It features efficient IMU pre-integration with bias correction, automatic estimator initialization, online extrinsic calibration, failure detection and recovery, loop detection, and global pose graph optimization. VINS-Mono is primarily designed for state estimation and feedback control of autonomous drones, but it is also capable of providing accurate localization for AR applications. This code runs on Linux, and is fully integrated with ROS. For iOS mobile implementation, please go to VINS-Mobile.

Authors: Tong Qin, Peiliang Li, Zhenfei Yang, and Shaojie Shen from the HUKST Aerial Robotics Group

Videos:

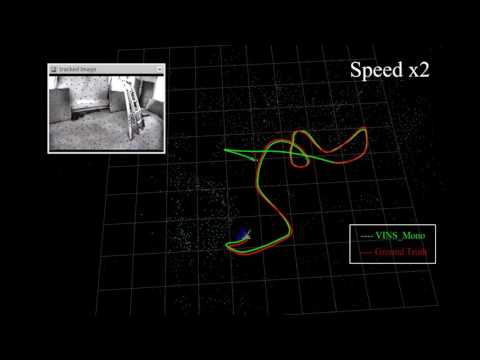

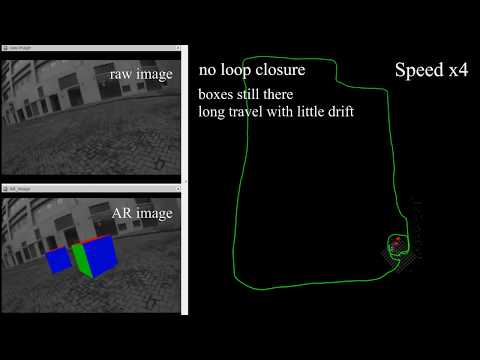

EuRoC dataset; Indoor and outdoor performance; AR application;

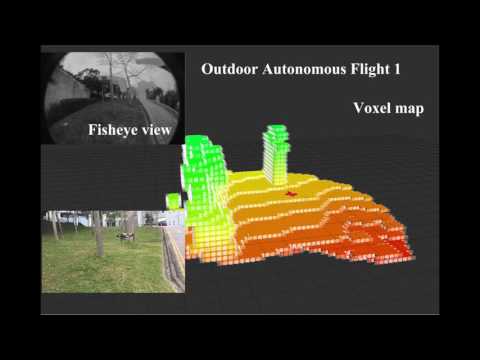

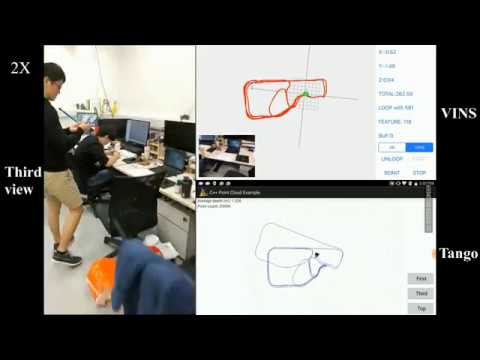

MAV application; Mobile implementation (Video link for mainland China friends: Video1 Video2 Video3 Video4 Video5)

Related Papers

- VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator, Tong Qin, Peiliang Li, Zhenfei Yang, Shaojie Shen arXiv:1708.03852

- Autonomous Aerial Navigation Using Monocular Visual-Inertial Fusion, Yi Lin, Fei Gao, Tong Qin, Wenliang Gao, Tianbo Liu, William Wu, Zhenfei Yang, Shaojie Shen, J Field Robotics. 2017;00:1–29. https://doi.org/10.1002/rob.21732

@article{qin2017vins,

title={VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator},

author={Qin, Tong and Li, Peiliang and Shen, Shaojie},

journal={arXiv preprint arXiv:1708.03852},

year={2017}

}

@article{Lin17,

Author = {Y. Lin and F. Gao and T. Qin and W. Gao and T. Liu and W. Wu and Z. Yang and S. Shen},

Journal = jfr,

Title = {Autonomous Aerial Navigation Using Monocular Visual-Inertial Fusion},

Volume = {00},

Pages = {1-29},

Year = {2017}}

If you use VINS-Mono for your academic research, please cite at least one of our related papers.

1. Prerequisites

1.1 Ubuntu and ROS Ubuntu 14.04 16.04. ROS Indigo, Kinetic. ROS Installation additional ROS pacakge

sudo apt-get install ros-YOUR_DISTRO-cv-bridge ros-YOUR_DISTRO-tf ros-YOUR_DISTRO-message-filters ros-YOUR_DISTRO-image-transport

If you install ROS Kinetic, please update opencv3 with

sudo apt-get install ros-kinetic-opencv3

1.2. Ceres Solver Follow Ceres Installation, remember to make install. (Our testing environment: Ubuntu 14.04, ROS Indigo, OpenCV 2.4.8, Eigen 3.2.0)

2. Build VINS-Mono on ROS

Clone the repository and catkin_make:

cd ~/catkin_ws/src

git clone https://github.com/HKUST-Aerial-Robotics/VINS-Mono.git

cd ../

catkin_make

source ~/catkin_ws/devel/setup.bash

3. Performance on EuRoC dataset

3.1 Download EuRoC MAV Dataset. Although it contains stereo cameras, we only use one camera.

3.2 Open three terminals, launch the vins_estimator , rviz and play the bag file respectively. Take MH_05 as example

roslaunch vins_estimator euroc.launch

roslaunch vins_estimator vins_rviz.launch

rosbag play YOUR_PATH_TO_DATASET/MH_05_difficult.bag

(If you fail to open vins_rviz.launch, just open an empty rviz, then load the config file: file -> Open Config-> YOUR_VINS_FOLDER/config/vins_rviz_config.rviz) /vins_estimator/path is the IMU center's trajectory, /vins_estimator/odometry is the IMU center's odometry and /vins_estimator/camera_pose is the camera's pose.

3.3 (Optional) Visualize ground truth. We write a naive benchmark publisher to help you visualize the ground truth. It uses a naive strategy to align VINS with ground truth. Just for visualization. not for quantitative comparison on academic publications.

roslaunch benchmark_publisher publish.launch sequence_name:=MH_05_difficult

(Green line is VINS result, red line is ground truth).

3.4 (Optional) You can even run EuRoC without extrinsic parameters between camera and IMU. We will calibrate them online. Replace the first command with:

roslaunch vins_estimator euroc_no_extrinsic_param.launch

No extrinsic parameters in that config file. Waiting a few seconds for initial calibration. Sometimes you cannot feel any difference as the calibration is done quickly.

4. AR Demo

4.1 Download the bag file, which is collected from HKUST Robotic Institute. For friends in mainland China, download from bag file.

4.2 Open three terminals, launch the ar_demo, rviz and play the bag file respectively.

roslaunch ar_demo 3dm_bag.launch

roslaunch ar_demo ar_rviz.launch

rosbag play YOUR_PATH_TO_DATASET/ar_box.bag

We put one 0.8m x 0.8m x 0.8m virtual box in front of your view.

5. Run with your device

Suppose you are familiar with ROS and you can get a camera and an IMU with raw metric measurements in ROS topic, you can follow these steps to set up your device. For beginners, we highly recommend you to first try out VINS-Mobile if you have iOS devices since you don't need to set up anything.

5.1 Change to your topic name in the config file. The image should exceed 20Hz and IMU should exceed 100Hz. Both image and IMU should have the accurate time stamp.

5.2 Camera calibration:

We support the pinhole model and the MEI model. You can calibrate your camera with any tools you like. Just write the parameters in the config file in the right format.

5.3 Camera-Imu extrinsic parameters:

If you have seen the config files for EuRoC and AR demos, you can find that we just use coarse values. If you familiar with transformation, you can figure out the rotation and position by your eyes or via hand measurements. Then write these values into config as the initial guess. Our estimator will refine extrinsic parameters online. If you don't know anything about the camera-IMU transformation, just ignore the extrinsic parameters and set the estimate_extrinsic to 2, and rotate your device set at the beginning for a few seconds. When the system works successfully, we will save the calibration result. you can use these result as initial values for next time. An example of how to set the extrinsic parameters is inextrinsic_parameter_example

5.4 Other parameter settings: Details are included in the config file.

5.5 Performance on different devices:

(global shutter camera + synchronized high-end IMU, e.g. VI-Sensor) > (global shutter camera + synchronized low-end IMU, e.g. camera+DJI A3) > (global camera + unsync high frequency IMU) > (global camera + unsync low frequency IMU) > (rolling camera + unsync low frequency IMU).

DO NOT start with a rolling shutter camera and unsync IMU (such as DJI M100 + Logitech web camera) at beginning.

6. Acknowledgements

We use ceres solver for non-linear optimization and DBoW2 for loop detection, and a generic camera model.

7. Licence

The source code is released under GPLv3 license.

We are still working on improving the code reliability. For any technical issues, please contact Tong QIN <tong.qinATconnect.ust.hk> or Peiliang LI <pliapATconnect.ust.hk>.

For commercial inquiries, please contact Shaojie SHEN <eeshaojieATust.hk>